An administrator has a Nutanix Files deployment hosted on an AHV-based Nutanix cluster, scaled out to four FSVMs hosting several department shares. In the event of a ransomware attack, files need to be quickly recovered from a self-hosted snapshot.

How can this be accomplished?

Configure an Async DR Protection Domain.

Install NGT and enable self-service restore.

Configure a DR Availability Zone.

Use File Analytics to enable self-service restore.

Self-Service Restore (SSR) requires Nutanix Guest Tools (NGT) installed on client VMs. SSR lets end users restore files and folders directly from snapshots through Windows Previous Versions, enabling rapid ransomware recovery without IT intervention.

Configure an Async DR Protection Domain / Configure a DR Availability Zone: Async DR and Availability Zones provide site-level disaster recovery, not granular file recovery.

Use File Analytics to enable self-service restore: File Analytics provides insights but cannot enable restores.

Which two protocols can be enabled during a Nutanix Files installation? (Choose two.)

S3

NFS

SMB

iSCSI

During a Nutanix Files installation, the two protocols that can be enabled are NFS (Network File System) and SMB (Server Message Block). Nutanix Files is a scale-out file storage solution designed to provide file-sharing services, supporting both NFS for Linux/Unix environments and SMB for Windows environments.

The Nutanix Unified Storage Administration (NUSA) course states, “Nutanix Files supports NFS and SMB protocols, which can be enabled during the installation and configuration of a file server to provide file-sharing services for diverse workloads.” NFS is typically used for Unix/Linux clients, while SMB is used for Windows clients, allowing Nutanix Files to serve a wide range of applications and users.

The Nutanix Certified Professional - Unified Storage (NCP-US) study guide further clarifies that “during Nutanix Files deployment, administrators can enable NFS and SMB protocols based on the needs of the environment, ensuring compatibility with both Linux and Windows clients.” These protocols are configured at the file server level, and shares can be created with either or both protocols enabled.

The other options are incorrect:

S3: The S3 protocol is specific to Nutanix Objects, an object storage solution, and is not supported by Nutanix Files, which focuses on file-level storage.

iSCSI: The iSCSI protocol is used for block storage and is supported by Nutanix Volumes, not Nutanix Files, which is designed for file-sharing protocols like NFS and SMB.

The NUSA course documentation emphasizes that “Nutanix Files installation allows the administrator to select NFS and SMB protocols to meet the file-sharing requirements of the organization, ensuring broad compatibility across client environments.”
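The product-to-protocol split described above can be written as a small lookup. This is a minimal sketch that only encodes the mapping stated in this explanation (Files for NFS/SMB, Objects for S3, Volumes for iSCSI); the function name is hypothetical and not part of any Nutanix tooling.

```python
# Protocol-to-product mapping as described in the explanation above.
PROTOCOL_TO_PRODUCT = {
    "NFS": "Nutanix Files",
    "SMB": "Nutanix Files",
    "S3": "Nutanix Objects",
    "iSCSI": "Nutanix Volumes",
}


def files_install_protocols(candidates):
    """Return, sorted, the candidate protocols a Files installation can enable."""
    return sorted(p for p in candidates if PROTOCOL_TO_PRODUCT.get(p) == "Nutanix Files")


print(files_install_protocols(["S3", "NFS", "SMB", "iSCSI"]))  # ['NFS', 'SMB']
```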

Question:

A user with Edit Buckets permission has been tasked with deleting old Nutanix Objects buckets created by a former employee.

Why is this user unable to execute the task?

User is only able to delete buckets assigned to them.

The buckets don't have Object Versioning enabled.

The buckets don't have a Lifecycle Policy associated.

User does not have the Delete Buckets permission.

In Nutanix Objects, bucket management permissions are granularly controlled. The Edit Buckets permission allows a user to modify bucket configurations (such as policy changes, tagging, and settings), but it does not grant the ability to delete the bucket.

From the NUSA training:

“The Delete Buckets permission is separate from Edit Buckets. Users with Edit Buckets can change configurations but cannot remove the bucket itself.”

Thus, the user’s inability to delete buckets stems from lacking the explicit Delete Buckets permission.
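The separation between the two permissions can be modelled as one gate per action, where holding Edit never implies Delete. This is a minimal sketch: the enum members and action names are hypothetical stand-ins, not the identifiers Nutanix uses in its role definitions.

```python
from enum import Enum, auto


class Permission(Enum):
    # Hypothetical names for the two separate permissions described above;
    # not the actual Nutanix role-definition identifiers.
    EDIT_BUCKETS = auto()
    DELETE_BUCKETS = auto()


# Each (hypothetical) action is gated by exactly one permission.
REQUIRED = {
    "update_bucket_policy": Permission.EDIT_BUCKETS,
    "tag_bucket": Permission.EDIT_BUCKETS,
    "delete_bucket": Permission.DELETE_BUCKETS,
}


def can_perform(granted: set, action: str) -> bool:
    """True only if the user holds the permission that gates the action."""
    return REQUIRED[action] in granted


user = {Permission.EDIT_BUCKETS}
print(can_perform(user, "tag_bucket"))     # True
print(can_perform(user, "delete_bucket"))  # False
```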

What should the administrator do to satisfy the configuration requirements?

Configure Lifecycle rules with enabled tiering for AWS S3 and Objects instance.

Configure Lifecycle rule with enabled tiering for AWS S3 and replication for Objects instance.

Configure Lifecycle rule with enabled replication for AWS S3 and tiering for Objects instance.

Configure Lifecycle rules with enabled replication for AWS S3 and Objects instance.

To satisfy the configuration requirements for managing data lifecycle in Nutanix Unified Storage, the administrator should configure Lifecycle rules with enabled tiering for AWS S3 and Objects instance. Nutanix Data Lens, which integrates with Nutanix Objects and supports tiering to cloud storage like AWS S3, allows administrators to define lifecycle rules to automatically tier data to cost-effective storage based on access patterns or age.

The Nutanix Unified Storage Administration (NUSA) course explains that “Nutanix Data Lens enables lifecycle management through tiering policies that move data from Nutanix Objects to cloud storage, such as AWS S3, to optimize storage costs.” Lifecycle rules in Data Lens can be configured to tier infrequently accessed data to AWS S3, which supports tiering to storage classes like S3 Standard-Infrequent Access or S3 Glacier.

The Nutanix Certified Professional - Unified Storage (NCP-US) study guide states that “lifecycle rules in Nutanix Data Lens are used to configure tiering for Nutanix Objects, allowing data to be moved to AWS S3 for long-term storage or archival.” This applies to both Nutanix Objects and AWS S3, as Data Lens supports tiering policies for both environments to ensure efficient data placement.
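On the AWS S3 side, tiering to cheaper storage classes is expressed as a bucket lifecycle configuration made of transitions, with no replication involved. The sketch below builds such a document in the JSON shape used by the AWS S3 lifecycle configuration API; the rule ID, prefix, and day counts are illustrative assumptions, and this is the AWS format, not the Nutanix Data Lens or Objects configuration format.

```python
import json


def tiering_rule(rule_id: str, prefix: str, ia_days: int, glacier_days: int) -> dict:
    """Build one S3 lifecycle rule that only tiers (transitions) objects.

    Objects under `prefix` move to Standard-IA after `ia_days` and to
    Glacier after `glacier_days`. No replication is configured here.
    """
    if ia_days < 30:
        # S3 does not accept Standard-IA transitions earlier than 30 days after creation.
        raise ValueError("Standard-IA transition must be at least 30 days")
    if glacier_days <= ia_days:
        raise ValueError("Glacier transition must come after the Standard-IA transition")
    return {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_days, "StorageClass": "STANDARD_IA"},
            {"Days": glacier_days, "StorageClass": "GLACIER"},
        ],
    }


lifecycle_configuration = {"Rules": [tiering_rule("tier-cold-data", "archive/", 30, 90)]}
print(json.dumps(lifecycle_configuration, indent=2))
```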

The other options are incorrect:

Configure Lifecycle rule with enabled tiering for AWS S3 and replication for Objects instance: Replication is not a primary function of lifecycle rules in Nutanix Data Lens for Nutanix Objects. Replication is typically handled by other mechanisms, such as Objects bucket replication, not lifecycle rules.

Configure Lifecycle rule with enabled replication for AWS S3 and tiering for Objects instance: Lifecycle rules in Data Lens focus on tiering, not replication, for Nutanix Objects. AWS S3 replication is a separate feature that is not managed through Data Lens.

Configure Lifecycle rules with enabled replication for AWS S3 and Objects instance: Lifecycle rules in Data Lens do not support replication for either AWS S3 or Nutanix Objects; they are designed for tiering.

The NUSA course documentation notes that “Nutanix Data Lens lifecycle rules enable tiering to AWS S3 and other cloud storage, ensuring data is stored cost-effectively while remaining accessible, making tiering the primary mechanism for lifecycle management.”

An administrator has determined that adding File Server VMs to the cluster will provide more resources.

What must the administrator validate so that the new File Server VMs can be added?

Ensure network ports are available.

Sufficient nodes in the cluster is greater than current number of FSVMs.

Sufficient storage container space is available to host the volume groups.

Ensure Files Analytics is installed.

Comprehensive and Detailed Explanation from Nutanix Unified Storage (NCP-US) and Nutanix Unified Storage Administration (NUSA) course documents:

In the context of expanding Nutanix Files (which is the file services capability of Nutanix Unified Storage), adding File Server VMs (FSVMs) to the cluster allows the file service to scale out and provide more resources for file services workloads, including performance and capacity improvements.

The Nutanix Files architecture involves deploying FSVMs that are distributed across the cluster nodes. Each FSVM handles file protocol operations and interacts with the underlying Nutanix Distributed Storage Fabric (DSF).

Here’s what’s critical when adding new FSVMs:

Sufficient Cluster Nodes Requirement: The Nutanix Unified Storage Administration (NUSA) course emphasizes that the number of FSVMs cannot exceed the number of physical nodes in the cluster. This is because each FSVM is deployed as a VM on a physical node, and Nutanix best practices require that FSVMs be spread out evenly across available nodes for performance, load balancing, and resiliency. Therefore, you must ensure:

“The number of nodes in the cluster must be greater than or equal to the number of FSVMs you plan to deploy.”

This ensures that FSVMs are properly balanced and have the physical resources they need for optimal operation.
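The node-count rule quoted above can be turned into a pre-check before scaling out. This is a minimal sketch that encodes only that rule (nodes must be greater than or equal to FSVMs); the function name is hypothetical, and a real deployment may enforce further limits not modelled here.

```python
def validate_fsvm_scale_out(cluster_nodes: int, current_fsvms: int, fsvms_to_add: int) -> int:
    """Return the planned FSVM count, or raise ValueError if it breaks the rule.

    Encodes the rule quoted above: the number of cluster nodes must be
    greater than or equal to the number of FSVMs, so each FSVM can sit on
    its own node.
    """
    if fsvms_to_add < 1:
        raise ValueError("fsvms_to_add must be at least 1")
    planned = current_fsvms + fsvms_to_add
    if planned > cluster_nodes:
        raise ValueError(
            f"{planned} FSVMs planned, but the cluster has only {cluster_nodes} nodes"
        )
    return planned


print(validate_fsvm_scale_out(cluster_nodes=6, current_fsvms=4, fsvms_to_add=2))  # 6
```

For example, a four-node cluster already running four FSVMs fails this check for any further FSVM, which matches the answer above.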

Network Ports: While ensuring that appropriate network ports are configured is important for the operation of Nutanix Files (including communication with clients via SMB/NFS and integration with Prism), it is not the gating factor for adding new FSVMs. The critical factor is the available cluster nodes.

Storage Container Space: Storage container space is also essential for file data storage, but this is not a direct requirement when simply adding FSVMs. FSVMs use the existing DSF storage, and as long as there is available storage capacity overall, adding FSVMs does not require validating specific volume group space.

File Analytics: File Analytics is an optional feature that provides advanced analytics for file shares, such as usage patterns and security insights. It is not required to add new FSVMs.

Design Best Practices: In the NUSA course, administrators are taught to always validate the number of cluster nodes first before deploying additional FSVMs. This ensures that the cluster can accommodate the new FSVMs without causing resource contention or violating best practice guidelines for balanced and resilient file server deployments.

Resilience and High Availability: Because FSVMs are distributed across the physical cluster nodes, having more nodes than FSVMs ensures that if a node fails, the FSVMs can fail over to other available nodes. This helps maintain the high availability of file services.

In summary, while other factors like network ports, container space, and analytics capabilities play roles in the broader operation and management of Nutanix Files, the absolute requirement for adding FSVMs is ensuring that there are enough cluster nodes to host them. This ensures compliance with design best practices for scalability and resilience, as emphasized in the official Nutanix training courses.

Question:

Which two URLs must Prism Central have access to, in an online deployment, for a Nutanix Objects server? (Choose two.)

download.nutanix.com

portal.nutanix.com

kubernetes.io

docker.io

In the Nutanix Unified Storage architecture, Nutanix Objects is a service that leverages container-based deployment for its microservices architecture. When deploying Objects in online mode, Prism Central (which orchestrates the deployment) needs to download the container images and additional software artifacts directly from Nutanix and trusted external registries.

download.nutanix.com: This is Nutanix’s primary repository for all official Nutanix software artifacts, including Objects installation packages and associated dependencies. In the official NUSA deployment module, it states:

“Prism Central must be able to reach download.nutanix.com to retrieve Objects binary packages and installation files. This ensures that Objects components are properly deployed and integrated into the cluster environment.”

docker.io: Nutanix Objects uses containerized microservices (e.g., object metadata, S3 gateway) that are packaged as Docker images. The deployment process pulls these images directly from docker.io, which is the default container registry for Docker images. The NUSA course explicitly mentions:

“During the Objects deployment, container images are pulled from docker.io. Prism Central must have connectivity to docker.io to ensure all components of Objects are downloaded and deployed successfully.”

portal.nutanix.com and kubernetes.io:

portal.nutanix.com is used for documentation and support but is not needed for direct deployment of Objects.

kubernetes.io is also not required: it is the Kubernetes project’s documentation website rather than a source of deployment artifacts, and the container platform that Objects runs on is supplied by Nutanix rather than downloaded from it.

Thus, for an online Objects deployment, the mandatory external dependencies are:

download.nutanix.com

docker.io
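Before an online deployment, reachability of the two endpoints above can be spot-checked from a host on the same network as Prism Central. This is a minimal sketch using plain TCP connects; the assumption that both endpoints are reached on port 443 (HTTPS) is ours, and a successful connect does not prove that a proxy or TLS inspection will let the actual downloads through.

```python
import socket

# Endpoints named in the explanation above; port 443 is an assumption.
REQUIRED_ENDPOINTS = (("download.nutanix.com", 443), ("docker.io", 443))


def is_reachable(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers DNS failures, refusals, and timeouts
        return False


def unreachable_endpoints(endpoints=REQUIRED_ENDPOINTS, timeout: float = 5.0) -> list:
    """List the (host, port) pairs that cannot be reached from this host."""
    return [(h, p) for h, p in endpoints if not is_reachable(h, p, timeout)]
```

Calling `unreachable_endpoints()` returns an empty list when both endpoints accept connections; any entries it returns point to firewall or DNS rules to review.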

Question:

Which statement is true regarding Self-Service Restore?

Supports 15 minute snapshots.

Does not require NGT on the VM.

Supports Windows and Linux.

Supported with a Starter license.

Self-Service Restore (SSR) in Nutanix Files is a feature that allows end users or administrators to restore previous file versions directly from share snapshots without requiring direct administrator intervention.

Key details from the NUSA training:

Does not require Nutanix Guest Tools (NGT):

“SSR operates entirely at the file server share level, leveraging share snapshots created by the Nutanix Files service. It does not depend on NGT or VM-level backups, which simplifies deployment and reduces dependencies.”

15-minute snapshots:

“While Nutanix supports snapshot intervals down to 1 hour, the minimum interval is not typically 15 minutes for standard file share snapshots.”

Windows and Linux:

“SSR is primarily supported for Windows SMB shares. NFS/Linux-based shares do not integrate with SSR in the same manner.”

Starter license support:

“SSR is part of advanced Nutanix Files functionality not included in the Starter license tier.”

Thus, the definitive statement is: Does not require NGT on the VM.

When hardening the network for Nutanix Objects, which is the only network endpoint that should be exposed to users?

S3

virbr0

eth0

OOB

When hardening the network for Nutanix Objects, the **S3 endpoint** is the only network endpoint that should be exposed to users. Nutanix Objects is an object storage solution that provides an S3-compatible API for accessing and managing objects. The S3 endpoint is the designated interface through which users and applications interact with Nutanix Objects, typically over HTTPS to ensure secure data transfer.

According to the **Nutanix Unified Storage Administration (NUSA)** course, network hardening for Nutanix Objects involves restricting access to only the necessary endpoints to minimize the attack surface. The S3 endpoint, which operates over port 443 (HTTPS) or port 80 (HTTP, though HTTPS is recommended for security), is the primary entry point for client interactions. Exposing only this endpoint ensures that users can access object storage services while other internal or management interfaces remain protected.

The **Nutanix Certified Professional - Unified Storage (NCP-US)** study guide emphasizes that Nutanix Objects is designed to segregate user-facing traffic from internal system traffic. The S3 endpoint is configured during the deployment of Nutanix Objects and is associated with a virtual IP address (VIP) or DNS name that resolves to the object store. To harden the network, administrators should configure firewalls and network security groups to allow traffic only to the S3 endpoint, blocking access to other interfaces such as management or internal network endpoints.

The other options are not suitable for user exposure:

- **virbr0**: This is a virtual bridge interface typically used for internal virtualization networking (e.g., in KVM-based environments). It is not a user-facing endpoint and should not be exposed, as it is used for internal communication between virtual machines or services.

- **eth0**: This refers to a physical Ethernet interface on a node, which may carry various types of traffic (e.g., storage, management, or VM traffic). Exposing eth0 directly to users would compromise security by allowing access to internal system communications.

- **OOB (Out-of-Band)**: This refers to out-of-band management interfaces, such as IPMI or iLO, used for hardware management. These are strictly for administrative purposes and must remain isolated from user access to prevent unauthorized control of the infrastructure.

The NUSA course documentation specifically notes that “Nutanix Objects network hardening requires exposing only the S3 endpoint to external users, typically through a load-balanced VIP, while ensuring all other interfaces, such as management or internal cluster networks, are isolated.” This is achieved by configuring network segmentation, firewalls, and access control lists (ACLs) to restrict traffic to the S3 endpoint.
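The default-deny posture described above can be sketched as an allowlist evaluator in which the only user-facing rule targets the S3 endpoint. This is a minimal model, not a firewall configuration: the addresses are hypothetical RFC 5737 documentation ranges standing in for the S3 VIP and the user subnet, and only HTTPS is allowed.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class AllowRule:
    src: str    # source CIDR allowed to connect
    dst: str    # destination address or CIDR
    proto: str  # "tcp" or "udp"
    port: int


# Hypothetical addresses: 192.0.2.10 stands in for the object store's S3
# endpoint VIP, 198.51.100.0/24 for the user subnet.
S3_VIP = "192.0.2.10"
USER_FACING_RULES = [
    AllowRule("198.51.100.0/24", S3_VIP, "tcp", 443),  # S3 over HTTPS only
]


def is_permitted(rules, src: str, dst: str, proto: str, port: int) -> bool:
    """Default-deny: traffic passes only if some allow rule matches it exactly."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    return any(
        s in ipaddress.ip_network(r.src)
        and d in ipaddress.ip_network(r.dst)
        and proto == r.proto
        and port == r.port
        for r in rules
    )
```

Any management, internal, or out-of-band interface is blocked simply because no rule names it, which is the point of exposing only the S3 endpoint.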

Question:

What is the most likely cause no Prism Element clusters are listed when trying to create a new object store?

The administrator did not manually sync Prism Element to Prism Central after registration.

Prism Element cluster CVMs must be restarted after registration.

Although Prism Element is registered, object stores cannot be added via Prism Central.

Prism Element has not yet completed synchronization with Prism Central.

When creating a new Nutanix Objects instance via Prism Central, Prism Central must have completed synchronization with the Prism Element cluster(s). This synchronization ensures that:

Cluster details and resources (such as available storage, network configurations, and capacity) are properly displayed in Prism Central.

Prism Central can create and manage Object Stores based on accurate data from the registered cluster.

From the NUSA deployment module:

“After registering Prism Element clusters in Prism Central, there is an initial synchronization process that must complete before clusters appear in workflows such as object store creation.”

The other options:

Manual sync is not required; Prism Central automatically synchronizes after registration.

CVM restarts are not part of the normal registration or synchronization process.

Object stores can indeed be added via Prism Central once the sync is complete.

Therefore, the administrator should wait for Prism Central and Prism Element to finish synchronizing before proceeding.

An administrator has been asked to upgrade an S3-compatible bucket on-premise using Prism Central. Prior to running the LCM upgrade, the administrator validated the following configurations for this task:

Inbound traffic allowlist:

Controller Virtual Machine (CVM) IP addresses (including secondary/segmented)

Prism Central IP address

Pod network range, usually 10.100.0.0/16

All IP addresses, such as 0.0.0.0/0 for ports TCP 80 and 443

Outbound traffic allowlist:

CVM IP addresses (including secondary/segmented)

Prism Central IP address

Pod network range, usually 10.100.0.0/16

All IP addresses such as 0.0.0.0/0 for ports TCP 53, 7100, 5553 and UDP 53, 123

After the validation, the LCM upgrade was launched but did not complete. After contacting the security team, the administrator is informed that security has been enforced on the outbound traffic to the Internet as well. The administrator has been asked to provide a list of components that require Internet access. What should the administrator provide to the security team for a successful upgrade?

Pod network

Prism Central IP address/port

LCM

CVM IP address/port

The scenario involves upgrading an S3-compatible bucket (Nutanix Objects) using Prism Central’s Lifecycle Manager (LCM). The LCM upgrade process failed because outbound traffic to the Internet was restricted, and the administrator needs to identify which components require Internet access for a successful upgrade. The correct answer is that the CVM IP address/port must be provided to the security team, as the Controller Virtual Machines (CVMs) are responsible for downloading upgrade artifacts from Nutanix’s external repositories during an LCM upgrade.

The Nutanix Unified Storage Administration (NUSA) course explains that “LCM upgrades require the CVMs to access external Nutanix repositories to download software and firmware updates, typically over HTTPS (port 443).” The CVMs initiate the download of upgrade packages for components like Nutanix Objects, which includes the S3-compatible bucket infrastructure. The course further notes that “outbound traffic from CVM IP addresses to the Internet must be allowed on ports such as TCP 80 (HTTP) and TCP 443 (HTTPS) for LCM to successfully retrieve upgrade files.”

The Nutanix Certified Professional - Unified Storage (NCP-US) study guide elaborates that “during an LCM upgrade, CVMs communicate with external Nutanix servers (e.g., download.nutanix.com) to fetch upgrade bundles, requiring outbound Internet access from the CVM IP addresses on TCP ports 80 and 443.” Additionally, DNS resolution (TCP/UDP port 53) is needed to resolve the external repository URLs, and NTP (UDP port 123) ensures time synchronization, both of which are already allowed in the outbound traffic configuration provided. However, the failure suggests that the specific outbound traffic from CVMs to the Internet on port 443 (or 80) was blocked, preventing the download of the Nutanix Objects upgrade package.

The other options are incorrect:

Pod network: The pod network (e.g., 10.100.0.0/16) is typically an internal network range for containerized workloads and does not require Internet access for LCM upgrades.

Prism Central IP address/port: While Prism Central orchestrates the LCM upgrade, it is the CVMs that perform the actual download of upgrade artifacts from the Internet. Prism Central communicates with CVMs internally and does not directly access the Internet for this task.

LCM: LCM is a software component, not a network entity with an IP address or port. It runs on Prism Central and relies on CVMs to fetch the necessary files.

To resolve the issue, the administrator should provide the security team with the CVM IP addresses and ensure that outbound traffic to the Internet is allowed on TCP port 443 (HTTPS) and optionally TCP port 80 (HTTP) for accessing Nutanix’s external repositories (e.g., download.nutanix.com). The NUSA course documentation emphasizes that “ensuring CVMs have Internet access on the required ports is critical for LCM upgrades to complete successfully, especially for components like Nutanix Objects.”
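The gap in the outbound allowlist can be made concrete by evaluating it against an HTTPS download. This minimal sketch models the rules from the question by destination only; the CVM subnet and Prism Central address are hypothetical placeholders, and 203.0.113.20 is a documentation address standing in for an external repository such as download.nutanix.com.

```python
import ipaddress

# Outbound allowlist from the scenario as (destination CIDR, protocol, ports);
# ports=None means any port. CVM subnet and Prism Central IP are placeholders.
OUTBOUND_RULES = [
    ("10.0.0.0/24", "any", None),      # CVM IPs (hypothetical placeholder)
    ("10.0.1.50/32", "any", None),     # Prism Central IP (hypothetical placeholder)
    ("10.100.0.0/16", "any", None),    # pod network range
    ("0.0.0.0/0", "tcp", {53, 7100, 5553}),
    ("0.0.0.0/0", "udp", {53, 123}),
]


def outbound_allowed(rules, dst: str, proto: str, port: int) -> bool:
    """Return True if any rule allows traffic to dst on proto/port."""
    addr = ipaddress.ip_address(dst)
    for cidr, rule_proto, ports in rules:
        if addr not in ipaddress.ip_network(cidr):
            continue
        if rule_proto not in ("any", proto):
            continue
        if ports is None or port in ports:
            return True
    return False


REPO = "203.0.113.20"  # hypothetical stand-in for an external Nutanix repository
print(outbound_allowed(OUTBOUND_RULES, REPO, "tcp", 443))  # False: HTTPS download blocked
fixed = OUTBOUND_RULES + [("0.0.0.0/0", "tcp", {80, 443})]
print(outbound_allowed(fixed, REPO, "tcp", 443))  # True
```

DNS (UDP 53) to the same address already passes, which is consistent with the explanation that name resolution works while the download itself is blocked.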

Which term describes Nutanix Files blocking access to a file until its file state is manually changed?

Unquarantined

Quarantined

Cleaned

Deleted

In Nutanix Files, there is a built-in feature called File Quarantine. When certain suspicious or malicious activity is detected (often through integrations with file scanning tools or security alerts), the file is quarantined. In a quarantined state, access to the file is blocked until an administrator manually reviews it and decides to either unquarantine or delete the file.

The NCP-US and NUSA courses highlight this term as follows:

“Files that are detected to have potential issues or threats are placed in a quarantined state by Nutanix Files. This quarantined state restricts user access to ensure security and requires manual administrative action to restore access.”

Thus, the correct term is Quarantined.
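The behaviour described above amounts to a small state machine in which only a manual administrator action moves a file out of the quarantined state. This is a minimal sketch: the state names follow the answer options, but the transition table is an illustrative assumption rather than Nutanix’s implementation, and "Cleaned" is not modelled.

```python
from enum import Enum


class FileState(Enum):
    # Illustrative states; the transition table below is an assumption.
    NORMAL = "normal"
    QUARANTINED = "quarantined"
    UNQUARANTINED = "unquarantined"
    DELETED = "deleted"


# Only these manual administrator actions move a file out of QUARANTINED.
ADMIN_TRANSITIONS = {
    FileState.QUARANTINED: {
        "unquarantine": FileState.UNQUARANTINED,
        "delete": FileState.DELETED,
    },
}


def user_can_open(state: FileState) -> bool:
    """Users are blocked while a file is quarantined (or gone)."""
    return state in (FileState.NORMAL, FileState.UNQUARANTINED)


def admin_action(state: FileState, action: str) -> FileState:
    """Apply a manual administrator action, rejecting invalid ones."""
    try:
        return ADMIN_TRANSITIONS[state][action]
    except KeyError:
        raise ValueError(f"action {action!r} not valid in state {state.name}") from None
```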

Question:

An administrator needs to move infrequently accessed data to lower-cost storage based on file type and owner, and automatically recall data if data access frequency has increased.

What should the administrator do to satisfy these requirements?

Configure Advanced tiering in Data Lens.

Create a Lifecycle Rule in Objects Buckets tab.

Create an SSR-enabled share in Files.

Configure Smart tiering in Files.

Smart Tiering in Nutanix Files is a built-in feature that allows administrators to automatically move infrequently accessed data (cold data) to lower-cost storage tiers (like NFS or S3-compatible storage). It also supports automatically recalling data if it becomes hot (frequently accessed) again.

According to NUSA course details:

“Smart Tiering policies in Nutanix Files allow administrators to define rules based on file metadata (type, size, owner) and last access time. Cold data is tiered off to cheaper storage, and Files can recall the data if needed, ensuring transparent access for users.”

Key reasons why Smart Tiering is the solution:

Automatically identifies cold data (based on access patterns).

Moves cold data to external or cheaper storage transparently.

Re-hydrates data automatically if it becomes hot again, maintaining performance and user experience.
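The tier-and-recall decision described above can be sketched as a policy over file type, owner, last access time, and recent access count. This is a minimal model under stated assumptions: the field names, the 90-day cold threshold, and the recall threshold are hypothetical and do not reflect the actual Smart Tiering policy schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FileInfo:
    path: str
    owner: str
    last_access: datetime
    recent_accesses: int  # accesses observed within the recall window
    tiered: bool = False


@dataclass
class TieringPolicy:
    # Hypothetical policy fields mirroring the criteria in the quote above.
    extensions: tuple       # lowercase suffixes, e.g. (".iso", ".mp4")
    owners: tuple
    cold_after: timedelta
    recall_threshold: int

    def should_tier(self, f: FileInfo, now: datetime) -> bool:
        """Tier a local file that matches type and owner and has gone cold."""
        return (
            not f.tiered
            and f.path.lower().endswith(self.extensions)
            and f.owner in self.owners
            and now - f.last_access >= self.cold_after
        )

    def should_recall(self, f: FileInfo) -> bool:
        """Recall a tiered file once its access frequency rises again."""
        return f.tiered and f.recent_accesses >= self.recall_threshold
```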

The other options:

Advanced tiering in Data Lens: Data Lens is for analytics and reporting, not for moving data.

Lifecycle Rules in Objects: these manage the data lifecycle for object buckets, not Files shares.

SSR (Self-Service Restore): used for file recovery, not data tiering.

Thus, the administrator should configure Smart Tiering in Nutanix Files to satisfy the requirement.

An administrator is tasked with migrating physical SQL workloads from a legacy SAN platform to a newly-deployed Nutanix environment. The current physical hosts boot from SAN. The Nutanix environment has plenty of storage resources available. Which action can the administrator take to complete this task?

Boot over iSCSI using Nutanix Volumes

Boot using PXE protocol with Nutanix Files

Boot using the NFS protocol with Nutanix Files

Boot using Nutanix Object stores

To migrate physical SQL workloads from a legacy SAN platform where hosts boot from SAN to a Nutanix environment, the administrator can use Nutanix Volumes to enable booting over iSCSI. Nutanix Volumes is a block storage solution that provides iSCSI-based storage, allowing external hosts (such as physical servers) to access Nutanix storage as block devices. This is ideal for replacing SAN-based boot volumes, as it supports the same iSCSI protocol used in traditional SAN environments.

According to the Nutanix Unified Storage Administration (NUSA) course, Nutanix Volumes enables external hosts to connect to Nutanix storage via iSCSI, which can be used for boot volumes or data volumes. The course emphasizes that “Nutanix Volumes supports iSCSI boot for physical servers, making it a suitable solution for migrating workloads from legacy SAN environments to Nutanix.” This allows the administrator to configure the physical SQL servers to boot from iSCSI targets provisioned on Nutanix Volumes, leveraging the Nutanix cluster’s storage resources.

The Nutanix Certified Professional - Unified Storage (NCP-US) study guide further details that Nutanix Volumes can be configured to present iSCSI LUNs to physical hosts, which can be used for both operating system boot and application data storage. For the SQL workloads, the administrator can create iSCSI targets on Nutanix Volumes, configure the physical hosts’ iSCSI initiators to connect to these targets, and migrate the boot and data volumes from the legacy SAN to Nutanix.
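The initiator-side step above (connecting a host’s iSCSI initiator to the Nutanix targets) can be illustrated for a Linux host running open-iscsi. This sketch only builds the standard `iscsiadm` discovery and login command lines; the DSIP address and target IQN are hypothetical placeholders, and the boot-from-iSCSI path itself is configured in the server firmware or HBA, which this sketch does not cover.

```python
import shlex


def iscsi_login_commands(dsip: str, target_iqn: str, port: int = 3260) -> list:
    """Build open-iscsi commands to discover and log in to a target via the DSIP."""
    portal = f"{dsip}:{port}"
    return [
        # SendTargets discovery against the cluster's Data Services IP
        ["iscsiadm", "-m", "discovery", "-t", "sendtargets", "-p", portal],
        # Log in to one discovered target
        ["iscsiadm", "-m", "node", "-T", target_iqn, "-p", portal, "--login"],
    ]


# Hypothetical DSIP and IQN, for illustration only.
for cmd in iscsi_login_commands("192.0.2.50", "iqn.2010-06.com.nutanix:sql-data-vg"):
    print(shlex.join(cmd))
```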

The other options are not suitable for this task:

Boot using PXE protocol with Nutanix Files: Nutanix Files is a file storage solution that supports SMB and NFS protocols, not PXE (Preboot Execution Environment) booting. PXE is typically used for network-based OS installation, not for booting SQL workloads or replacing SAN-based boot volumes.

Boot using the NFS protocol with Nutanix Files: Nutanix Files supports NFS for file sharing, but NFS is not designed for booting physical servers. It is used for file-level access, not block-level access required for boot volumes.

Boot using Nutanix Object stores: Nutanix Objects is an object storage solution designed for S3-compatible APIs, not for block or file-based booting. It is unsuitable for hosting bootable volumes or SQL workloads.

The NUSA course documentation highlights that “Nutanix Volumes provides a seamless migration path for SAN-based workloads, including boot-from-SAN configurations, by leveraging iSCSI to present storage to physical hosts.” This makes it the only viable option for the described migration task.

An administrator has been tasked with troubleshooting a storage performance problem for a large database VM with the following configuration:

16 vCPU

64 GB RAM

One 50 GB native AHV virtual disk hosting the guest OS

Six 500 GB virtual disks containing database files connecting via iSCSI to a Nutanix volume group

One NIC for client connectivity

One NIC for iSCSI connectivity

In the course of investigating the problem, the administrator determines that the issue is isolated to large block-size I/O operations. What step should the administrator take to improve performance for the VM?

Add an additional NIC for iSCSI connectivity and enable MPIO

Add additional virtual disks to the volume group

Increase the iSCSI adapter maximum transfer length

Locate the iSCSI NIC on the same VLAN as the cluster DSIP

The performance issue for the database VM is related to large block-size I/O operations over iSCSI, which connects to a Nutanix volume group. The VM has a dedicated NIC for iSCSI traffic, but a single NIC can become a bottleneck for large I/O operations, especially for a high-performance workload like a database. To improve performance, the administrator should add an additional NIC for iSCSI connectivity and enable MPIO (Multipath I/O). This approach allows the VM to use multiple network paths for iSCSI traffic, increasing throughput and reducing latency for large block-size I/O operations.

The Nutanix Unified Storage Administration (NUSA) course states, “For high-performance workloads using Nutanix Volumes over iSCSI, enabling MPIO with multiple NICs on the VM can significantly improve I/O performance, especially for large block-size operations.” MPIO allows the VM to establish multiple iSCSI sessions to the Nutanix volume group, distributing I/O traffic across the available NICs and Controller Virtual Machines (CVMs) in the cluster. This is particularly effective for database workloads, which often involve large sequential I/O operations.

The Nutanix Certified Professional - Unified Storage (NCP-US) study guide further elaborates that “adding a second NIC for iSCSI traffic and configuring MPIO ensures load balancing and failover for iSCSI sessions, optimizing performance for VMs with high I/O demands, such as databases.” By adding another NIC, the VM can establish additional iSCSI paths through the cluster’s iSCSI Data Services IP (DSIP), leveraging the cluster’s distributed architecture to handle large block-size I/O more efficiently.
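The load-spreading effect of MPIO can be illustrated with a round-robin path policy, one of several policies an MPIO stack may offer. This is a minimal sketch: the path names are hypothetical, and real multipathing also handles path failure and weighting, which are not modelled here.

```python
from itertools import cycle


def round_robin(ios, paths):
    """Assign each I/O to the next path in turn, as a round-robin MPIO policy would."""
    if not paths:
        raise ValueError("at least one path is required")
    assignment = {p: [] for p in paths}
    for io, path in zip(ios, cycle(paths)):
        assignment[path].append(io)
    return assignment


def bytes_per_path(assignment, io_size: int) -> dict:
    """Total bytes carried by each path for fixed-size I/Os."""
    return {p: len(ios) * io_size for p, ios in assignment.items()}


# Eight 1 MiB I/Os over two hypothetical iSCSI NICs: each carries 4 MiB
# instead of one NIC carrying all 8 MiB.
spread = round_robin(list(range(8)), ["iscsi-nic1", "iscsi-nic2"])
print(bytes_per_path(spread, 1 << 20))  # {'iscsi-nic1': 4194304, 'iscsi-nic2': 4194304}
```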

The other options are incorrect:

Add additional virtual disks to the volume group: Adding more virtual disks does not address the network bottleneck caused by a single iSCSI NIC and may not improve performance for large block-size I/O operations.

Increase the iSCSI adapter maximum transfer length: The maximum transfer length is the largest amount of data the initiator moves in a single SCSI command; it is not the network MTU. Raising it lets large I/Os be issued in fewer, larger requests, but it does not address the fundamental issue of a single NIC being a bottleneck for large I/O operations. MPIO with multiple NICs is a more effective solution.

Locate the iSCSI NIC on the same VLAN as the cluster DSIP: While placing the iSCSI NIC on the same VLAN as the DSIP can reduce latency by avoiding inter-VLAN routing, the primary issue here is the single NIC bottleneck, not VLAN configuration. MPIO with multiple NICs provides a better performance improvement.

The NUSA course documentation emphasizes that “for VMs with large block-size I/O requirements, such as databases, using MPIO with multiple iSCSI NICs ensures optimal performance by distributing traffic across multiple paths to the Nutanix volume group.”

Question:

An administrator is deploying File Analytics. The following subnets are available:

CVM subnet: 10.1.1.0/24

AHV subnet: 10.1.2.0/24

Nutanix Files client network: 10.1.3.0/24

Nutanix Files storage network: 10.1.4.0/24

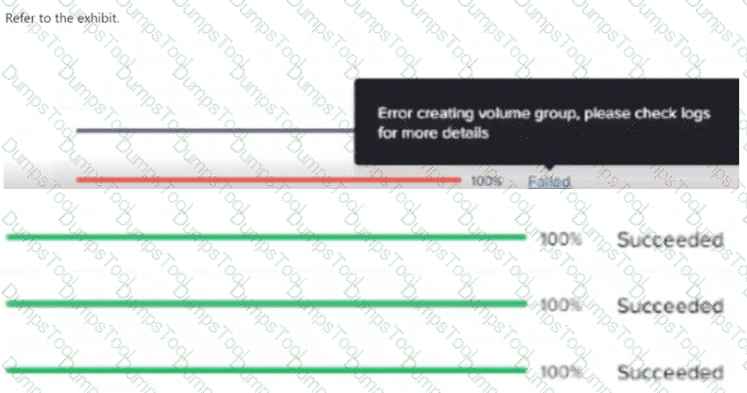

The administrator has reserved 10.1.4.100 as the File Analytics IP. However, the deployment fails with the error shown:

“Error creating volume group, please check logs for more details.”

What action must the administrator take to successfully deploy File Analytics?

Allow port 445 in the firewall.

Re-deploy File Analytics on the Files storage network.

Re-deploy File Analytics on the Files client network.

Allow port 139 in the firewall.

According to the NUSA course materials, File Analytics is designed to be deployed on the Nutanix Files client network because:

File Analytics accesses file share metadata and analytics data through the same SMB/NFS protocols used by clients accessing the shares.

Using the client network ensures that File Analytics can connect to the SMB/NFS endpoints, collect activity logs, and provide visibility without relying on the storage-only network.

Using the storage network (as was done here with IP 10.1.4.100) leads to deployment errors because:

“The storage network in Nutanix Files is used exclusively for data replication and cluster-level operations—not for client or analytics traffic. Using this network for File Analytics deployment causes communication failures.”

Thus, the administrator must redeploy File Analytics on the Files client network (10.1.3.0/24) to ensure proper access and connectivity.

The firewall ports (445 and 139) are relevant to SMB traffic but are not the root cause of this deployment error.
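A quick way to confirm which network a candidate IP belongs to is Python's standard ipaddress module. The sketch below uses the four subnets from the question. The replacement address 10.1.3.100 is a hypothetical example, not a value from the scenario.

```python
import ipaddress

# Subnets listed in the question.
SUBNETS = {
    "CVM": ipaddress.ip_network("10.1.1.0/24"),
    "AHV": ipaddress.ip_network("10.1.2.0/24"),
    "Files client": ipaddress.ip_network("10.1.3.0/24"),
    "Files storage": ipaddress.ip_network("10.1.4.0/24"),
}


def network_for(ip):
    """Return the name of the subnet containing ip, or None if none matches."""
    addr = ipaddress.ip_address(ip)
    for name, net in SUBNETS.items():
        if addr in net:
            return name
    return None


if __name__ == "__main__":
    print(network_for("10.1.4.100"))  # the reserved File Analytics IP
    print(network_for("10.1.3.100"))  # hypothetical address on the client network
```

The reserved address falls in the Files storage network, which is why the explanation calls for a client-network address instead.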

What is a requirement for Smart DR?

Primary and recovery file servers must have different domain names.

File servers may have different FSVM numbers at the primary and recovery sites.

Primary and recovery file servers must support the same protocols.

The Files Manager must have only one file server.

A requirement for Smart DR (Disaster Recovery) in Nutanix Files is that primary and recovery file servers must support the same protocols. Smart DR is a feature that enables automated disaster recovery for Nutanix Files by replicating file shares between a primary site and a recovery site, ensuring business continuity in case of a failure.

According to the Nutanix Unified Storage Administration (NUSA) course, “Smart DR requires that the primary and recovery file servers support the same file-sharing protocols (e.g., SMB, NFS) to ensure seamless failover and consistent client access.” This ensures that clients can access the same shares with the same protocol after a failover, maintaining application compatibility and user experience.

The Nutanix Certified Professional - Unified Storage (NCP-US) study guide further states that “Smart DR configurations mandate that the primary and recovery file servers are configured with identical protocol support to enable consistent replication and recovery of file shares.” For example, if the primary file server uses SMB for Windows clients, the recovery file server must also support SMB.

The other options are incorrect:

Primary and recovery file servers must have different domain names: Smart DR does not require different domain names. In fact, using the same domain name can simplify AD integration and client access during failover.

File servers may have different FSVM numbers at the primary and recovery sites: This option describes a permitted flexibility, not a requirement. The number of FSVMs can be the same or different depending on site resources, but nothing mandates either.

The Files Manager must have only one file server: Nutanix Files Manager can manage multiple file servers, and Smart DR does not restrict the environment to a single file server.

The NUSA course documentation highlights that “Smart DR ensures protocol consistency between primary and recovery sites to support seamless failover, making protocol support a critical requirement for configuration.”
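The protocol requirement can be expressed as a simple pre-check. This is a minimal Python sketch of a hypothetical validation step an administrator might script before configuring Smart DR; it is not a Nutanix API, and the protocol lists are supplied by hand.

```python
def normalize(protocols):
    """Upper-case and de-duplicate protocol names such as 'smb' or 'NFS'."""
    return {p.strip().upper() for p in protocols}


def missing_on_recovery(primary, recovery):
    """Protocols enabled on the primary file server but not on the recovery one."""
    return sorted(normalize(primary) - normalize(recovery))


def protocols_match(primary, recovery):
    """True when both file servers expose exactly the same protocols."""
    return normalize(primary) == normalize(recovery)


if __name__ == "__main__":
    print(protocols_match(["SMB", "NFS"], ["nfs", "smb"]))
    print(missing_on_recovery(["SMB", "NFS"], ["SMB"]))
```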

Which Nutanix Objects metric provides the total input requests per second of a bucket?

Puts

Throughput

Gets

NFS Reads

In Nutanix Objects metrics:

Puts: Measures PUT requests per second (object uploads), representing input operations.

Gets (Option C): Measures output (download) requests.

Throughput (Option B): Reports bandwidth (MB/s), not request rate.

NFS Reads (Option D): Specific to NFS access, not general bucket input.
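The difference between a request-rate metric (Puts) and a bandwidth metric (Throughput) can be shown with a small Python calculation over two samples of cumulative counters. The counter values are hypothetical, Nutanix Objects computes its own metrics internally, and 1 MB is taken as 10**6 bytes for this sketch.

```python
def rate_per_second(counter_start, counter_end, interval_seconds):
    """Average rate of a monotonically increasing counter over an interval."""
    if interval_seconds <= 0:
        raise ValueError("interval must be positive")
    if counter_end < counter_start:
        raise ValueError("counter decreased; was it reset?")
    return (counter_end - counter_start) / interval_seconds


# Hypothetical cumulative counters sampled 60 seconds apart.
put_requests_per_second = rate_per_second(12_000, 18_000, 60)  # request rate ("Puts")
throughput_mb_per_second = rate_per_second(0, 600_000_000, 60) / 1e6  # bandwidth in MB/s
```

Here 6,000 PUT requests in 60 seconds is 100 requests per second, regardless of object size, while throughput depends on how many bytes those requests carried.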

What is the maximum number of object stores that can be deployed per AOS cluster?

4

8

16

32

A single AOS cluster supports a maximum of 4 Nutanix Object Stores. Each object store is an independent instance with dedicated resources.

An administrator wants to control the user visibility of SMB folders and files based on user permissions.

What feature should the administrator choose to accomplish this?

Access Based-Enumeration (ABE)

File Analytics

Files blocking

Role Based Access Control (RBAC)

Access-Based Enumeration (ABE) is a feature in Nutanix Files that controls whether users can see folders and files for which they do not have access permissions. When ABE is enabled:

Users will only see the folders/files they are authorized to access.

Items for which they have no permissions will be hidden from view.

The NUSA course describes this feature:

“Access Based-Enumeration (ABE) ensures that users browsing a share will only see folders and files that they have permission to access, improving security and minimizing confusion.”

Thus, ABE is the feature for controlling which SMB folders and files users can see, based on their permissions.
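The effect of ABE on a directory listing can be modeled in a few lines of Python. This is a simplified sketch: real ABE evaluates NTFS ACLs on the file server, whereas here each entry just carries a hypothetical set of principals allowed to read it, and the folder, user, and group names are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    """A folder or file plus the principals (users/groups) allowed to read it."""
    name: str
    readers: frozenset


def list_folder(entries, user, groups=(), abe_enabled=True):
    """Return the names a user sees when browsing a share.

    With ABE disabled every entry is listed, whether or not the user can open it.
    With ABE enabled, entries the user (or one of the user's groups) cannot read
    are hidden.
    """
    if not abe_enabled:
        return [e.name for e in entries]
    principals = {user, *groups}
    return [e.name for e in entries if e.readers & principals]


if __name__ == "__main__":
    share = [
        Entry("HR", frozenset({"HR-Staff"})),
        Entry("Finance", frozenset({"Finance-Staff"})),
        Entry("Public", frozenset({"Domain Users"})),
    ]
    groups = ("HR-Staff", "Domain Users")
    print(list_folder(share, "alice", groups))                     # ABE on
    print(list_folder(share, "alice", groups, abe_enabled=False))  # ABE off
```

With ABE enabled, the hypothetical user sees only HR and Public; with it disabled, Finance is also listed even though opening it would fail.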

After configuring Smart DR, an administrator observes that a policy in the Policies tab is not visible within Prism Central (PC).

What is the likely cause of this issue?

The share permissions include more than one local user.

The initial replication has not completed.

The administrator is logged into PC with a local account rather than an AD account.

Port 7515 is not opened between the source and recovery networks.

Smart DR requires port 7515 (TCP) for communication between source/target clusters and Prism Central. If blocked:

Policies fail to synchronize with PC.

Policies become "invisible" in the UI.

Other options are unrelated:

A: Share permissions don’t affect policy visibility.

B: Initial replication progress appears in UI even if incomplete.

C: AD/local login affects permissions, not policy discovery.
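Whether port 7515 is reachable can be checked from the command line or with a short Python helper built on the standard socket module. This sketch only tests TCP reachability from the host it runs on; it does not confirm that the Smart DR service itself is healthy.

```python
import socket

SMART_DR_PORT = 7515


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts (socket.timeout is an OSError
        # subclass), and name-resolution failures.
        return False
```

For example, tcp_port_open("recovery-fs.example.local", SMART_DR_PORT) run from a machine on the source network returns False if a firewall blocks the port; the hostname here is a placeholder.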

An administrator needs to recover a previous version of a file or share.

Which Nutanix Files feature should the administrator enable to allow this?

Snapshot

Data Protection

Self-Service Restore

Protection Policies

The Self-Service Restore (SSR) feature in Nutanix Files is designed to empower users and administrators to recover previous versions of files or entire shares. This feature leverages the snapshot capabilities of Nutanix Files to present earlier file versions directly to users for easy, self-directed recovery.

The NUSA and NCP-US materials highlight this:

“Self-Service Restore allows end users or administrators to restore individual files or folders from snapshots. This minimizes administrator intervention and improves recovery times for file-level incidents.”

While snapshots and protection policies underlie the mechanism, Self-Service Restore is the specific feature that enables user-initiated recovery of earlier versions.
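When restoring after an incident such as ransomware, the version to pick is normally the newest snapshot taken before the damage began. The Python sketch below shows that selection rule only; with SSR the user makes this choice in the Previous Versions list, and the snapshot schedule and incident time are hypothetical.

```python
from datetime import datetime


def latest_snapshot_before(snapshot_times, incident_time):
    """Return the newest snapshot taken strictly before incident_time, or None."""
    earlier = [t for t in snapshot_times if t < incident_time]
    return max(earlier) if earlier else None


if __name__ == "__main__":
    # Hypothetical hourly snapshot schedule.
    snapshots = [datetime(2024, 1, 5, hour) for hour in (8, 9, 10, 11)]
    print(latest_snapshot_before(snapshots, datetime(2024, 1, 5, 10, 30)))
```

If encryption started at 10:30, the 10:00 snapshot is the most recent clean version; the 11:00 snapshot may already contain encrypted files.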

Question:

What should be enabled for Windows clients when using the SMB protocol in a Nutanix Files deployment?

Zettabyte File System

Internet Information Services

Distributed File System

Automatic Windows Update

The SMB (Server Message Block) protocol is the foundation for file sharing in Windows environments. In a Nutanix Files deployment, enabling Distributed File System (DFS) on Windows clients enhances SMB functionality by:

Allowing namespace-based access to shares.

Providing client failover and load balancing when used with Nutanix Files SMB shares.

According to the NUSA course:

“For Windows clients accessing Nutanix Files via SMB, enabling Distributed File System (DFS) ensures they can dynamically discover and connect to the most optimal FSVM, even during failovers. DFS enhances resiliency and performance by maintaining a consistent namespace.”

The other options:

Zettabyte File System: not relevant to Windows or SMB.

Internet Information Services: a web server technology, not related to SMB shares.

Automatic Windows Update: not directly tied to SMB access.

Thus, enabling Distributed File System (DFS) on Windows clients ensures smooth SMB integration and high availability.

Question:

An administrator has been advised to access the Nutanix Files Server VM and ensure that passwords meet the organization's security policy for server access.

Which password complexity requirement does Nutanix Files support?

At least 2 lowercase letters

At least 8 characters difference

At least 8 characters long

At least 2 uppercase letters

When accessing Nutanix Files FSVMs (File Server VMs), Nutanix enforces password complexity policies to meet common security standards. According to the NCP-US and NUSA materials, the default minimum requirement for password complexity is:

“Passwords must be at least 8 characters long, supporting uppercase letters, lowercase letters, numbers, and special characters. However, the absolute minimum is an 8-character length.”

Even if the environment does not enforce uppercase/lowercase mixes or special characters, the absolute minimum length of 8 characters must be met. A minimum length helps guard against basic dictionary and brute-force attacks.

The other options (specific numbers of uppercase or lowercase letters, or a difference of 8 characters from the previous password) are not specifically required by Nutanix Files out of the box.
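A password can be checked against the 8-character minimum with a short Python function. Only the length check reflects the requirement stated above; the character-class fields are informational extras an organization might map to its own policy, not Nutanix Files rules.

```python
import string


def password_report(password, min_length=8):
    """Report the minimum-length check plus which character classes are present."""
    return {
        "long_enough": len(password) >= min_length,  # the requirement discussed above
        "has_lower": any(c in string.ascii_lowercase for c in password),
        "has_upper": any(c in string.ascii_uppercase for c in password),
        "has_digit": any(c in string.digits for c in password),
        "has_special": any(c in string.punctuation for c in password),
    }


if __name__ == "__main__":
    print(password_report("Nutan1x!"))
    print(password_report("short"))
```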

An administrator has configured a volume-group with four vDisks and needs them to be load-balanced across multiple CVMs. The volume-group will be directly connected to the VM. Which task must the administrator perform to meet this requirement?

Enable load-balancing for the volume-group using ncli

Select multiple initiator IQNs when creating the volume-group

Select multiple iSCSI adapters within the VM

Enable load-balancing for the volume-group using acli

To load-balance a volume-group with four vDisks across multiple Controller Virtual Machines (CVMs) for a VM using Nutanix Volumes, the administrator must enable load-balancing for the volume-group using acli. Nutanix Volumes supports iSCSI-based block storage, and load-balancing ensures that I/O traffic from the VM is distributed across multiple CVMs, improving performance and scalability. acli (the Acropolis Command-Line Interface) is the tool used to configure this setting for volume-groups.

The Nutanix Unified Storage Administration (NUSA) course states, “Nutanix Volumes supports load-balancing of iSCSI traffic across CVMs, which can be enabled for a volume-group using the acli command to ensure optimal performance for VMs.” The specific command in acli allows the administrator to enable load-balancing, distributing the iSCSI sessions for the volume-group’s vDisks across the available CVMs in the cluster. This ensures that the VM’s I/O requests are handled by multiple CVMs, preventing any single CVM from becoming a bottleneck.

The Nutanix Certified Professional - Unified Storage (NCP-US) study guide further elaborates that load-balancing for a volume-group is enabled with the acli vg.update command. The option that turns it on is load_balance_vm_attachments=true, for example: acli vg.update <vg_name> load_balance_vm_attachments=true. Once set, I/O for the volume-group's vDisks is distributed across CVMs for better performance. This is particularly important for volume-groups with multiple vDisks, as in this case with four vDisks, to optimize I/O distribution.

The other options are incorrect:

Enable load-balancing for the volume-group using ncli: The ncli (Nutanix Command-Line Interface) is used for cluster-wide configurations, but load-balancing for volume-groups is specifically managed via acli, which is tailored for AHV and volume-group operations.

Select multiple initiator IQNs when creating the volume-group: Initiator IQNs (iSCSI Qualified Names) are used to authenticate and connect initiators to the volume-group, but selecting multiple IQNs does not enable load-balancing across CVMs.

Select multiple iSCSI adapters within the VM: Configuring multiple iSCSI adapters in the VM is a client-side configuration that can help with multipathing, but it does not control load-balancing across CVMs, which is a cluster-side setting.

The NUSA course documentation highlights that “enabling load-balancing via acli for a volume-group ensures that iSCSI traffic is distributed across multiple CVMs, optimizing performance for VMs with direct-attached volumes.”
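The effect of the setting can be pictured with a simplified Python model of which CVM serves each vDisk. This is a conceptual sketch, not the AOS placement algorithm: it assumes that without load-balancing every vDisk is served by one CVM (standing in for the VM's local CVM) and that with load-balancing vDisks are spread round-robin. The vDisk and CVM names are hypothetical.

```python
def place_vdisks(vdisks, cvms, load_balanced=True):
    """Map each vDisk to the CVM that serves its I/O in this simplified model.

    Without load-balancing, every vDisk is served by the first CVM in the list
    (standing in for the VM's local CVM). With load-balancing, vDisks are
    spread round-robin across all CVMs.
    """
    if not cvms:
        raise ValueError("at least one CVM is required")
    if not load_balanced:
        return {vdisk: cvms[0] for vdisk in vdisks}
    return {vdisk: cvms[i % len(cvms)] for i, vdisk in enumerate(vdisks)}


if __name__ == "__main__":
    vdisks = ["vdisk-0", "vdisk-1", "vdisk-2", "vdisk-3"]
    cvms = ["cvm-a", "cvm-b", "cvm-c"]
    print(place_vdisks(vdisks, cvms, load_balanced=False))
    print(place_vdisks(vdisks, cvms))
```

In the model, the four vDisks in the question go from one CVM to three, which is the bottleneck relief the explanation describes.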

An administrator is concerned that storage in the Nutanix File Server is being used to store personal photos and videos. How can the administrator determine if this is the case?

Examine the Usage Summary table for the File Server Container in the Prism Element Storage page.

Examine the File Activity widget in the File Analytics dashboard for the File Server.

Examine the File Distribution by Type widget from the Files Console for the File Server.

Examine the File Distribution by Type widget in the File Analytics dashboard for the File Server.

To determine if the Nutanix File Server is being used to store personal photos and videos, the administrator should examine the File Distribution by Type widget in the File Analytics dashboard for the File Server. Nutanix File Analytics is a monitoring and analytics tool that provides detailed insights into file share activities, including the types of files stored on the file server. The File Distribution by Type widget specifically categorizes files by their extensions (e.g., .jpg, .mp4), allowing the administrator to identify whether image or video files are present.

The Nutanix Unified Storage Administration (NUSA) course states, “The File Analytics dashboard includes the File Distribution by Type widget, which displays the breakdown of file types stored on the Nutanix File Server, enabling administrators to identify specific file categories such as images or videos.” This widget provides a visual representation of file types, making it easy to detect if personal photos (e.g., .jpg, .png) or videos (e.g., .mp4, .avi) are being stored.

The Nutanix Certified Professional - Unified Storage (NCP-US) study guide further elaborates that “File Analytics offers granular visibility into file storage patterns through widgets like File Distribution by Type, which is ideal for identifying unauthorized or non-business-related content, such as personal media files.” By accessing this widget in the File Analytics dashboard, the administrator can confirm the presence of photo and video files and take appropriate action, such as setting policies to restrict such content.

The other options are incorrect or insufficient:

Examine the Usage Summary table for the File Server Container in the Prism Element Storage page: The Usage Summary table in Prism Element provides high-level storage metrics (e.g., capacity usage) but does not break down data by file type, so it cannot identify photos or videos.

Examine the File Activity widget in the File Analytics dashboard for the File Server: The File Activity widget shows file access patterns (e.g., read/write operations) but does not provide details about file types, making it unsuitable for this purpose.

Examine the File Distribution by Type widget from the Files Console for the File Server: The Nutanix Files Console is used for managing file servers and shares, but it does not include a File Distribution by Type widget. This widget is specific to the File Analytics dashboard.

The NUSA course documentation highlights that “the File Distribution by Type widget in File Analytics is a key tool for auditing file content, allowing administrators to detect and manage non-compliant or personal files, such as photos and videos, stored on the file server.”
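The idea behind a distribution-by-type view can be sketched in Python by grouping file sizes by extension. This mimics the concept only, not File Analytics' implementation; the file list is hypothetical and the set of media extensions is an assumption.

```python
from collections import Counter
from pathlib import PurePath

# Assumed set of photo/video extensions for this sketch.
MEDIA_EXTENSIONS = {".jpg", ".jpeg", ".png", ".mp4", ".avi", ".mov"}


def distribution_by_type(files):
    """Sum file sizes per extension; files is an iterable of (path, size_bytes)."""
    totals = Counter()
    for path, size in files:
        ext = PurePath(path).suffix.lower() or "(none)"
        totals[ext] += size
    return totals


def media_bytes(totals):
    """Total bytes held in photo/video extensions."""
    return sum(size for ext, size in totals.items() if ext in MEDIA_EXTENSIONS)


if __name__ == "__main__":
    files = [
        ("hr/policy.docx", 200),
        ("users/bob/IMG_001.JPG", 3_000),
        ("users/bob/trip.mp4", 50_000),
        ("README", 10),
    ]
    totals = distribution_by_type(files)
    print(totals.most_common())
    print(media_bytes(totals))
```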

An administrator is trying to add a Nutanix Volume Group (VG) over iSCSI for storage to a Windows VM, but receives the error as shown in the exhibit.

What is a likely reason for this error?

The Windows login authentication is incorrect.

The Windows IP address is not in the whitelist.

The CHAP authentication configured is incorrect.

There is already a VG connected on that client.

Comprehensive and Detailed Explanation from Nutanix Unified Storage (NCP-US) and the Nutanix Unified Storage Administration (NUSA) course documents:

In the Nutanix environment, Volume Groups (VGs) are used to present block storage to guest operating systems via iSCSI targets. These VGs are managed through Prism and can be configured with security features such as CHAP (Challenge-Handshake Authentication Protocol) to ensure secure connections.

Here’s the detailed breakdown:

Authentication Failure Context: The error message shown in the exhibit, “Authentication Failure”, occurs during the iSCSI target logon phase when the initiator (in this case, the Windows VM) attempts to authenticate to the Nutanix VG target. Nutanix Volume Groups can be configured to require CHAP authentication. If the iSCSI initiator’s CHAP username and secret do not match the target’s configuration, authentication fails and the target rejects the login attempt.

Why CHAP is the likely cause: The exhibit shows the authentication failure occurring at the Log On to Target step of the iSCSI Initiator Properties. In the NCP-US and NUSA course materials, CHAP authentication is specifically covered as a method to secure iSCSI sessions, and it is the most common cause of an authentication error at this stage:

“If CHAP authentication is enabled on the target, the initiator must provide the correct CHAP username and secret. Failure to do so results in an authentication error during the login phase.”

Eliminating other options:

Windows login authentication: This is not related to iSCSI target login. Windows login credentials are separate from iSCSI CHAP authentication.

IP address whitelisting: While Nutanix allows whitelisting of initiator IPs for security, a misconfigured whitelist would typically result in a connection refusal error, not an authentication failure error.

Already connected VG: A Windows client can be connected to more than one VG at a time, and an existing connection would not cause the target to reject the CHAP exchange, so it does not explain an authentication failure.

Additional Course Details: The NUSA course materials emphasize that CHAP can be configured for Nutanix Volume Groups either at creation or by modifying the VG’s settings. Make sure the Windows iSCSI initiator has matching CHAP credentials configured under the Advanced button in the iSCSI Initiator Properties.

Best Practice Reminder: When configuring Volume Groups, document the CHAP credentials and validate them in the iSCSI initiator settings to prevent this type of error.

In conclusion, the authentication failure seen in the exhibit is directly related to CHAP authentication misconfiguration on either the Nutanix VG target or the Windows iSCSI initiator. Verifying and synchronizing the CHAP username and secret will resolve the issue.
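Why a wrong secret produces an authentication failure, rather than a connection error, is clear from how CHAP works: the TCP connection and login exchange proceed normally, and the target only rejects the initiator when the hashed response does not match. The Python sketch below implements the MD5 response defined in RFC 1994 (MD5 over the identifier byte, the shared secret, and the challenge), which is the CHAP algorithm iSCSI uses. The secrets and identifier are hypothetical.

```python
import hashlib
import hmac
import os


def chap_response(identifier, secret, challenge):
    """RFC 1994 CHAP with MD5: MD5(identifier byte || secret || challenge)."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def target_verifies(identifier, challenge, response, expected_secret):
    """Target side: recompute the response with its own secret and compare."""
    expected = chap_response(identifier, expected_secret, challenge)
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    challenge = os.urandom(16)           # random challenge sent by the target
    target_secret = b"VGsecret-2024"     # hypothetical secret set on the VG
    good = chap_response(7, b"VGsecret-2024", challenge)
    bad = chap_response(7, b"VGsecret-2O24", challenge)  # one mistyped character
    print(target_verifies(7, challenge, good, target_secret))
    print(target_verifies(7, challenge, bad, target_secret))
```

A single mistyped character in the initiator's secret changes the hash completely, so the target rejects the login even though the network path is fine.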

TESTED 22 Feb 2026

Copyright © 2014-2026 DumpsTool. All Rights Reserved