Which Splunk cluster feature requires additional indexer storage?

Search Head Clustering

Indexer Discovery

Indexer Acknowledgement

Index Summarization

Comprehensive and Detailed Explanation (From Splunk Enterprise Documentation)

Splunk’s documentation on summary indexing and data-model acceleration clarifies that summary data is stored as additional indexed data on the indexers. Summary indexing produces new events (aggregations, rollups, scheduled search outputs) and stores them in summary indexes. Splunk explains that these summaries accumulate over time and require additional bucket storage, retention considerations, and sizing adjustments.

The documentation for accelerated data models further confirms that acceleration summaries are stored alongside raw data on indexers, increasing disk usage proportional to the acceleration workload. This makes summary indexing the only listed feature that strictly increases indexer storage demand.

In contrast, Search Head Clustering replicates configuration and knowledge objects across search heads—not on indexers. Indexer Discovery affects forwarder behavior, not storage. Indexer Acknowledgement controls data-delivery guarantees but does not create extra indexed content.

Therefore, only Index Summarization (summary indexing) directly increases indexer storage requirements.

Which of the following should be done when installing Enterprise Security on a Search Head Cluster? (Select all that apply.)

Install Enterprise Security on the deployer.

Install Enterprise Security on a staging instance.

Copy the Enterprise Security configurations to the deployer.

Use the deployer to deploy Enterprise Security to the cluster members.

When installing Enterprise Security on a Search Head Cluster (SHC), two steps apply: install Enterprise Security on the deployer, and use the deployer to deploy Enterprise Security to the cluster members. Enterprise Security is a premium app that provides security analytics and monitoring capabilities for Splunk. It is installed on an SHC through the deployer, a standalone instance that distributes apps and other configurations to the SHC members. Install Enterprise Security on the deployer first, then deploy it to the cluster members with the splunk apply shcluster-bundle command. Enterprise Security should not be installed on a staging instance, because a staging instance is not part of the SHC deployment process. Enterprise Security configurations should not be copied to the deployer, because they are already included in the Enterprise Security app package.
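The push step can be scripted from the deployer. The following is a minimal Python sketch that only builds the argument list for the splunk apply shcluster-bundle command (it does not run it). It assumes the Enterprise Security apps already sit in the deployer's $SPLUNK_HOME/etc/shcluster/apps directory; the install path, member host name, and credentials are hypothetical placeholders.

```python
import shlex


def build_shc_push_command(splunk_home: str, member_uri: str, user: str, password: str) -> list[str]:
    """Return the argv for pushing the deployer's configuration bundle.

    member_uri is the management URI of any one cluster member; per Splunk's
    documentation the deployer still distributes the bundle to all members.
    """
    return [
        f"{splunk_home}/bin/splunk",
        "apply",
        "shcluster-bundle",
        "-target",
        member_uri,
        "-auth",
        f"{user}:{password}",  # avoid hard-coding real credentials in scripts
    ]


# Hypothetical install path, host, and credentials, for illustration only.
cmd = build_shc_push_command("/opt/splunk", "https://sh1.example.com:8089", "admin", "changeme")
print(shlex.join(cmd))
```

On the deployer, the list could be passed to subprocess.run(cmd, check=True); building an argv list instead of a shell string avoids quoting problems with passwords.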

Splunk Enterprise platform instrumentation refers to data that the Splunk Enterprise deployment logs in the _introspection index. Which of the following logs are included in this index? (Select all that apply.)

audit.log

metrics.log

disk_objects.log

resource_usage.log

The following logs are included in the _introspection index, which contains data that the Splunk Enterprise deployment logs for platform instrumentation:

disk_objects.log. This log contains information about the disk objects that Splunk creates and manages, such as buckets, indexes, and files. This log can help monitor the disk space usage and the bucket lifecycle.

resource_usage.log. This log contains information about the resource usage of Splunk processes, such as CPU, memory, disk, and network. This log can help monitor Splunk performance and identify resource bottlenecks.

The following logs are not included in the _introspection index:

audit.log. This log contains information about the audit events that Splunk records, such as user actions, configuration changes, and search activity. It is indexed into the _audit index and can help audit Splunk operations and security.

metrics.log. This log contains information about the performance metrics that Splunk collects, such as data throughput, data latency, search concurrency, and search duration. It is indexed into the _internal index, which holds the data Splunk generates for its own internal logging, and can help measure Splunk performance and efficiency. For more information, see About Splunk Enterprise logging and About the _introspection index in the Splunk documentation.
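The introspection logs are written under $SPLUNK_HOME/var/log/introspection/ before they are indexed. A minimal Python sketch for a quick look at them outside Splunk follows. It assumes, as an unverified illustration, that each line of resource_usage.log is one JSON object with a top-level "component" key; the sample lines are invented.

```python
import json
from collections import Counter


def count_components(lines):
    """Count introspection events per top-level "component" value.

    Assumes one JSON object per line (an assumption about the log format;
    check it against your own files). Lines that are not valid JSON are skipped.
    """
    counts = Counter()
    for line in lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue
        if isinstance(event, dict):
            counts[event.get("component", "<none>")] += 1
    return counts


# Invented sample lines standing in for resource_usage.log content.
sample = [
    '{"component": "PerProcess", "data": {"process": "splunkd", "pct_cpu": "1.2"}}',
    '{"component": "Hostwide", "data": {"cpu_count": 8}}',
    '{"component": "PerProcess", "data": {"process": "search", "pct_cpu": "40.0"}}',
    "not json",
]
counts = count_components(sample)
print(counts)
```

For a real file, pass an open file handle, for example count_components(open(path, encoding="utf-8")), since a file object iterates line by line.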

When designing the number and size of indexes, which of the following considerations should be applied?

Expected daily ingest volume, access controls, number of concurrent users

Number of installed apps, expected daily ingest volume, data retention time policies

Data retention time policies, number of installed apps, access controls

Expected daily ingest volumes, data retention time policies, access controls

When designing the number and size of indexes, the following considerations should be applied:

Expected daily ingest volumes: This is the amount of data that the Splunk platform will ingest and index per day. It affects the storage capacity, the indexing performance, and the license usage of the deployment. The number and size of indexes should be planned according to the expected daily ingest volumes, as well as the peak ingest volumes, so that the deployment can handle the data load and meet the business requirements [1][2].

Data retention time policies: This is the duration for which the data will be stored and searchable by the Splunk platform. It affects the storage capacity, the data availability, and the compliance of the deployment. Because retention is configured per index, data with different retention requirements generally belongs in separate indexes; the number and size of indexes should be planned so that the deployment can retain each kind of data for the required period and meet legal or regulatory obligations [1][3].

Access controls: This is the mechanism for granting or restricting access to data for Splunk users and roles. It affects data security, privacy, and governance. Because Splunk roles are granted access at the index level, data that must be visible to different audiences generally belongs in separate indexes; planning indexes around access controls and data sensitivity protects the data from unauthorized or inappropriate access and meets organizational standards [1][4].

Option D is the correct answer because it reflects the most relevant and important considerations for designing the number and size of indexes. Option A is incorrect because the number of concurrent users is not a direct factor for designing the number and size of indexes, but rather a factor for designing the search head capacity and the search head clustering configuration [5]. Option B is incorrect because the number of installed apps is not a direct factor for designing the number and size of indexes, but rather a factor for designing the app compatibility and the app performance. Option C is incorrect because it omits the expected daily ingest volumes, which is a crucial factor for designing the number and size of indexes.
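Daily ingest volume and retention combine directly into a disk estimate. The following is a minimal Python sketch of that arithmetic. The 0.5 disk-to-raw ratio is a commonly used planning rule of thumb (compressed raw data plus index files), not a guaranteed figure, and treating every replicated copy as full-size overestimates clustered storage; the function name is hypothetical.

```python
def estimate_index_storage_gb(daily_ingest_gb: float, retention_days: float,
                              disk_ratio: float = 0.5, replication_factor: int = 1) -> float:
    """Rough disk estimate for one index.

    disk_ratio: on-disk size as a fraction of raw ingest (assumed 0.5 here;
    measure your own data). replication_factor: copies kept by an indexer
    cluster (1 = standalone). Every copy is treated as full size, which
    overestimates clusters where some copies are not searchable.
    """
    if daily_ingest_gb < 0 or retention_days < 0:
        raise ValueError("ingest and retention must be non-negative")
    return daily_ingest_gb * retention_days * disk_ratio * replication_factor


# Example: 100 GB/day kept for 90 days.
standalone = estimate_index_storage_gb(100, 90)
clustered = estimate_index_storage_gb(100, 90, replication_factor=2)
print(standalone, clustered)
```

Running the estimate per index, rather than for the whole deployment, also shows how retention differences between indexes change the total.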

Which of the following Splunk deployments has the recommended minimum components for a high-availability search head cluster?

2 search heads, 1 deployer, 2 indexers

3 search heads, 1 deployer, 3 indexers

1 search head, 1 deployer, 3 indexers

2 search heads, 1 deployer, 3 indexers

The correct Splunk deployment to have the recommended minimum components for a high-availability search head cluster is 3 search heads, 1 deployer, 3 indexers. This configuration ensures that the search head cluster has at least three members, which is the minimum number required for a quorum and failover [1]. The deployer is a separate instance that manages the configuration updates for the search head cluster [2]. The indexers are the nodes that store and index the data, and having at least three of them provides redundancy and load balancing [3]. The other options are not recommended, because they have either fewer than three search heads or fewer than three indexers, which reduces the availability and reliability of the cluster. Therefore, option B is the correct answer, and options A, C, and D are incorrect.

1: About search head clusters

2: Use the deployer to distribute apps and configuration updates

3: About indexer clusters and index replication

(How can a Splunk admin control the logging level for a specific search to get further debug information?)

Configure infocsv_log_level = DEBUG in limits.conf.

Insert | noop log_debug=* after the base search.

Open the Search Job Inspector in Splunk Web and modify the log level.

Use Settings > Server settings > Server logging in Splunk Web.

Splunk Enterprise allows administrators to dynamically increase logging verbosity for a specific search by adding a | noop log_debug=* command immediately after the base search. This method provides temporary, search-specific debug logging without requiring global configuration changes or restarts.

The noop (no operation) command passes all results through unchanged but can trigger internal logging actions. When paired with the log_debug=* argument, it instructs Splunk to record detailed debug-level log messages for that specific search execution in search.log and the relevant internal logs.

This approach is officially documented for troubleshooting complex search issues such as:

Unexpected search behavior or slow performance.

Field extraction or command evaluation errors.

Debugging custom search commands or macros.

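Using this method is safer and more efficient than modifying server-wide logging configuration (for example, log-local.cfg, or infocsv_log_level in limits.conf as in Option A), which affects all searches and increases log noise. The “Server logging” page in Splunk Web (Option D) adjusts global logging levels, not per-search debugging, and the Search Job Inspector (Option C) displays a job's search.log but does not change its log level.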
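After a search runs with | noop log_debug=*, its search.log sits in the job's dispatch directory ($SPLUNK_HOME/var/run/splunk/dispatch/<sid>/). The following is a minimal Python sketch for pulling out only the DEBUG lines. It assumes the usual splunkd-style line layout "DATE TIME LEVEL COMPONENT - message", with the level as the third whitespace-separated token; that layout and the sample lines are assumptions, not taken from the documentation.

```python
from pathlib import Path


def debug_lines(lines):
    """Yield lines whose level field (assumed to be the third token) is DEBUG."""
    for line in lines:
        parts = line.split(maxsplit=3)
        if len(parts) >= 3 and parts[2] == "DEBUG":
            yield line.rstrip("\n")


def read_debug_lines(dispatch_dir):
    """Return the DEBUG lines from <dispatch_dir>/search.log."""
    with open(Path(dispatch_dir) / "search.log", encoding="utf-8", errors="replace") as f:
        return list(debug_lines(f))


# Invented sample lines in the assumed layout.
sample = [
    "07-31-2024 10:00:00.123 INFO  SearchParser - PARSING: search index=main\n",
    "07-31-2024 10:00:00.456 DEBUG SearchParser - tokenized 3 terms\n",
]
found = list(debug_lines(sample))
print(found)
```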

References (Splunk Enterprise Documentation):

• Search Debugging Techniques and the noop Command

• Understanding search.log and Per-Search Logging Control

• Splunk Search Job Inspector and Debugging Workflow

• Troubleshooting SPL Performance and Field Extraction Issues

(If the maxDataSize attribute is set to auto_high_volume in indexes.conf on a 64-bit operating system, what is the maximum hot bucket size?)

4 GB

750 MB

10 GB

1 GB

According to the indexes.conf reference in Splunk Enterprise, the parameter maxDataSize controls the maximum size (in MB) of a single hot bucket before Splunk rolls it to a warm bucket. When the value is set to auto_high_volume on a 64-bit system, Splunk automatically sets the maximum hot bucket size to 10 GB.

maxDataSize accepts the following values:

auto: 750 MB per hot bucket, regardless of architecture. This is the default.

auto_high_volume: Specifically tuned for high-ingest indexes; on 64-bit systems, this equals 10 GB per hot bucket, and on 32-bit systems, 1 GB.

A positive integer: An explicit maximum hot bucket size in MB.

The purpose of larger hot bucket sizes on 64-bit systems is to improve indexing performance and reduce the overhead of frequent bucket rolling during heavy data ingestion. Because the auto_high_volume size depends on the architecture, the same setting yields a much smaller bucket on a 32-bit system.

Thus, for high-throughput environments running 64-bit operating systems, auto_high_volume = 10 GB is the correct and Splunk-documented configuration.
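The mapping from maxDataSize values to sizes is small enough to express as a lookup. The following is a minimal Python sketch based on the indexes.conf reference ("auto" is 750 MB; "auto_high_volume" is 10 GB on 64-bit and 1 GB on 32-bit; an integer is MB). The function name is hypothetical, and 1 GB is taken as 1024 MB.

```python
def max_hot_bucket_mb(max_data_size: str, is_64bit: bool = True) -> int:
    """Resolve an indexes.conf maxDataSize value to megabytes (1 GB = 1024 MB here)."""
    value = max_data_size.strip().lower()
    if value == "auto":
        return 750
    if value == "auto_high_volume":
        return 10 * 1024 if is_64bit else 1024
    size = int(value)  # raises ValueError for unrecognized strings
    if size <= 0:
        raise ValueError("maxDataSize must be a positive integer, auto, or auto_high_volume")
    return size


for setting in ("auto", "auto_high_volume", "500"):
    print(setting, max_hot_bucket_mb(setting))
```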

References (Splunk Enterprise Documentation):

• indexes.conf – maxDataSize Attribute Reference

• Managing Index Buckets and Data Retention

• Splunk Enterprise Admin Manual – Indexer Storage Configuration

• Splunk Performance Tuning: Bucket Management and Hot/Warm Transitions

Which server.conf attribute should be added to the master node's server.conf file when decommissioning a site in an indexer cluster?

site_mappings

available_sites

site_search_factor

site_replication_factor

The site_mappings attribute should be added to the master node’s server.conf file when decommissioning a site in an indexer cluster. It tells the master node which remaining site takes over for the decommissioned site with respect to the buckets that originated there, so that the master can keep meeting the site replication and search factors for those buckets. The value is a comma-separated list of site pairs, where the first site is the decommissioned site and the second site is the remaining site that replaces it. For example, site_mappings = site1:site2,site3:site4 maps buckets from site1 to site2 and buckets from site3 to site4. The other attributes play supporting roles in the same procedure: available_sites lists the sites in the cluster, and the decommissioned site must be removed from it; site_replication_factor and site_search_factor set the number of copies and searchable copies per site, and they must be updated if they explicitly reference the decommissioned site. None of them remaps the decommissioned site's buckets.
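Before restarting the master with a new site_mappings value, it can help to sanity-check the pairs. The following is a minimal Python sketch; both helper names are hypothetical, and the check assumes, as in Splunk's decommissioning procedure, that the decommissioned site has already been removed from available_sites.

```python
def parse_site_mappings(value: str) -> dict[str, str]:
    """Parse 'site1:site2, site3:site4' into {decommissioned_site: remaining_site}."""
    mappings: dict[str, str] = {}
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue
        source, sep, target = item.partition(":")
        source, target = source.strip(), target.strip()
        if not sep or not source or not target:
            raise ValueError(f"malformed site mapping: {item!r}")
        mappings[source] = target
    return mappings


def check_site_mappings(mappings: dict[str, str], available_sites: set[str]) -> list[str]:
    """Report mapped-away sites still listed, or destinations that are not listed."""
    problems = []
    for source, target in mappings.items():
        if source in available_sites:
            problems.append(f"{source} is still listed in available_sites")
        if target not in available_sites:
            problems.append(f"{target} is not listed in available_sites")
    return problems


m = parse_site_mappings("site1:site2, site3:site4")
print(m, check_site_mappings(m, {"site2", "site4"}))
```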

Which of the following strongly impacts storage sizing requirements for Enterprise Security?

The number of scheduled (correlation) searches.

The number of Splunk users configured.

The number of source types used in the environment.

The number of Data Models accelerated.

Data Model acceleration is a feature that enables faster searches over large data sets by summarizing the raw data into a more efficient format. Data Model acceleration consumes additional disk space, because the summaries are stored on the indexers in addition to the raw data. The amount of disk space required depends on the size and complexity of the Data Model, the retention period of the summarized data, and the compression ratio of the data. According to the Splunk Enterprise Security Planning and Installation Manual, Data Model acceleration is one of the factors that strongly impacts storage sizing requirements for Enterprise Security. The other factors are the volume and type of data sources, the retention policy of the data, and the replication factor and search factor of the indexer cluster. The number of scheduled (correlation) searches, the number of Splunk users configured, and the number of source types used in the environment are not directly related to storage sizing requirements for Enterprise Security [1].

1: https://docs.splunk.com/Documentation/ES/6.6.0/Install/Plan#Storage_sizing_requirements

Which of the following is true regarding Splunk Enterprise's performance? (Select all that apply.)

Adding search peers increases the maximum size of search results.

Adding RAM to existing search heads provides additional search capacity.

Adding search peers increases the search throughput as the search load increases.

Adding search heads provides additional CPU cores to run more concurrent searches.

The following statements are true regarding Splunk Enterprise performance:

Adding search peers increases the search throughput as search load increases. This is because adding more search peers distributes the search workload across more indexers, which reduces the load on each indexer and improves the search speed and concurrency.

Adding search heads provides additional CPU cores to run more concurrent searches. This is because adding more search heads increases the number of search processes that can run in parallel, which improves the search performance and scalability.

The following statements are false regarding Splunk Enterprise performance:

Adding search peers does not increase the maximum size of search results. The maximum size of search results is determined by the maxresultrows setting in the limits.conf file, which is independent of the number of search peers.

Adding RAM to an existing search head does not provide additional search capacity. The search capacity of a search head is determined by the number of CPU cores, not the amount of RAM. Adding RAM to a search head may improve the search performance, but not the search capacity. For more information, see Splunk Enterprise performance in the Splunk documentation.
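The CPU-bound nature of search capacity shows up in how Splunk derives its historical search concurrency limit: max_searches_per_cpu × number of CPU cores + base_max_searches, with limits.conf defaults of 1 and 6. The following is a minimal Python sketch of that arithmetic; the function name is hypothetical, and RAM does not appear anywhere in the formula.

```python
def max_concurrent_historical_searches(cpu_cores: int,
                                       max_searches_per_cpu: int = 1,
                                       base_max_searches: int = 6) -> int:
    """Concurrency limit per search head: per-CPU searches times cores, plus a base."""
    if cpu_cores < 1:
        raise ValueError("cpu_cores must be at least 1")
    return max_searches_per_cpu * cpu_cores + base_max_searches


# Doubling cores raises the limit; doubling RAM would not change it.
for cores in (16, 32):
    print(cores, max_concurrent_historical_searches(cores))
```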

(A new Splunk Enterprise deployment is being architected, and the customer wants to ensure that the data to be indexed is encrypted. Where should TLS be turned on in the Splunk deployment?)

Deployment server to deployment clients.

Splunk forwarders to indexers.

Indexer cluster peer nodes.

Browser to Splunk Web.

Splunk Enterprise's security and encryption documentation specifies that the primary mechanism for securing data in motion within a Splunk environment is to enable TLS/SSL encryption between forwarders and indexers. This ensures that log data transmitted from Universal Forwarders or Heavy Forwarders to Indexers is fully encrypted and protected from interception or tampering.

The correct configuration involves setting up signed SSL certificates on both forwarders and indexers:

On the forwarder, TLS settings are defined in outputs.conf, specifying parameters like sslCertPath (clientCert in newer releases), sslPassword, and sslRootCAPath.

On the indexer, TLS is enabled in inputs.conf (a splunktcp-ssl input stanza plus an [SSL] stanza) and server.conf, using the same shared CA for validation.

Splunk’s documentation explicitly states that this configuration protects data-in-transit between the collection (forwarder) and indexing (storage) tiers — which is the critical link where sensitive log data is most vulnerable.

Other communication channels (e.g., deployment server to clients or browser to Splunk Web) can also use encryption but do not secure the ingestion pipeline that handles the indexed data stream. Therefore, TLS should be implemented between Splunk forwarders and indexers.
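The two sides of the forwarder-to-indexer TLS link can be generated from a few parameters. The following is a minimal Python sketch that renders example outputs.conf and inputs.conf stanzas as text. The setting names (clientCert, sslPassword, sslVerifyServerCert, serverCert, requireClientCert) reflect recent releases to the best of my knowledge and should be checked against the .conf spec files for your version; the group name, host names, port, and certificate paths are hypothetical, and the CA path (sslRootCAPath) is assumed to live in server.conf.

```python
def forwarder_outputs_conf(group: str, indexers: list[str], client_cert: str, ssl_password: str) -> str:
    """Render a forwarder-side outputs.conf that sends to indexers over TLS."""
    return "\n".join([
        "[tcpout]",
        f"defaultGroup = {group}",
        "",
        f"[tcpout:{group}]",
        f"server = {','.join(indexers)}",
        f"clientCert = {client_cert}",
        f"sslPassword = {ssl_password}",
        "sslVerifyServerCert = true",
    ]) + "\n"


def indexer_inputs_conf(port: int, server_cert: str, ssl_password: str) -> str:
    """Render an indexer-side inputs.conf that accepts TLS-encrypted forwarder traffic."""
    return "\n".join([
        f"[splunktcp-ssl:{port}]",
        "disabled = 0",
        "",
        "[SSL]",
        f"serverCert = {server_cert}",
        f"sslPassword = {ssl_password}",
        "requireClientCert = true",
    ]) + "\n"


# Hypothetical hosts and paths, for illustration only.
outputs = forwarder_outputs_conf("primary_indexers",
                                 ["idx1.example.com:9997", "idx2.example.com:9997"],
                                 "/opt/splunkforwarder/etc/auth/mycerts/client.pem", "changeme")
inputs = indexer_inputs_conf(9997, "/opt/splunk/etc/auth/mycerts/server.pem", "changeme")
print(outputs)
print(inputs)
```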

References (Splunk Enterprise Documentation):

• Securing Data in Transit with SSL/TLS

• Configure Forwarder-to-Indexer Encryption Using SSL Certificates

• Server and Forwarder Authentication Setup Guide

• Splunk Enterprise Admin Manual – Security and Encryption Best Practices

What is the default log size for Splunk internal logs?

10MB

20 MB

25MB

30MB

Splunk internal logs are stored in the $SPLUNK_HOME/var/log/splunk directory by default. The default maximum size for a Splunk internal log file is 25 MB: when a log file reaches 25 MB, Splunk rolls it to a backup file and starts a new log file. The default number of backup files is 5, so Splunk keeps up to 5 backup files for each log file. These defaults are set in $SPLUNK_HOME/etc/log.cfg (the maxFileSize and maxBackupIndex settings).
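The 25 MB size and 5 backups together bound how much disk each internal log can use. The following is a minimal Python sketch of that arithmetic; it assumes rolled files are numbered name.1 through name.5, as with splunkd.log.1, and the helper names are hypothetical.

```python
def rotated_log_files(name: str, backups: int = 5) -> list[str]:
    """Active log file followed by its numbered backups."""
    return [name] + [f"{name}.{i}" for i in range(1, backups + 1)]


def max_log_footprint_mb(max_file_mb: float = 25, backups: int = 5) -> float:
    """Upper bound on disk used by one log: the active file plus every backup."""
    return max_file_mb * (backups + 1)


print(rotated_log_files("splunkd.log"))
print(max_log_footprint_mb())
```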

In the deployment planning process, when should a person identify who gets to see network data?

Deployment schedule

Topology diagramming

Data source inventory

Data policy definition

In the deployment planning process, a person should identify who gets to see network data in the data policy definition step. This step involves defining the data access policies and permissions for different users and roles in Splunk. The deployment schedule step involves defining the timeline and milestones for the deployment project. The topology diagramming step involves creating a visual representation of the Splunk architecture and components. The data source inventory step involves identifying and documenting the data sources and types that Splunk will ingest.

As a best practice, where should the internal licensing logs be stored?

Indexing layer.

License server.

Deployment layer.

Search head layer.

As a best practice, the internal licensing logs should be stored on the indexing layer. The license server (license manager) writes license_usage.log, which records license usage, violations, warnings, and pool information. Splunk's best practice is to forward the internal logs of every management component, including the license server, search heads, and deployment server, to the indexer layer instead of indexing them locally. Keeping this data on the indexers gives it the same retention, replication, and searchability as the rest of the deployment, and lets the Monitoring Console and license usage reports search it in one place. Storing the logs only on the license server, the deployment layer, or the search head layer would isolate them on a component that is not designed to index and retain data.

(A customer wishes to keep costs to a minimum, while still implementing Search Head Clustering (SHC). What are the minimum supported architecture standards?)

Three Search Heads and One SHC Deployer

Two Search Heads with the SHC Deployer being hosted on one of the Search Heads

Three Search Heads but using a Deployment Server instead of a SHC Deployer

Two Search Heads, with the SHC Deployer being on the Deployment Server

Splunk Enterprise officially requires a minimum of three search heads and one deployer for a supported Search Head Cluster (SHC) configuration. This ensures both high availability and data consistency within the cluster.

The Splunk documentation explains that a search head cluster uses RAFT-based consensus to elect a captain responsible for managing configuration replication, scheduling, and user workload distribution. The RAFT protocol requires a quorum of members to maintain consistency. In practical terms, this means a minimum of three members (search heads) to achieve fault tolerance — allowing one member to fail while maintaining operational stability.
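The quorum arithmetic behind the three-member minimum is the standard majority rule used by Raft-style consensus. The following is a minimal Python sketch; the function names are illustrative.

```python
def raft_majority(members: int) -> int:
    """Smallest number of members that forms a majority (quorum)."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority remains."""
    return members - raft_majority(members)


for n in (2, 3, 5):
    print(n, raft_majority(n), tolerated_failures(n))
```

A two-member cluster needs both members for a majority, so it tolerates no failures; three is the smallest size that survives the loss of one member.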

The deployer is a separate Splunk instance responsible for distributing configuration bundles (apps, settings, and user configurations) to all members of the search head cluster. The deployer is not part of the SHC itself but is mandatory for its proper management.

Running with fewer than three search heads or replacing the deployer with a Deployment Server (as in Options B, C, or D) is unsupported and violates Splunk best practices for SHC resiliency and management.

References (Splunk Enterprise Documentation):

• Search Head Clustering Overview – Minimum Supported Architecture

• Deploy and Configure the Deployer for a Search Head Cluster

• High Availability and Fault Tolerance with RAFT in SHC

A customer has a four-site indexer cluster. The customer has requirements to store five copies of searchable data, with one searchable copy of data at the origin site, and one searchable copy at the disaster recovery site (site4).

Which configuration meets these requirements?

site_replication_factor = origin:2, site4:1, total:3

site_replication_factor = origin:1, site4:1, total:5

site_search_factor = origin:2, site4:1, total:3

site_search_factor = origin:1, site4:1, total:5

The correct configuration to meet the customer’s requirements is site_replication_factor = origin:1, site4:1, total:5. This means that each bucket will have at least one copy at the origin site and at least one copy at the disaster recovery site (site4), and the remaining three copies can be placed on any sites. The total number of copies will be five, as required by the customer. The site_replication_factor determines how many copies of each bucket are stored across the sites in a multisite indexer cluster [1]. The site_search_factor determines how many copies of each bucket are searchable across the sites in a multisite indexer cluster [2]. Therefore, option B is the correct answer, and options A, C, and D are incorrect.

1: Configure the site replication factor

2: Configure the site search factor
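The relationship between the two factors can be checked mechanically: Splunk's multisite documentation requires each site search factor value to be no larger than the corresponding site replication factor value. The following is a minimal Python sketch of that check; the helper names are hypothetical, and it only compares keys that appear in both settings.

```python
def parse_site_factor(value: str) -> dict[str, int]:
    """Parse 'origin:1, site4:1, total:5' into {'origin': 1, 'site4': 1, 'total': 5}."""
    factors: dict[str, int] = {}
    for item in value.split(","):
        key, sep, number = item.strip().partition(":")
        if not sep:
            raise ValueError(f"malformed site factor entry: {item!r}")
        factors[key.strip()] = int(number)
    return factors


def search_factor_problems(site_rf: str, site_sf: str) -> list[str]:
    """List keys where the search factor exceeds the replication factor."""
    rf, sf = parse_site_factor(site_rf), parse_site_factor(site_sf)
    problems = []
    for key, sf_value in sf.items():
        rf_value = rf.get(key)
        if rf_value is not None and sf_value > rf_value:
            problems.append(f"{key}: search factor {sf_value} exceeds replication factor {rf_value}")
    return problems


print(search_factor_problems("origin:1, site4:1, total:5", "origin:1, site4:1, total:5"))
print(search_factor_problems("origin:1, site4:1, total:3", "origin:1, site4:1, total:5"))
```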

Which of the following describe migration from single-site to multisite index replication?

A master node is required at each site.

Multisite policies apply to new data only.

Single-site buckets instantly receive the multisite policies.

Multisite total values should not exceed any single-site factors.

Migration from single-site to multisite index replication affects new data only. After the migration, newly ingested data follows the multisite replication and search factors, while existing buckets continue, by default, to follow the single-site replication and search factors; single-site buckets do not instantly receive the multisite policies. Multisite total values can exceed the single-site factors, as long as they do not exceed the number of peer nodes in the cluster. A master node is not required at each site; a single master node manages the entire multisite cluster.

Which of the following tasks should the architect perform when building a deployment plan? (Select all that apply.)

Use case checklist.

Install Splunk apps.

Inventory data sources.

Review network topology.

When building a deployment plan, the architect should perform the following tasks:

Use case checklist. A use case checklist is a document that lists the use cases that the deployment will support, along with the data sources, the data volume, the data retention, the data model, the dashboards, the reports, the alerts, and the roles and permissions for each use case. A use case checklist helps to define the scope and functionality of the deployment, and to identify the dependencies and requirements for each use case [1].

Inventory data sources. An inventory of data sources is a document that lists the data sources that the deployment will ingest, along with the data type, format, location, collection method, volume, frequency, and owner for each data source. An inventory of data sources helps to determine the data ingestion strategy, the parsing and enrichment, the storage and retention, and the security and compliance requirements for the deployment [1].

Review network topology. A review of network topology examines the network infrastructure and connectivity of the deployment, including network bandwidth, latency, security, and monitoring. It helps to optimize network performance and reliability, and to identify network risks and mitigations for the deployment [1].

Installing Splunk apps is not a task that the architect should perform when building a deployment plan; it is a task that the administrator performs when implementing the plan. Installing Splunk apps is a technical activity that requires access to the Splunk instances and their configurations, which are not available at the planning stage.

(Which of the following has no impact on search performance?)

Decreasing the phone home interval for deployment clients.

Increasing the number of indexers in the indexer tier.

Allocating compute and memory resources with Workload Management.

Increasing the number of search heads in a Search Head Cluster.

According to Splunk Enterprise Search Performance and Deployment Optimization guidelines, the phone home interval (configured for deployment clients communicating with a Deployment Server) has no impact on search performance.

The phone home mechanism controls how often deployment clients check in with the Deployment Server for configuration updates or new app bundles. This process occurs independently of the search subsystem and does not consume indexer or search head resources that affect query speed, indexing throughput, or search concurrency.

In contrast:

Increasing the number of indexers (Option B) improves search performance by distributing indexing and search workloads across more nodes.

Workload Management (Option C) allows admins to prioritize compute and memory resources for critical searches, optimizing performance under load.

Increasing search heads (Option D) can enhance concurrency and user responsiveness by distributing search scheduling and ad-hoc query workloads.

Therefore, adjusting the phone home interval is strictly an administrative operation and has no measurable effect on Splunk search or indexing performance.

References (Splunk Enterprise Documentation):

• Deployment Server: Managing Phone Home Intervals

• Search Performance Optimization and Resource Management

• Distributed Search Architecture and Scaling Best Practices

• Workload Management Overview – Resource Allocation in Search Operations

Which of the following options in limits.conf may provide performance benefits at the forwarding tier?

Enable the indexed_realtime_use_by_default attribute.

Increase the maxKBps attribute.

Increase the parallelIngestionPipelines attribute.

Increase the max_searches_per_cpu attribute.

The correct answer is B. Increase the maxKBps attribute. maxKBps, in the [thruput] stanza of limits.conf, caps the rate in kilobytes per second at which a forwarder sends data. On a universal forwarder it defaults to 256, which can become a bottleneck for high-volume sources, so raising it (or setting it to 0 for no limit) may improve forwarding throughput [3]. Network capacity should be watched when raising it, because a higher cap lets the forwarder consume more bandwidth. Option C names a real forwarding-performance setting, but parallelIngestionPipelines is configured in the [general] stanza of server.conf, not in limits.conf; it sets the number of independent ingestion pipelines that a forwarder or indexer runs [1]. Option A, indexed_realtime_use_by_default, is a limits.conf [realtime] setting that makes real-time searches use indexed real-time mode by default; it affects searching, not forwarding [2]. Option D, max_searches_per_cpu, controls search concurrency on search heads and indexers, not forwarding performance [4]. Therefore, option B is the correct answer, and options A, C, and D are incorrect.

1: Configure parallel ingestion pipelines

2: Configure real-time forwarding

3: Configure forwarder output

4: Configure search performance
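A quick calculation shows why the default throughput cap matters. The following is a minimal Python sketch that converts a maxKBps value into the most data a forwarder could send in a day; it assumes 1 KB = 1024 bytes and treats 0 as unlimited. The function name is hypothetical.

```python
def max_daily_volume_gb(max_kbps: float) -> float:
    """Upper bound on data sent per day at a given maxKBps (0 means no limit)."""
    if max_kbps < 0:
        raise ValueError("maxKBps cannot be negative")
    if max_kbps == 0:
        return float("inf")
    seconds_per_day = 86_400
    return max_kbps * seconds_per_day / 1024 / 1024  # KB -> MB -> GB


print(max_daily_volume_gb(256))  # about 21 GB/day at the universal forwarder default
```

A source producing more than roughly 21 GB per day through a single universal forwarder at the default setting would therefore fall behind.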

Which of the following artifacts are included in a Splunk diag file? (Select all that apply.)

OS settings.

Internal logs.

Customer data.

Configuration files.

The following artifacts are included in a Splunk diag file:

OS settings. These are details about the operating system and host that Splunk runs on, such as the OS and kernel version, CPU and memory, disk space, and resource limits (ulimits). diag records this information in a system information file so that support can see the environment Splunk was running in.

Internal logs. These are the log files that Splunk generates to record its own activities, such as splunkd.log, metrics.log, audit.log, and others. These logs can help troubleshoot Splunk issues and monitor Splunk performance.

Configuration files. These are the files that Splunk uses to configure various aspects of its operation, such as server.conf, indexes.conf, props.conf, transforms.conf, and others. These files can help understand Splunk settings and behavior.

The following artifact is not included in a Splunk diag file:

Customer data. This is the data that Splunk indexes and makes searchable, such as the rawdata and the tsidx files. It is not part of the Splunk diag file, because it may contain sensitive or confidential information. For more information, see Generate a diagnostic snapshot of your Splunk Enterprise deployment in the Splunk documentation.
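A diag is produced as a compressed tar archive, so its contents can be reviewed with standard tooling before it is shared. The following is a minimal Python sketch that groups archive members into the categories discussed above. The classification rules are heuristics, and the member names used for testing (including systeminfo.txt as the OS summary file) are assumptions about the diag layout, not taken from the documentation.

```python
import tarfile
from collections import defaultdict


def classify_diag_members(names):
    """Group diag archive paths into rough artifact categories by file name."""
    groups = defaultdict(list)
    for name in names:
        base = name.rsplit("/", 1)[-1]
        if base == "systeminfo.txt":  # assumed name of the OS/host summary
            groups["os"].append(name)
        elif base.endswith(".conf"):
            groups["config"].append(name)
        elif "/log/" in name or base.endswith(".log") or ".log." in base:
            groups["logs"].append(name)
        else:
            groups["other"].append(name)
    return dict(groups)


def classify_diag(path):
    """Classify the regular files inside a diag .tar.gz archive."""
    with tarfile.open(path, "r:gz") as tar:
        return classify_diag_members(m.name for m in tar.getmembers() if m.isfile())


# Hypothetical member names, for illustration only.
names = [
    "diag-host-2024-01-01/etc/system/local/server.conf",
    "diag-host-2024-01-01/log/splunkd.log",
    "diag-host-2024-01-01/log/splunkd.log.1",
    "diag-host-2024-01-01/systeminfo.txt",
    "diag-host-2024-01-01/composite.xml",
]
groups = classify_diag_members(names)
print({k: len(v) for k, v in groups.items()})
```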

(Which of the following is a benefit of using SmartStore?)

Automatic selection of replication and search factors.

Separating storage from compute.

Knowledge Object replication.

Cluster Manager is no longer required.

According to the Splunk SmartStore Architecture Guide, the primary benefit of SmartStore is the separation of storage from compute resources within an indexer cluster. SmartStore enables Splunk to decouple indexer storage (data at rest) from the compute layer (indexers that perform searches and indexing).

With SmartStore, hot buckets remain on the indexers' local disk, while warm buckets are uploaded to an external remote object store such as Amazon S3, Google Cloud Storage, or on-premises S3-compatible storage. A cache manager on each indexer keeps local copies of recently searched warm buckets and fetches others from the remote store on demand. Because the remote store, not the indexers' local disks, holds the master copy of warm data, organizations can scale compute and storage independently.

This architecture improves cost efficiency and scalability by:

Lowering on-premises storage costs using object storage for retention.

Enabling dynamic scaling of indexers without impacting total data availability.

Reducing replication overhead, because the remote object store, rather than multiple peer-to-peer bucket copies, provides durability for warm data.

SmartStore does not automatically select replication or search factors (Option A), does not handle Knowledge Object replication (Option C), and still requires the Cluster Manager (Option D) to coordinate cluster activities.
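The storage/compute split can be sketched in Python. This is a hypothetical model, not Splunk's implementation: a dictionary stands in for the remote object store, and the class name, the bucket-count capacity, and the least-recently-used eviction rule are all assumptions made for illustration. It shows that a replacement indexer with an empty cache can still search every bucket, because the data lives in the remote store rather than on the indexer.

```python
from collections import OrderedDict


class IndexerCacheSketch:
    """Hypothetical SmartStore model: the remote store is the system of record
    for warm buckets; each indexer keeps only a bounded local cache."""

    def __init__(self, remote_store: dict, capacity: int):
        if capacity < 1:
            raise ValueError("capacity must be at least 1 bucket")
        self.remote = remote_store   # stand-in for S3 or another object store
        self.capacity = capacity     # number of buckets that fit on local disk
        self.cache = OrderedDict()   # bucket_id -> data, least recently used first
        self.downloads = 0           # how many times the remote store was read

    def read_bucket(self, bucket_id: str) -> str:
        if bucket_id in self.cache:
            self.cache.move_to_end(bucket_id)  # cache hit: mark as recently used
            return self.cache[bucket_id]
        data = self.remote[bucket_id]          # cache miss: fetch from remote store
        self.downloads += 1
        self.cache[bucket_id] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)     # evict the least recently used bucket
        return data


# Shared remote store with 100 warm buckets (hypothetical contents).
remote = {f"bucket_{i}": f"events for bucket {i}" for i in range(100)}

indexer = IndexerCacheSketch(remote, capacity=10)
for bucket in ["bucket_1", "bucket_2", "bucket_1"]:
    indexer.read_bucket(bucket)
print(indexer.downloads)  # 2: the second read of bucket_1 is served from cache

# A newly added indexer starts with an empty cache but can search any bucket.
replacement = IndexerCacheSketch(remote, capacity=10)
print(replacement.read_bucket("bucket_42"))
```

Because the indexer object holds only a cache, adding or replacing indexers changes compute capacity without moving the data itself.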

References (Splunk Enterprise Documentation):

• SmartStore Overview and Architecture Guide

• SmartStore Deployment and Configuration Manual

• Managing Storage and Compute Independence in Indexer Clusters

• Splunk Enterprise Capacity Planning – SmartStore Sizing Guidelines

(Where can files be placed in a configuration bundle on a search peer that will persist after a new configuration bundle has been deployed?)

In the $SPLUNK_HOME/etc/slave-apps//local folder.

In the $SPLUNK_HOME/etc/master-apps//local folder.

Nowhere; the entire configuration bundle is overwritten with each push.

In the $SPLUNK_HOME/etc/slave-apps/_cluster/local folder.

According to the Indexer Clustering Administration Guide, configuration bundles pushed from the Cluster Manager (Master Node) overwrite the contents of the $SPLUNK_HOME/etc/slave-apps/ directory on each search peer (indexer). However, Splunk provides a special persistent location — the _cluster app’s local directory — for files that must survive bundle redeployments.

Specifically, any configuration files placed in:

$SPLUNK_HOME/etc/slave-apps/_cluster/local/

will persist after future bundle pushes because this directory is excluded from the automatic overwrite process.

This is particularly useful for maintaining local overrides or custom configurations that should not be replaced by the Cluster Manager, such as environment-specific inputs, temporary test settings, or monitoring configurations unique to that peer.

Other directories under slave-apps are overwritten each time a configuration bundle is pushed, ensuring consistency across the cluster. Likewise, master-apps exists only on the Cluster Manager and is used for deployment, not persistence.

Thus, the _cluster/local folder is the only safe, Splunk-documented location for configurations that need to survive bundle redeployment.

References (Splunk Enterprise Documentation):

• Indexer Clustering: How Configuration Bundles Work

• Maintaining Local Configurations on Clustered Indexers

• slave-apps and _cluster App Structure and Behavior

• Splunk Enterprise Admin Manual – Cluster Configuration Management Best Practices

(A specific search is performing poorly. The search must run over All Time and is expected to have very few results. Analysis shows that the search accesses a very large number of buckets in a large index. What step would most significantly improve the performance of this search?)

Increase the disk I/O hardware performance.

Increase the number of indexing pipelines.

Set indexed_realtime_use_by_default = true in limits.conf.

Change this to a real-time search using an All Time window.

As per Splunk Enterprise search performance documentation, the most significant factor affecting performance when a search touches a very large number of buckets is disk I/O throughput. A search that spans “All Time” makes Splunk consider every bucket in the index (hot, warm, cold, and any thawed buckets), even if only a few events match the query. Every bucket that cannot be ruled out has to be read from disk, which dramatically increases the amount of data read and makes the search bound by I/O performance rather than by CPU or memory.

Increasing the number of indexing pipelines (Option B) only benefits data ingestion, not search performance. Changing to a real-time search (Option D) does not help because real-time searches are optimized for streaming new data, not historical queries. The indexed_realtime_use_by_default setting (Option C) applies only to streaming indexed real-time searches, not historical “All Time” searches.

To improve performance for such searches, Splunk documentation recommends enhancing disk I/O capability — typically through SSD storage, increased disk bandwidth, or optimized storage tiers. Additionally, creating summary indexes or accelerated data models may help for repeated “All Time” queries, but the most direct improvement comes from faster disk performance since Splunk must scan large numbers of buckets for even small result sets.
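A back-of-the-envelope Python sketch makes the I/O argument concrete. The bucket count, the amount read per bucket, and the disk throughput figures are hypothetical, and the model ignores caching and bloom-filter skipping; it only shows that, for an I/O-bound search, run time falls in proportion to disk throughput.

```python
def io_bound_scan_seconds(buckets: int, mb_read_per_bucket: float,
                          disk_mb_per_sec: float) -> float:
    """Estimate scan time for a search limited purely by disk reads."""
    total_mb = buckets * mb_read_per_bucket
    return total_mb / disk_mb_per_sec


# Hypothetical All Time search: 5,000 buckets, 10 MB read from each.
slow = io_bound_scan_seconds(5000, 10, 200)    # slower, spinning-disk class storage
fast = io_bound_scan_seconds(5000, 10, 1000)   # faster, SSD class storage
print(slow, fast)  # 250.0 50.0
```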

References (Splunk Enterprise Documentation):

• Search Performance Tuning and Optimization

• Understanding Bucket Search Mechanics and Disk I/O Impact

• limits.conf Parameters for Search Performance

• Storage and Hardware Sizing Guidelines for Indexers and Search Heads

Stakeholders have identified high availability for searchable data as their top priority. Which of the following best addresses this requirement?

Increasing the search factor in the cluster.

Increasing the replication factor in the cluster.

Increasing the number of search heads in the cluster.

Increasing the number of CPUs on the indexers in the cluster.

Increasing the search factor in the cluster will best address the requirement of high availability for searchable data. The search factor determines how many searchable copies of each bucket the cluster maintains. With a higher search factor, more peers hold copies that can serve searches immediately, so searches continue after a peer failure or maintenance event without waiting for the cluster to rebuild index files from raw data. Note that the search factor cannot exceed the replication factor. Increasing the replication factor improves the availability of raw data, but not of searchable data. Increasing the number of search heads or the CPUs on the indexers improves search capacity and speed, but not the availability of searchable data. For more information, see Replication factor and search factor in the Splunk documentation.

Which of the following is unsupported in a production environment?

Cluster Manager can run on the Monitoring Console instance in smaller environments.

Search Head Cluster Deployer can run on the Monitoring Console instance in smaller environments.

Search heads in a Search Head Cluster can run on virtual machines.

Indexers in an indexer cluster can run on virtual machines.

Comprehensive and Detailed Explanation (From Splunk Enterprise Documentation)

Splunk Enterprise documentation clarifies that none of the listed configurations are prohibited in production. Splunk allows the Cluster Manager to be colocated with the Monitoring Console in small deployments because both are management-plane functions and do not handle ingestion or search traffic. The documentation also states that the Search Head Cluster Deployer is not a runtime component and has minimal performance requirements, so it may be colocated with the Monitoring Console or License Manager when hardware resources permit.

Splunk also supports virtual machines for both search heads and indexers, provided they are deployed with dedicated CPU, storage throughput, and predictable performance. Splunk’s official hardware guidance specifies that while bare metal often yields higher performance, virtualized deployments are fully supported in production as long as sizing principles are met.

Because Splunk explicitly supports all four configurations under proper sizing and best-practice guidelines, there is no correct selection for “unsupported.” The question is outdated relative to current Splunk Enterprise recommendations.

Which of the following configuration attributes must be set in server.conf on the cluster manager in a single-site indexer cluster?

master_uri

site

replication_factor

site_replication_factor

The correct configuration attribute to set in server.conf on the cluster manager in a single-site indexer cluster is replication_factor. It belongs in the [clustering] stanza on the manager node, together with the mode and search_factor settings1, and defines the number of copies of each bucket that the cluster maintains across its peer nodes3. The master_uri attribute is not set on the cluster manager: it is set on the peer nodes and search heads so that they can find and communicate with the manager. The site attribute defines the site name for each node and applies only to multisite indexer clusters2. The site_replication_factor attribute defines the number of copies of each bucket to maintain across the sites of a multisite indexer cluster4. Therefore, option C is the correct answer, and options A, B, and D are incorrect.

1: Configure the cluster manager 2: Configure the site attribute 3: Configure the replication factor 4: Configure the site replication factor
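To make the distinction concrete, the Python sketch below reads a hypothetical single-site cluster manager stanza with the standard configparser module. The stanza values are placeholders; mode = manager is the newer spelling of mode = master, and the fallback values of 3 and 2 reflect the server.conf defaults for replication_factor and search_factor. The check flags master_uri (or its newer name manager_uri) because those settings belong on peers and search heads.

```python
import configparser

# Hypothetical server.conf content for a single-site cluster manager.
MANAGER_CONF = """
[clustering]
mode = manager
replication_factor = 3
search_factor = 2
pass4SymmKey = changeme
"""


def check_manager_clustering(text: str) -> list:
    """Return a list of problems found in a cluster manager's [clustering] stanza."""
    cfg = configparser.ConfigParser()
    cfg.read_string(text)
    clustering = cfg["clustering"]
    problems = []
    # master_uri / manager_uri tell peers and search heads where the manager is.
    for key in ("master_uri", "manager_uri"):
        if key in clustering:
            problems.append(f"{key} belongs on peers and search heads, not the manager")
    rf = clustering.getint("replication_factor", fallback=3)
    sf = clustering.getint("search_factor", fallback=2)
    if sf > rf:
        problems.append("search_factor cannot exceed replication_factor")
    return problems


print(check_manager_clustering(MANAGER_CONF))  # []
print(check_manager_clustering(MANAGER_CONF + "master_uri = https://cm.example.com:8089\n"))
```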

What is the minimum reference server specification for a Splunk indexer?

12 CPU cores, 12GB RAM, 800 IOPS

16 CPU cores, 16GB RAM, 800 IOPS

24 CPU cores, 16GB RAM, 1200 IOPS

28 CPU cores, 32GB RAM, 1200 IOPS

The minimum reference server specification for a Splunk indexer is 12 CPU cores, 12GB RAM, and 800 IOPS. This is the baseline reference machine that Splunk uses for indexer capacity planning. The other options all describe larger machines, so none of them is the minimum specification. For more information, see Reference hardware in the Splunk documentation.

Splunk Enterprise performs a cyclic redundancy check (CRC) against the first and last bytes to prevent the same file from being re-indexed if it is rotated or renamed. What is the number of bytes sampled by default?

128

512

256

64

Splunk Enterprise performs a CRC check against the first and last 256 bytes of a file by default, as stated in the inputs.conf specification. The length of the initial sample is controlled by the initCrcLength setting, which can be changed if needed. The CRC check helps Splunk Enterprise avoid re-indexing the same file, even if it is renamed or rotated, as long as its beginning does not change. However, this also means that Splunk Enterprise might skip different files that share the same initial bytes, such as log files with identical headers, because their CRCs match. To avoid this, the crcSalt setting can add extra information to the CRC calculation, such as the full file path (crcSalt = <SOURCE>) or a custom string. This gives each file a unique CRC so that Splunk Enterprise indexes it. You can read more about crcSalt and initCrcLength in the How log file rotation is handled documentation.
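The Python sketch below illustrates why identical headers collide and how crcSalt = <SOURCE> separates them. It is a simplified model, not Splunk's code: zlib.crc32 stands in for Splunk's internal hashing, only the beginning CRC is modelled (not the end CRC or the seek-pointer check), and the file names and contents are hypothetical.

```python
import zlib
from typing import Optional

INIT_CRC_LENGTH = 256  # default for initCrcLength in inputs.conf


def begin_crc(content: bytes, path: str, crc_salt: Optional[str] = None,
              init_crc_length: int = INIT_CRC_LENGTH) -> int:
    """Fingerprint a file from its first bytes, optionally salted (sketch only)."""
    data = content[:init_crc_length]
    if crc_salt == "<SOURCE>":
        data += path.encode("utf-8")      # <SOURCE> mixes in the full file path
    elif crc_salt:
        data += crc_salt.encode("utf-8")  # any other string is added as-is
    return zlib.crc32(data)


# Two different files that share a header longer than 256 bytes.
header = b"#Fields: date time host status\n" * 10
file_a = header + b"2024-01-01 10:00:00 web01 200\n"
file_b = header + b"2024-01-02 11:30:00 web02 500\n"

same = begin_crc(file_a, "/var/log/app/a.log") == begin_crc(file_b, "/var/log/app/b.log")
salted = (begin_crc(file_a, "/var/log/app/a.log", "<SOURCE>")
          != begin_crc(file_b, "/var/log/app/b.log", "<SOURCE>"))
print(same, salted)  # True True
```

The same property cuts the other way: with crcSalt = <SOURCE>, a rotated file that is renamed gets a new fingerprint and can be re-indexed, which is why the inputs.conf specification advises caution when using it with rolling log files.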

How does the average run time of all searches relate to the available CPU cores on the indexers?

Average run time is independent of the number of CPU cores on the indexers.

Average run time decreases as the number of CPU cores on the indexers decreases.

Average run time increases as the number of CPU cores on the indexers decreases.

Average run time increases as the number of CPU cores on the indexers increases.

The average run time of all searches increases as the number of CPU cores on the indexers decreases. The indexers do most of the work of retrieving and filtering data for a search, and each running search typically occupies a CPU core on every indexer it touches. With fewer cores, more searches compete for the same processors and have to wait, so each search takes longer; with more cores, more searches can run in parallel and finish sooner. Run time is therefore inversely related to the number of indexer CPU cores. It is not independent of the core count (Option A), and options B and D both reverse the relationship, implying that searches get faster with fewer cores or slower with more cores, which is not the case.
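A deliberately simple Python model shows the direction of the relationship. It assumes each running search needs one core on the indexer and that extra searches simply wait; the base run time of 10 seconds and the load of 24 concurrent searches are hypothetical, and real run times also depend on disk I/O, memory, and search content.

```python
def avg_search_runtime(base_seconds: float, concurrent_searches: int,
                       indexer_cores: int) -> float:
    """Hypothetical model: each running search occupies one core per indexer;
    searches beyond the core count queue, stretching the average run time."""
    if indexer_cores < 1:
        raise ValueError("an indexer needs at least one core")
    contention = max(1.0, concurrent_searches / indexer_cores)
    return base_seconds * contention


for cores in (48, 24, 12, 6):
    print(cores, avg_search_runtime(10, 24, cores))
# 48 10.0
# 24 10.0
# 12 20.0
# 6 40.0
```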

Where in the Job Inspector can details be found to help determine where performance is affected?

Search Job Properties > runDuration

Search Job Properties > runtime

Job Details Dashboard > Total Events Matched

Execution Costs > Components

Execution Costs > Components is where the Job Inspector shows details that help determine where performance is affected, because it breaks down the time spent in each component of the search, such as commands, subsearches, lookups, and post-processing1. This section helps identify the most expensive or inefficient parts of the search, which are the ones worth optimizing1. The other options are not as useful as the Execution Costs > Components section for finding performance issues. Option A, Search Job Properties > runDuration, shows the total time, in seconds, that the search took to run2. This can indicate the overall performance of the search, but it does not provide any details on the specific components or factors that affected the performance. Option B, Search Job Properties > runtime, shows the time, in seconds, that the search took to run on the search head2. This can indicate the performance of the search head, but it does not account for the time spent on the indexers or the network. Option C, Job Details Dashboard > Total Events Matched, shows the number of events that matched the search criteria3. This can indicate the size and scope of the search, but it does not provide any information on the performance or efficiency of the search. Therefore, option D is the correct answer, and options A, B, and C are incorrect.

1: Execution Costs > Components 2: Search Job Properties 3: Job Details Dashboard
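Reading the Components table amounts to ranking components by the time they consumed. The Python sketch below does that for a hand-copied table; the component names are typical of those the Job Inspector lists, but the durations are hypothetical and the helper is not part of any Splunk API.

```python
def most_expensive_components(costs: dict, top_n: int = 3) -> list:
    """Rank Execution Costs components by duration, most expensive first."""
    return sorted(costs.items(), key=lambda item: item[1], reverse=True)[:top_n]


# Component names of the kind shown under Execution Costs; durations are hypothetical.
execution_costs = {
    "command.search.index": 0.3,
    "command.search.rawdata": 4.2,
    "command.search.kv": 1.1,
    "dispatch.stream.remote": 6.8,
}
for name, seconds in most_expensive_components(execution_costs, top_n=2):
    print(f"{name}: {seconds:.1f}s")
# dispatch.stream.remote: 6.8s
# command.search.rawdata: 4.2s
```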

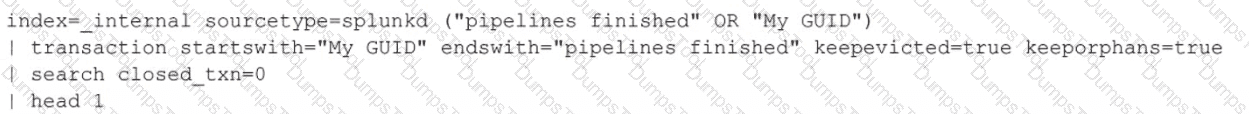

A Splunk instance has crashed, but no crash log was generated. There is an attempt to determine what user activity caused the crash by running the following search:

What does searching for closed_txn=0 do in this search?

Filters results to situations where Splunk was started and stopped multiple times.

Filters results to situations where Splunk was started and stopped once.

Filters results to situations where Splunk was stopped and then immediately restarted.

Filters results to situations where Splunk was started, but not stopped.

Searching for closed_txn=0 in this search filters results to situations where Splunk was started, but not stopped. Such a transaction never received a matching shutdown event, which is consistent with Splunk crashing before it could log a clean stop. The closed_txn field is added by the transaction command when it is run with keepevicted=true: evicted transactions, which were never closed (for example, by an event matching the endswith condition), get closed_txn=0, and closed transactions get closed_txn=11. Therefore, option D is the correct answer, and options A, B, and C are incorrect.

1: transaction command overview
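The Python sketch below mimics the idea behind closed_txn on hypothetical log lines. It is not the transaction command's algorithm (which, among other things, processes events in reverse time order and supports many more options); it only shows that a start event with no matching stop yields an open transaction with closed_txn=0, which is the pattern this search isolates to find the activity just before a crash.

```python
from dataclasses import dataclass, field


@dataclass
class Transaction:
    events: list = field(default_factory=list)
    closed_txn: int = 0  # 1 once an ending event is seen, otherwise 0 (evicted)


def group_transactions(events, starts_with, ends_with):
    """Simplified, forward-in-time grouping of start/stop events."""
    transactions, current = [], None
    for event in events:
        if starts_with in event:
            if current is not None:
                transactions.append(current)  # a new start arrived before any stop
            current = Transaction([event])
        elif current is not None:
            current.events.append(event)
            if ends_with in event:
                current.closed_txn = 1
                transactions.append(current)
                current = None
    if current is not None:
        transactions.append(current)          # still open at the end of the data
    return transactions


# Hypothetical log lines in time order.
log = [
    "splunkd starting",
    "user=alice ran search A",
    "splunkd shutting down",
    "splunkd starting",
    "user=bob ran search B",
    "splunkd starting",
]
open_txns = [t for t in group_transactions(log, "starting", "shutting down")
             if t.closed_txn == 0]
print(len(open_txns), open_txns[0].events)
# 2 ['splunkd starting', 'user=bob ran search B']
```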

Several critical searches that were functioning correctly yesterday are not finding a lookup table today. Which log file would be the best place to start troubleshooting?

btool.log

web_access.log

health.log

configuration_change.log

A lookup table contains a list of values that can be used to enrich or modify data at search time1. Lookup tables can be stored in CSV files or in the KV Store1. Troubleshooting lookup tables involves identifying and resolving issues that prevent the lookup tables from being accessed, updated, or applied correctly by Splunk searches. Some of the tools and methods that can help with troubleshooting lookup tables are:

web_access.log: This is a file that contains information about the HTTP requests and responses that occur between the Splunk web server and the clients2. This file can help troubleshoot issues related to lookup table permissions, availability, and errors, such as 404 Not Found, 403 Forbidden, or 500 Internal Server Error34.

btool output: This is a command-line tool that displays the effective configuration settings for a given Splunk component, such as inputs, outputs, indexes, props, and so on5. This tool can help troubleshoot issues related to lookup table definitions, locations, and precedence, as well as identify the source of a configuration setting6.

search.log: This is a file that contains detailed information about the execution of a search, such as the search pipeline, the search commands, the search results, the search errors, and the search performance. This file can help troubleshoot issues related to lookup commands, arguments, fields, and outputs, such as lookup, inputlookup, and outputlookup.

Option B is the correct answer because web_access.log is the best place to start troubleshooting lookup table issues, as it can provide the most relevant and immediate information about the lookup table access and status. Option A is incorrect because btool is a command-line tool for inspecting effective configuration on demand; btool.log is not where search-time lookup access is recorded. Option C is incorrect because health.log is a file that contains information about the health of the Splunk components, such as the indexer cluster, the search head cluster, the license master, and the deployment server. This file can help troubleshoot issues related to Splunk deployment health, but not necessarily related to lookup tables. Option D is incorrect because configuration_change.log is a file that contains information about the changes made to the Splunk configuration files, such as the user, the time, the file, and the action. This file can help troubleshoot issues related to Splunk configuration changes, but not necessarily related to lookup tables.

(A high-volume source and a low-volume source feed into the same index. Which of the following items best describe the impact of this design choice?)

Low volume data will improve the compression factor of the high volume data.

Search speed on low volume data will be slower than necessary.

Low volume data may move out of the index based on volume rather than age.

High volume data is optimized by the presence of low volume data.

The Splunk Managing Indexes and Storage documentation explains that retention within an index is enforced per bucket, not per source. Buckets are frozen (deleted or archived) when they exceed the index's age limit (frozenTimePeriodInSecs) or when the index exceeds its size limit (maxTotalDataSizeMB), whichever happens first. When a high-volume source and a low-volume source share an index, the high-volume data fills the index quickly, so the size limit is reached first and the oldest buckets, including the low-volume events in them, are frozen based on volume rather than age. Hence Option C is correct.

Additionally, because events from both sources are written into the same buckets, a search that targets only the low-volume source still has to open buckets filled mostly with high-volume events. This makes searches on the low-volume data slower than necessary, which is Option B.

Compression efficiency (Option A) and performance optimization through data mixing (Option D) are not influenced by mixing volume patterns; these are determined by the event structure and compression algorithm, not source diversity. Splunk best practices recommend separating data sources into different indexes based on usage, volume, and retention requirements to optimize both performance and lifecycle management.
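The effect on the low-volume source can be shown with a small Python simulation. It is a hypothetical model: real buckets roll on size and time settings rather than one per day, and the volumes and the 1,000 MB cap (standing in for maxTotalDataSizeMB) are illustrative only. Age-based freezing is left out because, in this scenario, the size cap is reached first.

```python
from collections import deque


def oldest_retained_day(days: int, high_mb_per_day: float, low_mb_per_day: float,
                        max_total_mb: float) -> int:
    """Simplified model: one bucket per day holds both sources; when the index
    exceeds max_total_mb, the oldest buckets are frozen first."""
    daily_mb = high_mb_per_day + low_mb_per_day
    if days < 1 or daily_mb > max_total_mb:
        raise ValueError("need at least one day, and one day's bucket must fit under the cap")
    buckets = deque()  # (day, size_mb), oldest on the left
    total_mb = 0.0
    for day in range(days):
        buckets.append((day, daily_mb))
        total_mb += daily_mb
        while total_mb > max_total_mb:
            _, freed_mb = buckets.popleft()  # freeze the oldest bucket
            total_mb -= freed_mb
    return buckets[0][0]


# Hypothetical: 30 days of data, 1,000 MB size cap.
print(oldest_retained_day(30, 90, 1, 1000))  # 20: the shared index keeps only 10 days
print(oldest_retained_day(30, 0, 1, 1000))   # 0: low-volume data alone keeps all 30 days
```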

References (Splunk Enterprise Documentation):

• Managing Indexes and Storage – How Splunk Manages Buckets and Data Aging

• Splunk Indexing Performance and Data Organization Best Practices

• Splunk Enterprise Architecture and Data Lifecycle Management

• Best Practices for Data Volume Segregation and Retention Policies

When planning a search head cluster, which of the following is true?

All search heads must use the same operating system.

All search heads must be members of the cluster (no standalone search heads).

The search head captain must be assigned to the largest search head in the cluster.

All indexers must belong to the underlying indexer cluster (no standalone indexers).

When planning a search head cluster, the following statement is true: All search heads must use the same operating system. Splunk's system requirements for search head clustering state that each member must run on its own machine or virtual machine and that all member machines must run the same operating system. The other statements are false. Standalone search heads can coexist with a search head cluster and search the same indexers; they simply do not take part in the cluster's configuration replication and scheduled-search distribution. The captain is not assigned to the largest search head: it is elected dynamically from among the cluster members (or set by an administrator as a static captain in recovery scenarios), and hardware size plays no part in the election. Finally, a search head cluster can search either an indexer cluster or non-clustered indexers, so standalone indexers are allowed.

Which Splunk internal field can confirm duplicate event issues from failed file monitoring?

_time

_indextime

_index_latest

latest

According to the Splunk documentation1, the _indextime field is the time when Splunk indexed the event. This field can confirm duplicate event issues from failed file monitoring: if the same event (identical _raw and _time) appears more than once with different _indextime values, the file was read and indexed again. You can display _indextime in the search results, for example by converting it with the eval command, to compare the duplicate copies2. The other options are false because:

The _time field is the time extracted from the event data, not the time when Splunk indexed the event. This field may not reflect the actual indexing time, especially if the event data has a different time zone or format than the Splunk server1.

The _index_latest option is not an event field. It is a time modifier that restricts a search to events indexed before a given time, so it cannot show when each individual event was indexed3.

The latest option is a time modifier that sets the latest time bound of a search, used together with earliest to define the search's time range; it does not record when Splunk indexed an event4.
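The duplicate check described above can be sketched in Python over exported search results. The field names match Splunk's internal fields, but the events and their timestamps are hypothetical, and the helper function is not a Splunk API: events that share _raw and _time yet carry different _indextime values were indexed more than once.

```python
from collections import defaultdict


def find_reindexed(events):
    """Group events by (_raw, _time); report groups indexed at more than one time."""
    index_times = defaultdict(set)
    for event in events:
        index_times[(event["_raw"], event["_time"])].add(event["_indextime"])
    return {key: sorted(times) for key, times in index_times.items() if len(times) > 1}


# Hypothetical events: the first line was read twice by a failing file monitor.
events = [
    {"_raw": "login ok user=alice", "_time": 1700000000, "_indextime": 1700000005},
    {"_raw": "login ok user=alice", "_time": 1700000000, "_indextime": 1700003600},
    {"_raw": "login ok user=bob", "_time": 1700000060, "_indextime": 1700000065},
]
print(find_reindexed(events))
# {('login ok user=alice', 1700000000): [1700000005, 1700003600]}
```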

(What is a recommended way to improve search performance?)

Use the shortest query possible.

Filter as much as possible in the initial search.

Use non-streaming commands as early as possible.

Leverage the not expression to limit returned results.

Splunk Enterprise Search Optimization documentation consistently emphasizes that filtering data as early as possible in the search pipeline is the most effective way to improve search performance. The base search (the part before the first pipe |) determines the volume of raw events Splunk retrieves from the indexers. Therefore, by applying restrictive conditions early—such as time ranges, indexed fields, and metadata filters—you can drastically reduce the number of events that need to be fetched and processed downstream.

The best practice is to put restrictive terms, especially default and indexed fields such as index, sourcetype, and host (e.g., index=security sourcetype=syslog host=server01), directly in the base search rather than applying them later with search or where commands. This minimizes unnecessary data retrieval on the indexers and data movement between the indexers and the search head, improving both search speed and system efficiency.

Using non-streaming commands early (Option C) can degrade performance because they require full result sets before producing output. Likewise, focusing solely on shortening queries (Option A) or excessive use of the not operator (Option D) does not guarantee efficiency, as both may still process large datasets.

Filtering early leverages Splunk’s distributed search architecture to limit data at the indexer level, reducing processing load and network transfer.
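The effect of filter placement can be illustrated with a toy Python pipeline. It does not model Splunk's distributed execution; the event data and the enrich step, which stands in for any costly later command, are hypothetical. Both orderings return the same results, but filtering first means the costly step runs only on the events that survive the filter.

```python
def run_pipeline(events, keep, enrich, filter_first):
    """Return (results, enrich_calls) for a filter placed before or after enrich."""
    calls = 0

    def counted_enrich(event):
        nonlocal calls
        calls += 1
        return enrich(event)

    if filter_first:
        results = [counted_enrich(e) for e in events if keep(e)]
    else:
        results = [r for r in map(counted_enrich, events) if keep(r)]
    return results, calls


def is_syslog(event):
    return event["sourcetype"] == "syslog"


def enrich(event):
    return {**event, "enriched": True}  # stands in for any costly downstream step


# Hypothetical data: 1,000 events, only 10 of which are syslog.
events = [{"sourcetype": "syslog" if i % 100 == 0 else "access", "id": i}
          for i in range(1000)]

early, early_calls = run_pipeline(events, is_syslog, enrich, filter_first=True)
late, late_calls = run_pipeline(events, is_syslog, enrich, filter_first=False)
print(early == late, early_calls, late_calls)  # True 10 1000
```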

References (Splunk Enterprise Documentation):

• Search Performance Tuning and Optimization Guide

• Best Practices for Writing Efficient SPL Queries

• Understanding Streaming and Non-Streaming Commands

• Search Job Inspector: Analyzing Execution Costs

On search head cluster members, where in $splunk_home does the Splunk Deployer deploy app content by default?

etc/apps/

etc/slave-apps/

etc/shcluster/

etc/deploy-apps/

According to the Splunk documentation1, the Splunk Deployer deploys app content to the etc/apps/ directory on the search head cluster members by default. On the deployer, you stage apps under etc/shcluster/apps and push them with the splunk apply shcluster-bundle command; each member then places the apps in its own etc/apps/ directory. The other options are false because:

The etc/slave-apps/ directory is used on indexer cluster peer nodes for the configuration bundle that the cluster manager pushes, not on search head cluster members2.

The etc/shcluster/ directory exists on the deployer, where it holds the apps and configurations staged for distribution; it is not the destination on the members3.

The etc/deploy-apps/ directory is not a standard Splunk directory4. The deployment server uses etc/deployment-apps/, which is a different mechanism.

An indexer cluster is being designed with the following characteristics:

• 10 search peers

• Replication Factor (RF): 4

• Search Factor (SF): 3

• No SmartStore usage

How many search peers can fail before data becomes unsearchable?

Zero peers can fail.

One peer can fail.

Three peers can fail.

Four peers can fail.

Three peers can fail. The limiting factor here is the Replication Factor, not the Search Factor. With RF = 4, every bucket has four copies on four different peers, so any three peers can fail and at least one copy of every bucket survives. If a surviving copy is not searchable, the cluster makes it searchable by rebuilding its index files, so the data remains searchable once the cluster has fixed itself up. If four peers fail, all copies of some buckets may be lost. The Search Factor of 3 determines how many failures the cluster can absorb without needing that rebuild: with SF = 3, up to two peers (SF - 1) can fail and a searchable copy of every bucket is still immediately available1. Because SmartStore is not in use, there is no remote store to recover lost buckets from, so the peers' own copies are the only copies. Therefore, option C is the correct answer, and options A, B, and D are incorrect.

1: Configure the search factor
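The arithmetic can be checked by brute force in Python. The sketch assumes only that each bucket's copies sit on distinct peers; it tries every possible placement and every set of failed peers, and it ignores the time the cluster needs to make a non-searchable copy searchable.

```python
from itertools import combinations


def max_tolerated_failures(num_peers: int, copies: int) -> int:
    """Largest number of simultaneous peer failures that can never remove
    every copy of a bucket, checked over all placements and failure sets."""
    peers = range(num_peers)
    tolerated = 0
    for failed in range(num_peers + 1):
        # A bucket is lost if every peer holding one of its copies is down.
        lost = any(
            set(placement) <= set(down)
            for placement in combinations(peers, copies)
            for down in combinations(peers, failed)
        )
        if lost:
            break
        tolerated = failed
    return tolerated


print(max_tolerated_failures(10, 4))  # 3: with RF=4, three failures never lose all copies
print(max_tolerated_failures(10, 3))  # 2: with SF=3, two failures never lose all searchable copies
```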

Which of the following clarification steps should be taken if apps are not appearing on a deployment client? (Select all that apply.)

Check serverclass.conf of the deployment server.

Check deploymentclient.conf of the deployment client.

Check the content of SPLUNK_HOME/etc/apps of the deployment server.

Search for relevant events in splunkd.log of the deployment server.

The following clarification steps should be taken if apps are not appearing on a deployment client:

Check serverclass.conf of the deployment server. This file defines the server classes and the apps and configurations that they should receive from the deployment server. Make sure that the deployment client belongs to the correct server class and that the server class has the desired apps and configurations.

Check deploymentclient.conf of the deployment client. This file specifies the deployment server that the deployment client contacts and the client name that it uses. Make sure that the deployment client is pointing to the correct deployment server and that the client name matches the server class criteria.

Search for relevant events in splunkd.log of the deployment server. This file contains information about the deployment server activities, such as sending apps and configurations to the deployment clients, detecting client check-ins, and logging any errors or warnings. Look for any events that indicate a problem with the deployment server or the deployment client.

Checking the content of SPLUNK_HOME/etc/apps of the deployment server is not a necessary clarification step, because this directory holds the apps that the deployment server itself runs, not the apps and configurations it distributes. The apps that the deployment server distributes to its clients are stored in SPLUNK_HOME/etc/deployment-apps. For more information, see Configure deployment server and clients in the Splunk documentation.
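When reviewing serverclass.conf, the key question is whether the client actually matches a server class. The Python sketch below approximates that test with shell-style wildcards. It is an assumption-laden simplification (Splunk's own whitelist and blacklist matching has more options than fnmatch), and the client name, IP address, and patterns are hypothetical.

```python
from fnmatch import fnmatch


def client_in_server_class(client_ids, whitelist, blacklist=()):
    """Hypothetical check: a client belongs to a server class if any of its
    identifiers matches a whitelist pattern and none matches a blacklist pattern."""
    def matches(patterns):
        return any(fnmatch(cid, pattern) for cid in client_ids for pattern in patterns)
    return matches(whitelist) and not matches(blacklist)


client = ["uf-web-01", "10.1.2.3"]  # e.g. client name and IP address
print(client_in_server_class(client, ["uf-web-*"]))                 # True
print(client_in_server_class(client, ["uf-web-*"], ["uf-web-01"]))  # False
print(client_in_server_class(client, ["db-*"]))                     # False
```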

(On which Splunk components does the Splunk App for Enterprise Security place the most load?)

Indexers

Cluster Managers

Search Heads

Heavy Forwarders

According to Splunk’s Enterprise Security (ES) Installation and Sizing Guide, the majority of processing and computational load generated by the Splunk App for Enterprise Security is concentrated on the Search Head(s).

This is because Splunk ES is built around a search-driven correlation model: it continuously runs scheduled correlation searches, data model accelerations, and notable event generation. These operations rely heavily on the search head tier’s CPU, memory, and I/O resources. ES also performs extensive data model summarization, CIM normalization, and real-time alerting, all of which are search-intensive operations.

While indexers handle data ingestion and indexing, they are not heavily affected by ES beyond normal search request processing. The Cluster Manager only coordinates replication and plays no role in search execution, and Heavy Forwarders serve as data collection or parsing points with minimal analytical load.

Splunk officially recommends deploying ES on a dedicated Search Head Cluster (SHC) to isolate its high CPU and memory demands from other workloads. For large-scale environments, horizontal scaling via SHC ensures consistent performance and stability.

References (Splunk Enterprise Documentation):

• Splunk Enterprise Security Installation and Configuration Guide

• Search Head Sizing for Splunk Enterprise Security

• Enterprise Security Overview – Workload Distribution and Performance Impact

• Splunk Architecture and Capacity Planning for ES Deployments

When should a dedicated deployment server be used?

When there are more than 50 search peers.

When there are more than 50 apps to deploy to deployment clients.

When there are more than 50 deployment clients.

When there are more than 50 server classes.

A dedicated deployment server is a Splunk instance that manages the distribution of configuration updates and apps to a set of deployment clients, such as forwarders, indexers, or search heads. A dedicated deployment server should be used when there are more than 50 deployment clients, because this number exceeds the recommended limit for a non-dedicated deployment server. A non-dedicated deployment server is a Splunk instance that also performs other roles, such as indexing or searching. Using a dedicated deployment server can improve the performance, scalability, and reliability of the deployment process. Option C is the correct answer. Option A is incorrect because the number of search peers does not affect the need for a dedicated deployment server. Search peers are indexers that participate in a distributed search. Option B is incorrect because the number of apps to deploy does not affect the need for a dedicated deployment server. Apps are packages of configurations and assets that provide specific functionality or views in Splunk. Option D is incorrect because the number of server classes does not affect the need for a dedicated deployment server. Server classes are logical groups of deployment clients that share the same configuration updates and apps12.

1: https://docs.splunk.com/Documentation/Splunk/9.1.2/Updating/Aboutdeploymentserver 2: https://docs.splunk.com/Documentation/Splunk/9.1.2/Updating/Whentousedeploymentserver

A search head has successfully joined a single site indexer cluster. Which command is used to configure the same search head to join another indexer cluster?

splunk add cluster-config

splunk add cluster-master

splunk edit cluster-config

splunk edit cluster-master

The splunk add cluster-master command is used to configure the same search head to join another indexer cluster. A search head can search multiple indexer clusters by listing multiple cluster manager entries in its server.conf file. The splunk add cluster-master command adds a new entry to server.conf, specifying the URI (host name and management port) of the manager node of the other indexer cluster and its security key. The splunk edit cluster-config command is used for the search head's initial cluster configuration, such as setting the mode to searchhead and pointing it at the first cluster's manager, not for adding a second cluster. The splunk edit cluster-master command changes the settings of a cluster that the search head is already connected to, and splunk add cluster-config is not the command used for this task.
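For reference, the Python sketch below parses the kind of multi-cluster [clustering] stanza that ends up on such a search head, using the standard configparser module. The cluster labels, host names, and key are hypothetical placeholders, and the legacy master_uri and clustermaster: spellings are used; newer releases also accept manager-based names.

```python
import configparser

# Hypothetical search head server.conf after a second cluster has been added.
SEARCH_HEAD_CONF = """
[clustering]
mode = searchhead
master_uri = clustermaster:east, clustermaster:west

[clustermaster:east]
master_uri = https://cm-east.example.com:8089
pass4SymmKey = changeme

[clustermaster:west]
master_uri = https://cm-west.example.com:8089
pass4SymmKey = changeme
"""


def list_cluster_managers(text: str) -> dict:
    """Map each cluster label listed under [clustering] to its manager URI."""
    cfg = configparser.ConfigParser()
    cfg.read_string(text)
    labels = [label.strip() for label in cfg["clustering"]["master_uri"].split(",")]
    return {label: cfg[label]["master_uri"] for label in labels}


print(list_cluster_managers(SEARCH_HEAD_CONF))
```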

A customer has a Search Head Cluster (SHC) with site1 and site2. Site1 has five search heads and Site2 has four. Site1 search heads are preferred captains. What action should be taken on Site2 in a network failure between the sites?

Disable elections and set a static captain, then restart the cluster.

No action is required.

Set a dynamic captain manually and restart.

Disable elections and set a static captain, notifying all members.

Comprehensive and Detailed Explanation (From Splunk Enterprise Documentation)

Splunk’s Search Head Clustering documentation explains that the cluster uses a majority-based election system: a member becomes captain only if it wins votes from a majority (more than half) of all cluster members. In a two-site design where site1 holds the majority of members, Splunk states that the majority site continues normal operation during a network partition. The minority site (site2) cannot elect a captain and should not promote itself.

Splunk specifically warns administrators not to enable static captain on a minority site during a network split. Doing so creates two independent clusters, leading to configuration divergence and severe data-consistency issues. The documentation emphasizes that static captain should only be used for a complete loss of majority, not for a site partition.

Because Site1 holds the majority (five of nine members), it remains the active cluster, and no action is taken on Site2. Splunk states that minority-site members should simply wait until network communication is restored.

Thus the correct answer is B: No action is required.
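The majority arithmetic behind this answer can be checked with a short sketch. It assumes only the rule stated above (a captain needs votes from more than half of all members) and uses the member counts from the question.

```python
def majority(total_members: int) -> int:
    """Smallest vote count that is more than half of all cluster members."""
    return total_members // 2 + 1


def site_can_elect_captain(site_members: int, total_members: int) -> bool:
    """True if a site, cut off from the other site, still holds a majority."""
    return site_members >= majority(total_members)


total = 5 + 4  # site1 + site2 from the question
print(majority(total))                   # 5
print(site_can_elect_captain(5, total))  # site1: True
print(site_can_elect_captain(4, total))  # site2: False
```

With nine members, five votes are needed, so only Site1 can keep or elect a captain during the partition.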

What is needed to ensure that high-velocity sources will not have forwarding delays to the indexers?

Increase the default value of sessionTimeout in server.conf.

Increase the default limit for maxKBps in limits.conf.

Decrease the value of forceTimebasedAutoLB in outputs.conf.

Decrease the default value of phoneHomeIntervalInSecs in deploymentclient.conf.

To ensure that high-velocity sources do not have forwarding delays to the indexers, increase the default limit for maxKBps in limits.conf. This setting, in the [thruput] stanza, caps the bandwidth a forwarder uses to send data to the indexers. On a universal forwarder it defaults to 256 KBps, which may not be sufficient for high-volume data sources; a value of 0 removes the limit. Raising the limit can reduce forwarding latency, but it should be done with caution, because it increases network usage and indexer load. Option B is the correct answer. Option A is incorrect because the sessionTimeout parameter in server.conf controls how long an idle user session (Splunk Web or REST) stays valid, not the forwarding bandwidth. Option C is incorrect because forceTimebasedAutoLB in outputs.conf is a true/false setting that forces a forwarder to switch indexers at every autoLBFrequency interval; it affects load balancing, not the bandwidth limit. Option D is incorrect because the phoneHomeIntervalInSecs parameter in deploymentclient.conf controls how often a deployment client contacts the deployment server, not the bandwidth limit [1][2].
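A quick way to see why the 256 KBps default can cause delays is to convert the cap into a daily volume. This sketch assumes binary units (1 GB = 1024 x 1024 KB) and a perfectly steady rate; real sources are bursty, so a forwarder can fall behind even when its daily average is under the cap.

```python
SECONDS_PER_DAY = 86_400
KB_PER_GB = 1024 * 1024


def max_gb_per_day(max_kbps: float) -> float:
    """Upper bound on the daily volume a forwarder can send at a maxKBps cap."""
    return max_kbps * SECONDS_PER_DAY / KB_PER_GB


def required_kbps(gb_per_day: float) -> float:
    """Average rate needed to keep up with a source producing gb_per_day."""
    return gb_per_day * KB_PER_GB / SECONDS_PER_DAY


print(max_gb_per_day(256))       # 21.09375 GB/day at the universal forwarder default
print(required_kbps(50) > 256)   # True: a 50 GB/day source needs about 607 KBps
```

Any source above roughly 21 GB/day on a single universal forwarder will queue up behind the default cap, which is the delay this question describes.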

1: https://docs.splunk.com/Documentation/Splunk/9.1.2/Admin/Limitsconf#limits.conf.spec
2: https://docs.splunk.com/Documentation/Splunk/9.1.2/Forwarding/Routeandfilterdatad#Set_the_maximum_bandwidth_usage_for_a_forwarder

A Splunk architect has inherited the Splunk deployment at Buttercup Games and end users are complaining that the events are inconsistently formatted for a web source. Further investigation reveals that not all weblogs flow through the same infrastructure: some of the data goes through heavy forwarders and some of the forwarders are managed by another department.

Which of the following items might be the cause of this issue?

The search head may have different configurations than the indexers.

The data inputs are not properly configured across all the forwarders.

The indexers may have different configurations than the heavy forwarders.

The forwarders managed by the other department are an older version than the rest.

The indexers may have different configurations than the heavy forwarders, which might cause inconsistently formatted events for a web source. Parsing (line breaking, timestamp extraction, and index-time props.conf and transforms.conf rules) happens on the first full Splunk Enterprise instance the data reaches. Weblogs that pass through a heavy forwarder are parsed there, and the indexers do not parse them again; weblogs sent straight to the indexers are parsed by the indexers. If the heavy forwarders and the indexers have different props.conf or transforms.conf settings, the same source is parsed two different ways, which produces inconsistent events. The search head configurations do not affect index-time event formatting, as the search head does not parse or index the data. The data input configurations on the forwarders mainly determine what data to collect and how to monitor it, not how it is parsed. The forwarder version does not affect event formatting, as long as the forwarder is compatible with the indexers. For more information, see "Heavy forwarder versus indexer" and "Configure event processing" in the Splunk documentation.
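The "first full instance parses" rule can be modeled in a few lines to show why two data paths for the same source end up using two different sets of parsing configs. This is a simplified sketch: it treats universal forwarders as pass-through and ignores exceptions such as structured-data parsing on a universal forwarder; the tier names are illustrative labels.

```python
# Tiers that run the parsing pipeline in this simplified model.
PARSING_TIERS = {"heavy_forwarder", "indexer"}


def parsing_tier(path: list[str]) -> str:
    """Return the first hop on the data path whose props/transforms apply."""
    for hop in path:
        if hop in PARSING_TIERS:
            return hop
    raise ValueError("no parsing tier on this path")


via_hf = ["universal_forwarder", "heavy_forwarder", "indexer"]
direct = ["universal_forwarder", "indexer"]
print(parsing_tier(via_hf))  # heavy_forwarder
print(parsing_tier(direct))  # indexer
```

Because the two paths return different tiers, the weblogs stay consistent only if the heavy forwarders and indexers carry the same parsing configuration.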

Which of the following items are important sizing parameters when architecting a Splunk environment? (select all that apply)

Number of concurrent users.

Volume of incoming data.

Existence of premium apps.

Number of indexes.

Number of concurrent users: This is an important factor because it affects the search performance and resource utilization of the Splunk environment. More users mean more concurrent searches, which require more CPU, memory, and disk I/O. The number of concurrent users also determines the search head capacity and the search head clustering configuration [1][2].

Volume of incoming data: This is another crucial factor because it affects the indexing performance and storage requirements of the Splunk environment. More data means more indexing throughput, which requires more CPU, memory, and disk I/O. The volume of incoming data also determines the indexer capacity and the indexer clustering configuration [1][3].

Existence of premium apps: This is a relevant factor because some premium apps, such as Splunk Enterprise Security and Splunk IT Service Intelligence, have additional requirements and recommendations for the Splunk environment. For example, Splunk Enterprise Security requires a dedicated search head or search head cluster and a minimum of 12 CPU cores per search head. Splunk IT Service Intelligence requires a minimum of 16 CPU cores and 64 GB of RAM per search head [4][5].
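The way these parameters feed into a design can be sketched as a rough node-count estimate. The per-node capacities below are hypothetical inputs, not Splunk figures: real values should come from Splunk's capacity-planning guidance and be lowered when premium apps add search load.

```python
import math


def estimate_tier_sizes(daily_gb: float, concurrent_users: int,
                        gb_per_indexer: float,
                        users_per_search_head: int) -> tuple[int, int]:
    """Rough (indexers, search heads) counts from volume and concurrency.

    gb_per_indexer and users_per_search_head are placeholder capacities
    supplied by the architect; they are assumptions, not defaults.
    """
    indexers = math.ceil(daily_gb / gb_per_indexer)
    search_heads = math.ceil(concurrent_users / users_per_search_head)
    return indexers, search_heads


# Hypothetical inputs: 900 GB/day, 40 concurrent users,
# 300 GB/day per indexer, 16 users per search head.
print(estimate_tier_sizes(900, 40, 300, 16))  # (3, 3)
```

Lowering the hypothetical gb_per_indexer to reflect premium-app overhead raises the indexer count, which is why premium apps belong on the sizing list.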

What is the best way to configure and manage receiving ports for clustered indexers?

Use Splunk Web to create the receiving port on each peer node.

Define the receiving port in /etc/deployment-apps/cluster-app/local/inputs.conf and deploy it to the peer nodes.

Run the splunk enable listen command on each peer node.

Define the receiving port in /etc/manager-apps/_cluster/local/inputs.conf and push it to the peer nodes.

According to the Indexer Clustering Administration Guide, the most efficient and Splunk-recommended way to configure and manage receiving ports for all clustered indexers (peer nodes) is through the Cluster Manager (previously known as the Master Node).

In a clustered environment, configuration changes that affect all peer nodes—such as receiving port definitions—should be managed centrally. The correct procedure is to define the inputs configuration file (inputs.conf) within the Cluster Manager’s manager-apps directory. Specifically, the configuration is placed in:

$SPLUNK_HOME/etc/manager-apps/_cluster/local/inputs.conf

and then deployed to all peers using the configuration bundle push mechanism (splunk apply cluster-bundle, run on the Cluster Manager).

This centralized approach ensures consistency across all peer nodes, prevents manual configuration drift, and allows Splunk to maintain uniform ingestion behavior across the cluster.
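As an illustration of what goes into that file, this sketch builds a receiving-port stanza and the path it belongs at, relative to $SPLUNK_HOME on the Cluster Manager. Port 9997 is an assumption (a common convention, not something this question specifies), and the sketch only produces the text; pushing the bundle to the peers is still done from the Cluster Manager.

```python
import configparser
import io
from pathlib import PurePosixPath


def cluster_inputs_conf(port: int) -> tuple[PurePosixPath, str]:
    """Return (path under $SPLUNK_HOME, inputs.conf text) for a receiving port."""
    path = PurePosixPath("etc/manager-apps/_cluster/local/inputs.conf")
    conf = configparser.ConfigParser()
    # splunktcp:// defines a port that receives data from Splunk forwarders.
    conf[f"splunktcp://{port}"] = {"disabled": "0"}
    buf = io.StringIO()
    conf.write(buf)
    return path, buf.getvalue()


path, text = cluster_inputs_conf(9997)  # 9997 is an assumed port
print(path)
print(text)
```

Because the stanza lives in one file on the Cluster Manager, changing the port later means editing one place and pushing again, instead of touching every peer.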

Running splunk enable listen on each peer (Option C) or manually configuring inputs via Splunk Web (Option A) introduces inconsistencies and is not recommended in clustered deployments. Using the deployment-apps path (Option B) is meant for deployment servers, not for cluster management.

References (Splunk Enterprise Documentation):

• Indexer Clustering: Configure Peer Nodes via Cluster Manager

• Deploy Configuration Bundles from the Cluster Manager

• inputs.conf Reference – Receiving Data Configuration

• Splunk Enterprise Admin Manual – Managing Clustered Indexers

Which tool(s) can be leveraged to diagnose connection problems between an indexer and forwarder? (Select all that apply.)

telnet

tcpdump

splunk btool

splunk btprobe

The telnet and tcpdump tools can be leveraged to diagnose connection problems between an indexer and a forwarder. telnet tests connectivity and whether the indexer's receiving port is reachable from the forwarder. tcpdump captures the network traffic between the indexer and the forwarder for analysis. The splunk btool command checks the configuration files of the indexer and forwarder, for example to confirm which receiving port or outputs settings are in effect, but it cannot diagnose connection problems by itself. btprobe is a real Splunk utility (run as splunk cmd btprobe), but it inspects the fishbucket, Splunk's record of file-monitoring checkpoints, rather than network connections, so it is not a tool for this task.
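When telnet is not installed on a forwarder host, the same reachability check can be done with a few lines of Python. This sketch only opens and closes a TCP connection, just as a telnet test does; it does not speak the Splunk forwarding protocol, and the host name in the comment is a hypothetical example.

```python
import socket


def can_reach(host: str, port: int, timeout: float = 3.0) -> bool:
    """Open and immediately close a TCP connection, like `telnet host port`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unresolvable host, and so on
        return False


# Example (hypothetical indexer host and receiving port):
# can_reach("idx1.example.com", 9997)
```

A False result points to a network, firewall, or listener problem, which tcpdump can then help narrow down.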

Which of the following commands is used to clear the KV store?

splunk clean kvstore

splunk clear kvstore

splunk delete kvstore

splunk reinitialize kvstore

The splunk clean kvstore command is used to clear the KV store. Run it while Splunk is stopped; for example, splunk clean kvstore --local deletes all the collections and documents in the KV store on that instance and resets it to an empty state. This can be useful for troubleshooting KV store issues or resetting the KV store data. The splunk clear kvstore, splunk delete kvstore, and splunk reinitialize kvstore commands are not valid Splunk commands. For more information, see Use the CLI to manage the KV store in the Splunk documentation.

What is the expected minimum amount of storage required for data across an indexer cluster with the following input and parameters?

• Raw data = 15 GB per day

• Index files = 35 GB per day

• Replication Factor (RF) = 2

• Search Factor (SF) = 2

85 GB per day

50 GB per day

100 GB per day

65 GB per day

The correct answer is C. 100 GB per day. This is the expected minimum amount of storage required for data across an indexer cluster with the given input and parameters. Every copy of a bucket, searchable or not, contains the raw data, so the raw data size is multiplied by the Replication Factor; only searchable copies also contain the index files, so the index files size is multiplied by the Search Factor [1]. In this case, the calculation is:

(15 GB x 2) + (35 GB x 2) = 30 GB + 70 GB = 100 GB

Multiplying the combined size by both factors, (15 GB + 35 GB) x 2 x 2, would give 200 GB, because it counts every copy twice. The Replication Factor is the number of copies of each bucket that the cluster maintains across the set of peer nodes [2]. The Search Factor is the number of searchable copies of each bucket that the cluster maintains across the set of peer nodes [3]. The other options do not match the result of the calculation. Therefore, option C is the correct answer, and options A, B, and D are incorrect.
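The formula above is easy to encode, which also makes it simple to try other RF and SF values. This is a minimal sketch of the per-day estimate only; it ignores retention period, headroom, and other overhead that a real sizing exercise would add.

```python
def cluster_storage_gb(raw_gb: float, index_gb: float, rf: int, sf: int) -> float:
    """Daily cluster-wide storage estimate.

    Every replicated copy holds the raw data (x RF); only searchable
    copies also hold the index files (x SF).
    """
    if sf > rf:
        raise ValueError("search factor cannot exceed replication factor")
    return raw_gb * rf + index_gb * sf


print(cluster_storage_gb(15, 35, rf=2, sf=2))  # 100, as in the question
print(cluster_storage_gb(15, 35, rf=3, sf=2))  # 115
```

Raising RF only adds raw-data copies, so it costs less per step than raising SF, which adds both index files and search capacity.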

1: Estimate storage requirements
2: About indexer clusters and index replication
3: Configure the search factor

When planning user management for a new Splunk deployment, which task can be disregarded?

Identify users authenticating with Splunk native authentication.

Identify users authenticating with Splunk using LDAP or SAML.

Determine the number of users present in Splunk log events.

Determine the capabilities users need within the Splunk environment.

According to the Splunk Enterprise User Authentication and Authorization Guide, effective user management during deployment planning involves identifying how users will authenticate (native, LDAP, or SAML) and defining what roles and capabilities they will need to perform their tasks.

However, counting or analyzing the number of users who appear in Splunk log events (Option C) is not part of user management planning. This metric relates to audit and monitoring, not access provisioning or role assignment.

A proper user management plan should address:

Authentication method selection (native, LDAP, or SAML).

User mapping and provisioning workflows from existing identity stores.

Role-based access control (RBAC) — assigning users appropriate permissions via Splunk roles and capabilities.

Administrative governance — ensuring access policies align with compliance requirements.
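The mapping and RBAC items above boil down to two lookups: directory group to Splunk roles, and role to capabilities. This sketch models that planning step; the group names and the role-to-capability table are hypothetical and simplified (real roles can also inherit from other roles). In Splunk itself, LDAP group mappings live in authentication.conf and role capabilities in authorize.conf.

```python
# Hypothetical plan: directory groups mapped to Splunk roles.
GROUP_TO_ROLES = {
    "soc_analysts": ["user"],
    "soc_engineers": ["power"],
    "splunk_admins": ["admin"],
}

# Simplified role -> capability table (inheritance omitted).
ROLE_CAPABILITIES = {
    "user": {"search"},
    "power": {"search", "schedule_search"},
    "admin": {"search", "schedule_search", "admin_all_objects"},
}


def effective_capabilities(groups: list[str]) -> set[str]:
    """Union of capabilities from every role the user's groups map to."""
    caps: set[str] = set()
    for group in groups:
        for role in GROUP_TO_ROLES.get(group, []):
            caps |= ROLE_CAPABILITIES[role]
    return caps


print(sorted(effective_capabilities(["soc_analysts", "soc_engineers"])))
# ['schedule_search', 'search']
```

Capabilities combine additively across roles, so the plan only has to decide which roles each group needs, not every capability per user.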

Determining the number of users visible in log events provides no operational value when planning Splunk authentication or authorization architecture. Therefore, this task can be safely disregarded during initial planning.

References (Splunk Enterprise Documentation):

• User Authentication and Authorization in Splunk Enterprise

• Configuring LDAP and SAML Authentication

• Managing Users, Roles, and Capabilities

• Splunk Deployment Planning Manual – Security and Access Control Planning