A company is designing its serving layer for data that is in cloud storage. Multiple terabytes of the data will be used for reporting. Some data does not have a clear use case but could be useful for experimental analysis. This experimentation data changes frequently and is sometimes wiped out and replaced completely in a few days.

The company wants to centralize access control, provide a single point of connection for the end-users, and maintain data governance.

What solution meets these requirements while MINIMIZING costs, administrative effort, and development overhead?

Import the data used for reporting into a Snowflake schema with native tables. Then create external tables pointing to the cloud storage folders used for the experimentation data. Then create two different roles with grants to the different datasets to match the different user personas, and grant these roles to the corresponding users.

Import all the data in cloud storage to be used for reporting into a Snowflake schema with native tables. Then create a role that has access to this schema and manage access to the data through that role.

Import all the data in cloud storage to be used for reporting into a Snowflake schema with native tables. Then create two different roles with grants to the different datasets to match the different user personas, and grant these roles to the corresponding users.

Import the data used for reporting into a Snowflake schema with native tables. Then create views that have SELECT commands pointing to the cloud storage files for the experimentation data. Then create two different roles to match the different user personas, and grant these roles to the corresponding users.

The most cost-effective and administratively efficient solution (the first option) combines native and external tables. Native tables for the reporting data provide performance and governance, while external tables avoid repeatedly loading experimentation data that changes frequently or is replaced wholesale. Creating roles with specific grants on each dataset follows the principle of least privilege, centralizes access control, and simplifies user management [1][2].
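The setup described above can be sketched as a small Python helper that only assembles the Snowflake SQL statements; it does not connect to an account. All object names (`analytics`, `reporting`, `experiments`, `exp_stage`, `raw_events`, the role names, and the users) are hypothetical, and the Parquet file format is an assumption.

```python
def grant_statements(role, schema, object_clause, users):
    """Build the statements that create a read-only role and assign it to users."""
    database = schema.split(".")[0]
    stmts = [
        f"CREATE ROLE IF NOT EXISTS {role}",
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role}",
        f"GRANT USAGE ON SCHEMA {schema} TO ROLE {role}",
        f"GRANT SELECT ON {object_clause} TO ROLE {role}",
    ]
    stmts += [f"GRANT ROLE {role} TO USER {user}" for user in users]
    return stmts


def serving_layer_statements(reporting_users, experiment_users):
    """Return SQL for one external table plus one role per user persona."""
    # The experimentation files stay in cloud storage; only metadata lives in Snowflake.
    external_table = (
        "CREATE EXTERNAL TABLE analytics.experiments.raw_events "
        "WITH LOCATION = @analytics.experiments.exp_stage/events/ "
        "FILE_FORMAT = (TYPE = PARQUET)"
    )
    return (
        [external_table]
        # Reporting persona: native tables in the reporting schema.
        + grant_statements(
            "reporting_reader",
            "analytics.reporting",
            "ALL TABLES IN SCHEMA analytics.reporting",
            reporting_users,
        )
        # Experimentation persona: the external table only.
        + grant_statements(
            "experiment_reader",
            "analytics.experiments",
            "EXTERNAL TABLE analytics.experiments.raw_events",
            experiment_users,
        )
    )


if __name__ == "__main__":
    for stmt in serving_layer_statements(["alice"], ["bob"]):
        print(stmt + ";")
```

Keeping the grants in one helper mirrors the idea of a single, centrally managed access model: each persona gets exactly one role, and users only ever receive roles, never direct object grants.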

References

• [1] Snowflake Documentation on Optimizing Cost.

• [2] Snowflake Documentation on Controlling Cost.

Which of the following are characteristics of how row access policies can be applied to external tables? (Choose three.)

An external table can be created with a row access policy, and the policy can be applied to the VALUE column.

A row access policy can be applied to the VALUE column of an existing external table.

A row access policy cannot be directly added to a virtual column of an external table.

External tables are supported as mapping tables in a row access policy.

While cloning a database, both the row access policy and the external table will be cloned.

A row access policy cannot be applied to a view created on top of an external table.

The first three statements are true according to the Snowflake documentation. A row access policy filters rows based on user-defined conditions. It can be applied to an external table, which is a table that reads data from external files in a stage. However, there are some limitations and considerations for using row access policies with external tables.

An external table can be created with a row access policy by using the WITH ROW ACCESS POLICY clause in the CREATE EXTERNAL TABLE statement. The policy can be applied to the VALUE column, which contains the raw data from the external files as a VARIANT [1].

A row access policy can also be applied to the VALUE column of an existing external table by using the ALTER TABLE statement with the ADD ROW ACCESS POLICY clause [2].

A row access policy cannot be directly added to a virtual column of an external table. A virtual column is derived from the VALUE column using an expression. To filter on a virtual column's value, the policy must be applied to the VALUE column and the expression must be repeated in the policy definition [3].

External tables are not supported as mapping tables in a row access policy. A mapping table is a table that determines the access rights of users or roles based on some criteria [4].

While cloning a database, Snowflake clones the row access policy, but not the external table. Therefore, the policy in the cloned database refers to a table that is not present in the cloned database. To avoid this issue, the external table must be recreated in the cloned database [4].

A row access policy can be applied to a view created on top of an external table. The policy can be applied to the view itself or to the underlying external table. However, if the policy is applied to the view, the view must be a secure view, which is a view that hides the underlying data and the view definition from unauthorized users [5].
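The two supported ways of attaching a policy to an external table can be sketched as follows. This Python helper only builds the SQL text, and the policy condition (`val:region::STRING = 'EMEA'`), the JSON file format, and every object name passed in are hypothetical. Note that Snowflake's ALTER TABLE syntax spells the attaching clause as ADD ROW ACCESS POLICY.

```python
def row_access_policy_statements(policy, external_table, stage_path):
    """Build SQL for a policy on the VALUE column of an external table."""
    return {
        # The policy signature takes a VARIANT because VALUE is a VARIANT column.
        "policy": (
            f"CREATE ROW ACCESS POLICY {policy} AS (val VARIANT) "
            "RETURNS BOOLEAN -> val:region::STRING = 'EMEA'"
        ),
        # Option 1: attach the policy when the external table is created.
        "at_creation": (
            f"CREATE EXTERNAL TABLE {external_table} "
            f"WITH LOCATION = @{stage_path} "
            "FILE_FORMAT = (TYPE = JSON) "
            f"WITH ROW ACCESS POLICY {policy} ON (VALUE)"
        ),
        # Option 2: attach the policy to an external table that already exists.
        "on_existing": (
            f"ALTER TABLE {external_table} "
            f"ADD ROW ACCESS POLICY {policy} ON (VALUE)"
        ),
    }


if __name__ == "__main__":
    stmts = row_access_policy_statements(
        "governance.policies.region_rap",
        "lake.raw.events_ext",
        "lake.raw.events_stage/events/",
    )
    for name, sql in stmts.items():
        print(f"-- {name}\n{sql};")
```

In both variants the policy is bound to VALUE, never to a virtual column, which is why any virtual-column expression has to be repeated inside the policy body.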

CREATE EXTERNAL TABLE | Snowflake Documentation

ALTER EXTERNAL TABLE | Snowflake Documentation

Understanding Row Access Policies | Snowflake Documentation

Snowflake Data Governance: Row Access Policy Overview

Secure Views | Snowflake Documentation

What are characteristics of Dynamic Data Masking? (Select TWO).

A masking policy that is currently set on a table can be dropped.

A single masking policy can be applied to columns in different tables.

A masking policy can be applied to the value column of an external table.

The role that creates the masking policy will always see unmasked data in query results.

A masking policy can be applied to a column with the GEOGRAPHY data type.

Dynamic Data Masking masks sensitive data in query results based on the role of the user who executes the query. A masking policy is a schema-level object that defines the masking logic, and a single policy can be set on columns in different tables by using ALTER TABLE ... MODIFY COLUMN ... SET MASKING POLICY. A masking policy can also be applied to the VALUE column of an external table by running ALTER TABLE on the external table, although it cannot be attached to the external table's virtual columns. The other options are incorrect. A masking policy that is currently set on a column cannot be dropped until it has been unset from that column. The role that creates the masking policy does not always see unmasked data in query results, because the policy conditions apply to the owner role as well. A masking policy cannot be applied to a column with the GEOGRAPHY data type. References: Snowflake Documentation: Dynamic Data Masking, Snowflake Documentation: ALTER TABLE

An Architect with the ORGADMIN role wants to change a Snowflake account from an Enterprise edition to a Business Critical edition.

How should this be accomplished?

Run an ALTER ACCOUNT command and create a tag of EDITION and set the tag to Business Critical.

Use the account's ACCOUNTADMIN role to change the edition.

Failover to a new account in the same region and specify the new account's edition upon creation.

Contact Snowflake Support and request that the account's edition be changed.

To change the edition of a Snowflake account, an organization administrator (ORGADMIN) cannot directly alter the account settings through SQL commands or the Snowflake interface. The proper procedure is to contact Snowflake Support to request an edition change for the account. This ensures that the change is managed correctly and aligns with Snowflake’s operational protocols.

What considerations need to be taken when using database cloning as a tool for data lifecycle management in a development environment? (Select TWO).

Any pipes in the source are not cloned.

Any pipes in the source referring to internal stages are not cloned.

Any pipes in the source referring to external stages are not cloned.

The clone inherits all granted privileges of all child objects in the source object, including the database.

The clone inherits all granted privileges of all child objects in the source object, excluding the database.

A media company needs a data pipeline that will ingest customer review data into a Snowflake table, and apply some transformations. The company also needs to use Amazon Comprehend to do sentiment analysis and make the de-identified final data set available publicly for advertising companies who use different cloud providers in different regions.

The data pipeline needs to run continuously and efficiently as new records arrive in the object storage leveraging event notifications. Also, the operational complexity, maintenance of the infrastructure, including platform upgrades and security, and the development effort should be minimal.

Which design will meet these requirements?

Ingest the data using COPY INTO and use streams and tasks to orchestrate transformations. Export the data into Amazon S3 to do model inference with Amazon Comprehend and ingest the data back into a Snowflake table. Then create a listing in the Snowflake Marketplace to make the data available to other companies.

Ingest the data using Snowpipe and use streams and tasks to orchestrate transformations. Create an external function to do model inference with Amazon Comprehend and write the final records to a Snowflake table. Then create a listing in the Snowflake Marketplace to make the data available to other companies.

Ingest the data into Snowflake using Amazon EMR and PySpark using the Snowflake Spark connector. Apply transformations using another Spark job. Develop a python program to do model inference by leveraging the Amazon Comprehend text analysis API. Then write the results to a Snowflake table and create a listing in the Snowflake Marketplace to make the data available to other companies.

Ingest the data using Snowpipe and use streams and tasks to orchestrate transformations. Export the data into Amazon S3 to do model inference with Amazon Comprehend and ingest the data back into a Snowflake table. Then create a listing in the Snowflake Marketplace to make the data available to other companies.

The second design, which combines Snowpipe, streams and tasks, an external function, and a Marketplace listing, meets all the requirements for the data pipeline. Snowpipe enables continuous data loading into Snowflake from object storage using event notifications. It is serverless, so it does not require the user to provision or maintain any infrastructure. Streams and tasks enable automated data pipelines within Snowflake, using change data capture and scheduled execution, which keeps the transformation logic inside the platform. External functions invoke external services or APIs from within Snowflake, so they can be used to call Amazon Comprehend and perform sentiment analysis on the data without exporting it. The results can be written back to a Snowflake table using standard SQL commands. Snowflake Marketplace allows data providers to share data with data consumers across different accounts, regions, and cloud platforms, which makes it a secure and simple way to make the data publicly available to other companies.

Snowpipe Overview | Snowflake Documentation

Introduction to Data Pipelines | Snowflake Documentation

External Functions Overview | Snowflake Documentation

Snowflake Data Marketplace Overview | Snowflake Documentation

What integration object should be used to place restrictions on where data may be exported?

Stage integration

Security integration

Storage integration

API integration

In Snowflake, a storage integration is used to define and configure external cloud storage that Snowflake will interact with. This includes specifying security policies for access control. One of the main features of storage integrations is the ability to set restrictions on where data may be exported. This is done by binding the storage integration to specific cloud storage locations, thereby ensuring that Snowflake can only access those locations. It helps to maintain control over the data and complies with data governance and security policies by preventing unauthorized data exports to unspecified locations.
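The location binding described above can be illustrated with a small Python model of prefix-based allow and block lists, in the spirit of the `STORAGE_ALLOWED_LOCATIONS` and `STORAGE_BLOCKED_LOCATIONS` parameters of a storage integration. This is a simplified sketch and not Snowflake's implementation; the bucket names and the matching rule (blocked prefixes win over allowed ones) are assumptions made for illustration.

```python
def _as_prefix(location):
    """Normalize a location so that prefix checks respect path boundaries."""
    return location.rstrip("/") + "/"


def is_location_allowed(url, allowed_locations, blocked_locations=()):
    """Return True if url falls under an allowed prefix and no blocked prefix."""
    target = _as_prefix(url)

    def under(prefix):
        # '*' stands for "any location" in this sketch.
        return prefix == "*" or target.startswith(_as_prefix(prefix))

    if any(under(p) for p in blocked_locations):
        return False
    return any(under(p) for p in allowed_locations)


if __name__ == "__main__":
    allowed = ["s3://corp-exports/reports/"]          # hypothetical bucket
    blocked = ["s3://corp-exports/reports/private/"]  # hypothetical prefix
    for candidate in (
        "s3://corp-exports/reports/2024/",
        "s3://corp-exports/reports/private/hr/",
        "s3://other-bucket/dump/",
    ):
        print(candidate, is_location_allowed(candidate, allowed, blocked))
```

A stage that references the integration can then only point at locations that pass this kind of check, which is what makes the integration a control point for exports.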

A company has built a data pipeline using Snowpipe to ingest files from an Amazon S3 bucket. Snowpipe is configured to load data into staging database tables. Then a task runs to load the data from the staging database tables into the reporting database tables.

The company is satisfied with the availability of the data in the reporting database tables, but the reporting tables are not pruning effectively. Currently, a size 4X-Large virtual warehouse is being used to query all of the tables in the reporting database.

What step can be taken to improve the pruning of the reporting tables?

Eliminate the use of Snowpipe and load the files into internal stages using PUT commands.

Increase the size of the virtual warehouse to a size 5X-Large.

Use an ORDER BY on the clustering key columns when loading the reporting tables.

Create larger files for Snowpipe to ingest and ensure the staging frequency does not exceed 1 minute.

Effective pruning in Snowflake relies on how data is organized within micro-partitions. Sorting on the clustering key columns with an ORDER BY clause when loading the reporting tables co-locates similar values in the same micro-partitions. Snowflake can then skip irrelevant micro-partitions during a query, which improves query performance and reduces the amount of data scanned [1][2].
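The effect of load order on pruning can be illustrated with a toy Python model. It splits rows into fixed-size chunks that stand in for micro-partitions, keeps only each chunk's minimum and maximum value, and counts how many chunks a range filter has to read. The chunk size, row count, and filter range are arbitrary assumptions, and real micro-partitions are sized by data volume rather than row count.

```python
import random


def build_partitions(values, rows_per_partition):
    """Split rows into fixed-size chunks and record each chunk's (min, max)."""
    parts = []
    for start in range(0, len(values), rows_per_partition):
        chunk = values[start:start + rows_per_partition]
        parts.append((min(chunk), max(chunk)))
    return parts


def partitions_scanned(parts, low, high):
    """Count chunks whose [min, max] range overlaps the filter range [low, high]."""
    return sum(1 for part_min, part_max in parts if part_max >= low and part_min <= high)


random.seed(0)
values = [random.randrange(1_000_000) for _ in range(100_000)]

# Arrival order (as loaded without sorting) versus sorted on the filter column.
unsorted_parts = build_partitions(values, 1_000)
sorted_parts = build_partitions(sorted(values), 1_000)

unsorted_scanned = partitions_scanned(unsorted_parts, 500_000, 510_000)
sorted_scanned = partitions_scanned(sorted_parts, 500_000, 510_000)

if __name__ == "__main__":
    print("chunks scanned without ordering:", unsorted_scanned)
    print("chunks scanned with ordering:", sorted_scanned)
```

In the unordered case nearly every chunk spans almost the whole value range, so the min/max metadata cannot rule anything out; after sorting, only the few chunks that actually contain the requested range overlap the filter.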

References

• [2] Snowflake Documentation on micro-partitions and data clustering

• [1] Community article on recognizing unsatisfactory pruning and improving it

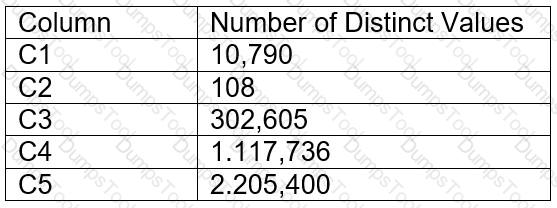

A table contains five columns and it has millions of records. The cardinality distribution of the columns is shown below:

Column C4 and C5 are mostly used by SELECT queries in the GROUP BY and ORDER BY clauses. Whereas columns C1, C2 and C3 are heavily used in filter and join conditions of SELECT queries.

The Architect must design a clustering key for this table to improve the query performance.

Based on Snowflake recommendations, how should the clustering key columns be ordered while defining the multi-column clustering key?

C5, C4, C2

C3, C4, C5

C1, C3, C2

C2, C1, C3

According to the Snowflake documentation, the following are some considerations for choosing clustering for a table [1]:

Clustering is optimal when either:

You require the fastest possible response times, regardless of cost.

Your improved query performance offsets the credits required to cluster and maintain the table.

Clustering is most effective when the clustering key is used in the following types of query predicates:

Filter predicates (e.g. WHERE clauses)

Join predicates (e.g. ON clauses)

Grouping predicates (e.g. GROUP BY clauses)

Sorting predicates (e.g. ORDER BY clauses)

Clustering is less effective when the clustering key is not used in any of the above query predicates, or when the clustering key is used in a predicate that requires a function or expression to be applied to the key (e.g. DATE_TRUNC, TO_CHAR, etc.).

For most tables, Snowflake recommends a maximum of 3 or 4 columns (or expressions) per key. Adding more than 3-4 columns tends to increase costs more than benefits.

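Based on these considerations, the best option for the clustering key columns is option C (C1, C3, C2). Snowflake also recommends ordering the columns of a multi-column key from lowest to highest cardinality. The reasons for choosing these columns are: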

These columns are heavily used in filter and join conditions of SELECT queries, which are the most effective types of predicates for clustering.

These columns have high cardinality, which means they have many distinct values and can help reduce the clustering skew and improve the compression ratio.

These columns are likely to be correlated with each other, which means they can help co-locate similar rows in the same micro-partitions and improve the scan efficiency.

These columns do not require any functions or expressions to be applied to them, which means they can be directly used in the predicates without affecting the clustering.
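The selection logic above can be sketched in Python: keep only the columns used in filter and join predicates, order them from lowest to highest cardinality as Snowflake recommends for multi-column keys, and cap the key at three columns. The distinct-value counts below are hypothetical placeholders, since the actual distribution is only given in the question's image.

```python
def order_clustering_key(cardinality, predicate_columns, max_columns=3):
    """Pick predicate columns and order them from lowest to highest cardinality."""
    candidates = [col for col in cardinality if col in predicate_columns]
    candidates.sort(key=lambda col: cardinality[col])
    return candidates[:max_columns]


# Hypothetical distinct-value counts, chosen only to illustrate the ordering rule.
cardinality = {"C1": 50, "C2": 90_000, "C3": 4_000, "C4": 12, "C5": 300_000}

# C1, C2 and C3 are the columns used in filter and join conditions.
key = order_clustering_key(cardinality, {"C1", "C2", "C3"})

if __name__ == "__main__":
    print("CLUSTER BY (" + ", ".join(key) + ")")
```

With different cardinalities the same function would return a different order, so the result depends entirely on the distribution shown in the question.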

1: Considerations for Choosing Clustering for a Table | Snowflake Documentation

Which technique will efficiently ingest and consume semi-structured data for Snowflake data lake workloads?

IDEF1X

Schema-on-write

Schema-on-read

Information schema

Option C is the correct answer because schema-on-read is a technique that allows Snowflake to ingest and consume semi-structured data without requiring a predefined schema. Snowflake supports various semi-structured data formats such as JSON, Avro, ORC, Parquet, and XML, and provides native data types (ARRAY, OBJECT, and VARIANT) for storing them. Snowflake also provides native support for querying semi-structured data using SQL and dot notation. Schema-on-read enables Snowflake to query semi-structured data at the same speed as performing relational queries while preserving the flexibility of schema-on-read. Snowflake’s near-instant elasticity rightsizes compute resources, and consumption-based pricing ensures you only pay for what you use.

Option A is incorrect because IDEF1X is a data modeling technique that defines the structure and constraints of relational data using diagrams and notations. IDEF1X is not suitable for ingesting and consuming semi-structured data, which does not have a fixed schema or structure.

Option B is incorrect because schema-on-write is a technique that requires defining a schema before loading and processing data. Schema-on-write is not efficient for ingesting and consuming semi-structured data, which may have varying or complex structures that are difficult to fit into a predefined schema. Schema-on-write also introduces additional overhead and complexity for data transformation and validation.

Option D is incorrect because information schema is a set of metadata views that provide information about the objects and privileges in a Snowflake database. Information schema is not a technique for ingesting and consuming semi-structured data, but rather a way of accessing metadata about the data.
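The schema-on-read idea can be illustrated outside Snowflake with a short Python sketch: raw JSON documents are stored unchanged, and a structure is imposed only when they are read. This is an analogy rather than Snowflake's implementation, and the sample records and the `read_with_schema` helper are hypothetical.

```python
import json

# Raw documents are stored as-is; their shapes differ from record to record.
raw_events = [
    '{"user": "u1", "rating": 5}',
    '{"user": "u2", "rating": 3, "device": {"os": "ios"}}',
    '{"user": "u3"}',
]


def read_with_schema(raw_rows, paths):
    """Parse each document at read time and project the requested paths.

    paths maps an output column name to a list of keys to follow;
    a path that is missing from a document yields None.
    """
    for raw in raw_rows:
        value_by_column = {}
        doc = json.loads(raw)
        for column, path in paths.items():
            value = doc
            for key in path:
                value = value.get(key) if isinstance(value, dict) else None
            value_by_column[column] = value
        yield value_by_column


rows = list(read_with_schema(raw_events, {"user": ["user"], "os": ["device", "os"]}))

if __name__ == "__main__":
    for row in rows:
        print(row)
```

No document was rejected or reshaped on ingestion; a new attribute such as `device` becomes queryable as soon as a reader asks for it, which is the flexibility that schema-on-write gives up.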

A media company needs a data pipeline that will ingest customer review data into a Snowflake table, and apply some transformations. The company also needs to use Amazon Comprehend to do sentiment analysis and make the de-identified final data set available publicly for advertising companies who use different cloud providers in different regions.

The data pipeline needs to run continuously and efficiently as new records arrive in the object storage leveraging event notifications. Also, the operational complexity, maintenance of the infrastructure, including platform upgrades and security, and the development effort should be minimal.

Which design will meet these requirements?

Ingest the data using COPY INTO and use streams and tasks to orchestrate transformations. Export the data into Amazon S3 to do model inference with Amazon Comprehend and ingest the data back into a Snowflake table. Then create a listing in the Snowflake Marketplace to make the data available to other companies.

Ingest the data using Snowpipe and use streams and tasks to orchestrate transformations. Create an external function to do model inference with Amazon Comprehend and write the final records to a Snowflake table. Then create a listing in the Snowflake Marketplace to make the data available to other companies.

Ingest the data into Snowflake using Amazon EMR and PySpark using the Snowflake Spark connector. Apply transformations using another Spark job. Develop a python program to do model inference by leveraging the Amazon Comprehend text analysis API. Then write the results to a Snowflake table and create a listing in the Snowflake Marketplace to make the data available to other companies.

Ingest the data using Snowpipe and use streams and tasks to orchestrate transformations. Export the data into Amazon S3 to do model inference with Amazon Comprehend and ingest the data back into a Snowflake table. Then create a listing in the Snowflake Marketplace to make the data available to other companies.

Option B is the best design to meet the requirements because it uses Snowpipe to ingest the data continuously and efficiently as new records arrive in the object storage, leveraging event notifications. Snowpipe is a service that automates the loading of data from external sources into Snowflake tables [1]. It also uses streams and tasks to orchestrate transformations on the ingested data. Streams are objects that store the change history of a table, and tasks are objects that execute SQL statements on a schedule or when triggered by another task [2]. Option B also uses an external function to do model inference with Amazon Comprehend and write the final records to a Snowflake table. An external function is a user-defined function that calls an external API, such as Amazon Comprehend, to perform computations that are not natively supported by Snowflake [3]. Finally, option B uses the Snowflake Marketplace to make the de-identified final data set available publicly for advertising companies who use different cloud providers in different regions. The Snowflake Marketplace is a platform that enables data providers to list and share their data sets with data consumers, regardless of the cloud platform or region they use [4].

Option A is not the best design because it uses COPY INTO to ingest the data, which is not as efficient and continuous as Snowpipe. COPY INTO is a SQL command that loads data from files into a table in a single transaction. It also exports the data into Amazon S3 to do model inference with Amazon Comprehend, which adds an extra step and increases the operational complexity and maintenance of the infrastructure.

Option C is not the best design because it uses Amazon EMR and PySpark to ingest and transform the data, which also increases the operational complexity and maintenance of the infrastructure. Amazon EMR is a cloud service that provides a managed Hadoop framework to process and analyze large-scale data sets. PySpark is a Python API for Spark, a distributed computing framework that can run on Hadoop. Option C also develops a python program to do model inference by leveraging the Amazon Comprehend text analysis API, which increases the development effort.

Option D is not the best design because it is identical to option A, except for the ingestion method. It still exports the data into Amazon S3 to do model inference with Amazon Comprehend, which adds an extra step and increases the operational complexity and maintenance of the infrastructure.

A company has several sites in different regions from which the company wants to ingest data.

Which of the following will enable this type of data ingestion?

The company must have a Snowflake account in each cloud region to be able to ingest data to that account.

The company must replicate data between Snowflake accounts.

The company should provision a reader account to each site and ingest the data through the reader accounts.

The company should use a storage integration for the external stage.

The last option is correct: a storage integration for the external stage lets the company ingest data from its different regions. A storage integration enables secure access to files in external cloud storage from Snowflake without embedding credentials. It can be used to create an external stage, which is a named location that references the files in the external storage. An external stage can be used to load data into Snowflake tables using the COPY INTO <table> command, or to unload data from Snowflake tables using the COPY INTO <location> command. External stages can reference storage in other regions and on other cloud platforms, as long as the storage service is supported by Snowflake [1][2].

Snowflake Documentation: Storage Integrations

Snowflake Documentation: External Stages

A healthcare company wants to share data with a medical institute. The institute is running a Standard edition of Snowflake; the healthcare company is running a Business Critical edition.

How can this data be shared?

The healthcare company will need to change the institute’s Snowflake edition in the accounts panel.

By default, sharing is supported from a Business Critical Snowflake edition to a Standard edition.

Contact Snowflake and they will execute the share request for the healthcare company.

Set the share_restriction parameter on the shared object to false.

By default, Snowflake does not allow sharing data from a Business Critical edition account to an account on a lower edition, because Business Critical provides enhanced security and data protection features that are not available in lower editions. The data provider can override this restriction by setting the share restriction parameter to false when adding the consumer account to the share (in Snowflake SQL, ALTER SHARE ... ADD ACCOUNTS = ... SHARE_RESTRICTIONS = false). Only the data provider can set this parameter, not the data consumer, and doing so may reduce the level of security and data protection for the shared data.

Enable Data Share: Business Critical Account to Lower Edition

Sharing Is Not Allowed From An Account on BUSINESS CRITICAL Edition to an Account On A Lower Edition

SQL Execution Error: Sharing is Not Allowed from an Account on BUSINESS CRITICAL Edition to an Account on a Lower Edition

Snowflake Editions | Snowflake Documentation

An Architect is using SnowCD to investigate a connectivity issue.

Which system function will provide a list of endpoints that the network must be able to access to use a specific Snowflake account, leveraging private connectivity?

SYSTEM$ALLOWLIST()

SYSTEM$GET_PRIVATELINK

SYSTEM$AUTHORIZE_PRIVATELINK

SYSTEM$ALLOWLIST_PRIVATELINK()

The SYSTEM$ALLOWLIST_PRIVATELINK function returns the hostnames and port numbers of the endpoints that must be reachable when a Snowflake account is accessed through private connectivity (such as AWS PrivateLink or Azure Private Link). Its output can be passed to SnowCD to verify connectivity to those endpoints. SYSTEM$ALLOWLIST returns the equivalent list for connections that do not use private connectivity, SYSTEM$GET_PRIVATELINK only reports whether private connectivity is authorized for the account, and SYSTEM$AUTHORIZE_PRIVATELINK enables it.

What is the MOST efficient way to design an environment where data retention is not considered critical, and customization needs are to be kept to a minimum?

Use a transient database.

Use a transient schema.

Use a transient table.

Use a temporary table.

Transient databases in Snowflake are designed for situations where data retention is not critical, and they do not have the fail-safe period that regular databases have. This means that data in a transient database is not recoverable after the Time Travel retention period. Using a transient database is efficient because it minimizes storage costs while still providing most functionalities of a standard database without the overhead of data protection features that are not needed when data retention is not a concern.

What are some of the characteristics of result set caches? (Choose three.)

Time Travel queries can be executed against the result set cache.

Snowflake persists the data results for 24 hours.

Each time persisted results for a query are used, a 24-hour retention period is reset.

The data stored in the result cache will contribute to storage costs.

The retention period can be reset for a maximum of 31 days.

The result set cache is not shared between warehouses.

In Snowflake, query results are persisted for 24 hours (B), each reuse of a persisted result resets that 24-hour retention period (C), and the resets can continue up to a maximum of 31 days from the time the query was first executed (E). The result set cache is designed to avoid re-executing an identical query within this window, which reduces compute usage and speeds up query responses. The cache is held in the cloud services layer rather than in an individual warehouse, so it is available across warehouses, and it does not contribute to storage costs.

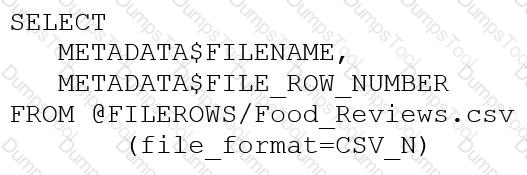

An Architect runs the following SQL query:

How can this query be interpreted?

FILEROWS is a stage. FILE_ROW_NUMBER is line number in file.

FILEROWS is the table. FILE_ROW_NUMBER is the line number in the table.

FILEROWS is a file. FILE_ROW_NUMBER is the file format location.

FILEROWS is the file format location. FILE_ROW_NUMBER is a stage.

A stage is a named location in Snowflake that can store files for data loading and unloading. A stage can be internal or external, depending on where the files are stored.

The query in the question uses the LIST function to list the files in a stage named FILEROWS. The function returns a table with various columns, including FILE_ROW_NUMBER, which is the line number of the file in the stage.

Therefore, the query can be interpreted as listing the files in a stage named FILEROWS and showing the line number of each file in the stage.

Stages

LIST Function

A company’s client application supports multiple authentication methods, and is using Okta.

What is the best practice recommendation for the order of priority when applications authenticate to Snowflake?

1) OAuth (either Snowflake OAuth or External OAuth); 2) External browser; 3) Okta native authentication; 4) Key Pair Authentication, mostly used for service account users; 5) Password

1) External browser, SSO; 2) Key Pair Authentication, mostly used for development environment users; 3) Okta native authentication; 4) OAuth (either Snowflake OAuth or External OAuth); 5) Password

1) Okta native authentication; 2) Key Pair Authentication, mostly used for production environment users; 3) Password; 4) OAuth (either Snowflake OAuth or External OAuth); 5) External browser, SSO

1) Password; 2) Key Pair Authentication, mostly used for production environment users; 3) Okta native authentication; 4) OAuth (either Snowflake OAuth or External OAuth); 5) External browser, SSO

The first option gives the best practice recommendation for the order of priority when applications authenticate to Snowflake, according to the Snowflake documentation. Authentication is the process of verifying the identity of a user or application that connects to Snowflake. Snowflake supports multiple authentication methods, each with different advantages and disadvantages. The recommended order of priority is based on the following factors:

Security: The authentication method should provide a high level of security and protection against unauthorized access or data breaches. The authentication method should also support multi-factor authentication (MFA) or single sign-on (SSO) for additional security.

Convenience: The authentication method should provide a smooth and easy user experience, without requiring complex or manual steps. The authentication method should also support seamless integration with external identity providers or applications.

Flexibility: The authentication method should provide a range of options and features to suit different use cases and scenarios. The authentication method should also support customization and configuration to meet specific requirements.

Based on these factors, the recommended order of priority is:

OAuth (either Snowflake OAuth or External OAuth): OAuth is an open standard for authorization that allows applications to access Snowflake resources on behalf of a user, without exposing the user’s credentials. OAuth provides a high level of security, convenience, and flexibility, as it supports MFA, SSO, token-based authentication, and various grant types and scopes. OAuth can be implemented using either Snowflake OAuth or External OAuth, depending on the identity provider and the application12.

External browser: External browser is an authentication method that allows users to log in to Snowflake using a web browser and an external identity provider, such as Okta, Azure AD, or Ping Identity. External browser provides a high level of security and convenience, as it supports MFA, SSO, and federated authentication. External browser also provides a consistent user interface and experience across different platforms and devices34.

Okta native authentication: Okta native authentication is an authentication method that allows users to log in to Snowflake using Okta as the identity provider, without using a web browser. Okta native authentication provides a high level of security and convenience, as it supports MFA, SSO, and federated authentication. Okta native authentication also provides a native user interface and experience for Okta users, and supports various Okta features, such as password policies and user management56.

Key Pair Authentication: Key Pair Authentication is an authentication method that allows users to log in to Snowflake using a public-private key pair, without using a password. Key Pair Authentication provides a high level of security, as it relies on asymmetric encryption and digital signatures. Key Pair Authentication also provides a flexible and customizable authentication option, as it supports various key formats, algorithms, and expiration times. Key Pair Authentication is mostly used for service account users, such as applications or scripts that connect to Snowflake programmatically7.

Password: Password is the simplest and most basic authentication method that allows users to log in to Snowflake using a username and password. Password provides the lowest level of security of the five methods, because a static shared secret is vulnerable to brute force attacks, credential stuffing, and phishing unless it is combined with MFA. Password also provides a low level of convenience and flexibility, as it requires manual input and management of the credential. Password is the least recommended authentication method, and should be used only as a last resort or for testing purposes.
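The ranking above can be read as a simple preference lookup. The following is a minimal sketch, not a Snowflake API: the method labels and the pick_auth_method helper are hypothetical names chosen for illustration, and the order is simply the one listed in this explanation.

```python
# Preference order taken from the explanation above (most preferred first).
# The string labels are illustrative only; they are not connector parameter values.
AUTH_PRIORITY = [
    "oauth",
    "external_browser",
    "okta_native",
    "key_pair",
    "password",
]


def pick_auth_method(supported):
    """Return the most preferred method that the application supports."""
    for method in AUTH_PRIORITY:
        if method in supported:
            return method
    raise ValueError("no supported authentication method")


if __name__ == "__main__":
    # A service that can only use key pair or password falls back to key pair.
    print(pick_auth_method({"password", "key_pair"}))
```

An application that supports several methods would call the helper once at startup and configure its connection accordingly.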

Snowflake Documentation: Snowflake OAuth

Snowflake Documentation: External OAuth

Snowflake Documentation: External Browser Authentication

Snowflake Blog: How to Use External Browser Authentication with Snowflake

Snowflake Documentation: Okta Native Authentication

Snowflake Blog: How to Use Okta Native Authentication with Snowflake

Snowflake Documentation: Key Pair Authentication

Snowflake Blog: How to Use Key Pair Authentication with Snowflake

Snowflake Documentation: Password Authentication

Snowflake Blog: How to Use Password Authentication with Snowflake

The IT Security team has identified that there is an ongoing credential stuffing attack on many of their organization’s systems.

What is the BEST way to find recent and ongoing login attempts to Snowflake?

Call the LOGIN_HISTORY Information Schema table function.

Query the LOGIN_HISTORY view in the ACCOUNT_USAGE schema in the SNOWFLAKE database.

View the History tab in the Snowflake UI and set up a filter for SQL text that contains the text "LOGIN".

View the Users section in the Account tab in the Snowflake UI and review the last login column.

The LOGIN_HISTORY view in the ACCOUNT_USAGE schema of the SNOWFLAKE database (Option B) can be used to query login attempts by Snowflake users within the last 365 days (1 year). It provides information such as the event timestamp, the user name, the client IP, the authentication method, the success or failure status, and the error code or message if the login attempt was unsuccessful. By querying this view, the IT Security team can identify any suspicious or malicious login attempts to Snowflake and take appropriate actions to prevent credential stuffing attacks1. Note that ACCOUNT_USAGE views are populated with some latency (up to about two hours for this view), so the most recent attempts can take time to appear. The other options are not the best ways to find recent and ongoing login attempts to Snowflake. Option A is incorrect because the LOGIN_HISTORY Information Schema table function only returns login events within the last 7 days, which may not be sufficient to detect credential stuffing attacks that span a longer period of time2. Option C is incorrect because the History tab in the Snowflake UI only shows the queries executed by the current user or role, not the login events of other users or roles3. Option D is incorrect because the Users section in the Account tab in the Snowflake UI only shows the last login time for each user, not the details of the login attempts or the failures.
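As an illustration of the kind of query the explanation describes, here is a minimal sketch that builds the SQL text for listing failed logins from the ACCOUNT_USAGE view. The helper name failed_login_query and the default 24-hour window are assumptions made for this example, and the sketch assumes failed attempts are recorded with IS_SUCCESS = 'NO'; it only produces a string and does not connect to Snowflake.

```python
def failed_login_query(hours_back=24):
    """Build SQL that lists failed login attempts in the last `hours_back` hours."""
    if hours_back <= 0:
        raise ValueError("hours_back must be positive")
    return (
        "SELECT event_timestamp, user_name, client_ip,\n"
        "       first_authentication_factor, error_code, error_message\n"
        "FROM snowflake.account_usage.login_history\n"
        # Assumption: failed attempts are flagged with the text value 'NO'.
        "WHERE is_success = 'NO'\n"
        f"  AND event_timestamp >= DATEADD(hour, -{int(hours_back)}, CURRENT_TIMESTAMP())\n"
        "ORDER BY event_timestamp DESC"
    )


if __name__ == "__main__":
    print(failed_login_query(6))
```

Grouping the result by client_ip or user_name would be a natural next step when looking for credential stuffing patterns.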

When using the COPY INTO <table> command with the CSV file format, how does the MATCH_BY_COLUMN_NAME parameter behave?

It expects a header to be present in the CSV file, which is matched to a case-sensitive table column name.

The parameter will be ignored.

The command will return an error.

The command will return a warning stating that the file has unmatched columns.

Comprehensive and Detailed Explanation From Exact Extract:

The MATCH_BY_COLUMN_NAME parameter in the COPY INTO <table> command is used to load semi-structured or structured data, such as CSV, into columns of the target table by matching column names in the data file with those in the table. For CSV files, this parameter requires specific conditions to be met, particularly the presence of a header row in the file, which is used to map columns to the target table.

According to the official Snowflake documentation, when the MATCH_BY_COLUMN_NAME parameter is used with CSV files, it is only supported in specific scenarios and requires the PARSE_HEADER file format option to be set to TRUE. This option indicates that the first row of the CSV file contains column headers, which Snowflake uses to match with the target table's column names. The matching behavior can be configured as CASE_SENSITIVE or CASE_INSENSITIVE, but the default behavior is case-sensitive unless specified otherwise.

However, there is a critical limitation when using MATCH_BY_COLUMN_NAME with CSV files: as of the latest Snowflake documentation, this feature is in Open Private Preview for CSV files and is not generally available for all accounts. When the MATCH_BY_COLUMN_NAME parameter is specified for a CSV file in an environment where this feature is not enabled, or if the PARSE_HEADER option is not set to TRUE, the COPY INTO command will return an error. This is because Snowflake cannot process the column name matching without the header parsing capability, which is not fully supported for CSV files in general availability.

The exact extract from the Snowflake documentation states:

"For loading CSV files, the MATCH_BY_COLUMN_NAME copy option is available in preview. It requires the use of the above-mentioned CSV file format option PARSE_HEADER = TRUE."

Additionally, the documentation clarifies:

"Boolean that specifies whether to use the first row headers in the data files to determine column names. This file format option is applied to the following actions only: Automatically detecting column definitions by using the INFER_SCHEMA function. Loading CSV data into separate columns by using the INFER_SCHEMA function and MATCH_BY_COLUMN_NAME copy option."

Furthermore, a known issue is noted:

"For CSV only, there is a known issue when the INCLUDE_METADATA copy option is used with MATCH_BY_COLUMN_NAME. Do not use this copy option when loading CSV files until the known issue is resolved."

Given that the MATCH_BY_COLUMN_NAME parameter is not fully supported for CSV files in general availability and requires specific preview conditions, attempting to use it without meeting those conditions, such as PARSE_HEADER = TRUE or enabling the preview feature, results in an error. Therefore, option C is correct: The command will return an error.

Option A is incorrect because, while MATCH_BY_COLUMN_NAME expects a header in the CSV file for matching when the feature is enabled, the case-sensitive matching is only true when explicitly set to CASE_SENSITIVE. Additionally, the feature's limited availability means it is not guaranteed to work without causing an error. Option B is incorrect because the parameter is not simply ignored; it triggers an error if the conditions are not met. Option D is incorrect because Snowflake does not issue a warning for unmatched columns in this context; it fails with an error when the parameter is unsupported or misconfigured.
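To make the difference between case-sensitive and case-insensitive header matching concrete, the following toy simulation maps CSV header names to table column names. It is a sketch of the idea only, not Snowflake's implementation; the function name match_header and the sample column names are hypothetical.

```python
def match_header(header, table_columns, case_sensitive=True):
    """Map each CSV header name to a table column name, or None if unmatched."""
    if case_sensitive:
        lookup = {col: col for col in table_columns}
        return {name: lookup.get(name) for name in header}
    # Case-insensitive: compare upper-cased names on both sides.
    lookup = {col.upper(): col for col in table_columns}
    return {name: lookup.get(name.upper()) for name in header}


if __name__ == "__main__":
    header = ["id", "NAME"]          # first row of a hypothetical CSV file
    columns = ["ID", "NAME"]         # hypothetical target table columns
    print(match_header(header, columns, case_sensitive=True))
    print(match_header(header, columns, case_sensitive=False))
```

With case-sensitive matching the lowercase id header finds no column, while case-insensitive matching maps it to ID.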

An Architect is designing a solution that will be used to process changed records in an orders table. Newly-inserted orders must be loaded into the f_orders fact table, which will aggregate all the orders by multiple dimensions (time, region, channel, etc.). Existing orders can be updated by the sales department within 30 days after the order creation. In case of an order update, the solution must perform two actions:

1. Update the order in the F_ORDERS fact table.

2. Load the changed order data into the special table ORDER_REPAIRS.

This table is used by the Accounting department once a month. If the order has been changed, the Accounting team needs to know the latest details and perform the necessary actions based on the data in the order_repairs table.

What data processing logic design will be the MOST performant?

Use one stream and one task.

Use one stream and two tasks.

Use two streams and one task.

Use two streams and two tasks.

The most performant design for processing changed records, considering the need to both update records in the f_orders fact table and load changes into the order_repairs table, is to use one stream and two tasks. The stream will monitor changes in the orders table, capturing both inserts and updates. The first task would apply these changes to the f_orders fact table, ensuring all dimensions are accurately represented. The second task would use the same stream to insert relevant changes into the order_repairs table, which is critical for the Accounting department's monthly review. This method ensures efficient processing by minimizing the overhead of managing multiple streams and synchronizing between them, while also allowing specific tasks to optimize for their target operations.
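The routing rule stated in the question (every change reaches the fact table, and only updates also reach the repairs table) can be sketched in plain Python. This is a simplified illustration, not stream or task code: the record layout with the keys order and is_update, and the function name route_changes, are hypothetical.

```python
def route_changes(changes):
    """Split change records into rows for the fact table and the repairs table."""
    fact_rows, repair_rows = [], []
    for change in changes:
        # Every new or updated order is applied to the fact table.
        fact_rows.append(change["order"])
        # Only updated orders are also recorded for the Accounting team.
        if change["is_update"]:
            repair_rows.append(change["order"])
    return fact_rows, repair_rows


if __name__ == "__main__":
    changes = [
        {"order": {"id": 1, "amount": 100}, "is_update": False},
        {"order": {"id": 2, "amount": 250}, "is_update": True},
    ]
    fact, repairs = route_changes(changes)
    print(len(fact), len(repairs))
```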

Which Snowflake architecture recommendation needs multiple Snowflake accounts for implementation?

Enable a disaster recovery strategy across multiple cloud providers.

Create external stages pointing to cloud providers and regions other than the region hosting the Snowflake account.

Enable zero-copy cloning among the development, test, and production environments.

Enable separation of the development, test, and production environments.

The Snowflake architecture recommendation that necessitates multiple Snowflake accounts for implementation is the separation of development, test, and production environments. This approach, known as Account per Tenant (APT), isolates tenants into separate Snowflake accounts, ensuring dedicated resources and security isolation12.

References

• Snowflake’s white paper on “Design Patterns for Building Multi-Tenant Applications on Snowflake” discusses the APT model and its requirement for separate Snowflake accounts for each tenant1.

• Snowflake Documentation on Secure Data Sharing, which mentions the possibility of sharing data across multiple accounts3.

A global company needs to securely share its sales and inventory data with a vendor using a Snowflake account.

The company has its Snowflake account in the AWS eu-west-2 Europe (London) region. The vendor's Snowflake account is on the Azure platform in the West Europe region. How should the company's Architect configure the data share?

1. Create a share. 2. Add objects to the share. 3. Add a consumer account to the share for the vendor to access.

1. Create a share. 2. Create a reader account for the vendor to use. 3. Add the reader account to the share.

1. Create a new role called db_share. 2. Grant the db_share role privileges to read data from the company database and schema. 3. Create a user for the vendor. 4. Grant the ds_share role to the vendor's users.

1. Promote an existing database in the company's local account to primary. 2. Replicate the database to Snowflake on Azure in the West-Europe region. 3. Create a share and add objects to the share. 4. Add a consumer account to the share for the vendor to access.

The correct way to securely share data with a vendor using a Snowflake account on a different cloud platform and region is to create a share, add objects to the share, and add a consumer account to the share for the vendor to access. This way, the company can control what data is shared, who can access it, and how long the share is valid. The vendor can then query the shared data without copying or moving it to their own account. The other options are either incorrect or inefficient, as they involve creating unnecessary reader accounts, users, roles, or database replication.

https://learn.snowflake.com/en/certifications/snowpro-advanced-architect/

A company is using Snowflake in Azure in the Netherlands. The company analyst team also has data in JSON format that is stored in an Amazon S3 bucket in the AWS Singapore region that the team wants to analyze.

The Architect has been given the following requirements:

1. Provide access to frequently changing data

2. Keep egress costs to a minimum

3. Maintain low latency

How can these requirements be met with the LEAST amount of operational overhead?

Use a materialized view on top of an external table against the S3 bucket in AWS Singapore.

Use an external table against the S3 bucket in AWS Singapore and copy the data into transient tables.

Copy the data between providers from S3 to Azure Blob storage to collocate, then use Snowpipe for data ingestion.

Use AWS Transfer Family to replicate data between the S3 bucket in AWS Singapore and an Azure Netherlands Blob storage, then use an external table against the Blob storage.

Option A is the best design to meet the requirements because it uses a materialized view on top of an external table against the S3 bucket in AWS Singapore. A materialized view is a database object that contains the results of a query and can be refreshed periodically to reflect changes in the underlying data1. An external table is a table that references data files stored in a cloud storage service, such as Amazon S32. By using a materialized view on top of an external table, the company can provide access to frequently changing data, keep egress costs to a minimum, and maintain low latency. This is because the materialized view will cache the query results in Snowflake, reducing the need to access the external data files and incur network charges. The materialized view will also improve the query performance by avoiding scanning the external data files every time. The materialized view can be refreshed on a schedule or on demand to capture the changes in the external data files1.

Option B is not the best design because it uses an external table against the S3 bucket in AWS Singapore and copies the data into transient tables. A transient table is a table that has no Fail-safe period and at most one day of Time Travel, and it persists until it is explicitly dropped3. By using an external table and copying the data into transient tables, the company will incur more egress costs and operational overhead than using a materialized view. This is because the external table will access the external data files every time a query is executed, and the copy operation will also transfer data from S3 to Snowflake. The transient tables will also consume more storage space in Snowflake and require manual maintenance to ensure they are up to date.

Option C is not the best design because it copies the data between providers from S3 to Azure Blob storage to collocate, then uses Snowpipe for data ingestion. Snowpipe is a service that automates the loading of data from external sources into Snowflake tables4. By copying the data between providers, the company will incur high egress costs and latency, as well as operational complexity and maintenance of the infrastructure. Snowpipe will also add another layer of processing and storage in Snowflake, which may not be necessary if the external data files are already in a queryable format.

Option D is not the best design because it uses AWS Transfer Family to replicate data between the S3 bucket in AWS Singapore and an Azure Netherlands Blob storage, then uses an external table against the Blob storage. AWS Transfer Family is a service that enables secure and seamless transfer of files over SFTP, FTPS, and FTP to and from Amazon S3 or Amazon EFS5. By using AWS Transfer Family, the company will incur high egress costs and latency, as well as operational complexity and maintenance of the infrastructure. The external table will also access the external data files every time a query is executed, which may affect the query performance.

A company wants to deploy its Snowflake accounts inside its corporate network with no visibility on the internet. The company is using a VPN infrastructure and Virtual Desktop Infrastructure (VDI) for its Snowflake users. The company also wants to re-use the login credentials set up for the VDI to eliminate redundancy when managing logins.

What Snowflake functionality should be used to meet these requirements? (Choose two.)

Set up replication to allow users to connect from outside the company VPN.

Provision a unique company Tri-Secret Secure key.

Use private connectivity from a cloud provider.

Set up SSO for federated authentication.

Use a proxy Snowflake account outside the VPN, enabling client redirect for user logins.

According to the SnowPro Advanced: Architect documents and learning resources, the Snowflake features that should be used to meet these requirements are:

Use private connectivity from a cloud provider. This feature allows customers to connect to Snowflake from their own private network without exposing their data to the public Internet. Snowflake integrates with AWS PrivateLink, Azure Private Link, and Google Cloud Private Service Connect to offer private connectivity from customers’ VPCs or VNets to Snowflake endpoints. Customers can control how traffic reaches the Snowflake endpoint and avoid the need for proxies or public IP addresses123.

Set up SSO for federated authentication. This feature allows customers to use their existing identity provider (IdP) to authenticate users for SSO access to Snowflake. Snowflake supports most SAML 2.0-compliant vendors as an IdP, including Okta, Microsoft AD FS, Google G Suite, Microsoft Azure Active Directory, OneLogin, Ping Identity, and PingOne. By setting up SSO for federated authentication, customers can leverage their existing user credentials and profile information, and provide stronger security than username/password authentication4.

The other options are incorrect because they do not meet the requirements or are not feasible.

Option A is incorrect because setting up replication does not allow users to connect from outside the company VPN. Replication is a feature of Snowflake that enables copying databases across accounts in different regions and cloud platforms. Replication does not affect the connectivity or visibility of the accounts5.

Option B is incorrect because provisioning a unique company Tri-Secret Secure key does not affect the network or authentication requirements. Tri-Secret Secure combines a customer-managed key, held in the cloud provider's key management service, with a Snowflake-maintained key to form a composite master key that protects data at rest. Tri-Secret Secure provides an additional layer of security and control over the data encryption and decryption process, but it does not enable private connectivity or SSO6.

Option E is incorrect because using a proxy Snowflake account outside the VPN, enabling client redirect for user logins, is not a supported or recommended way of meeting the requirements. Client redirect is a feature of Snowflake that lets clients use a single connection URL that can be redirected to a different Snowflake account. This feature is useful for scenarios such as cross-region failover and account migration, but it does not provide private connectivity or SSO7.

References: AWS PrivateLink & Snowflake | Snowflake Documentation, Azure Private Link & Snowflake | Snowflake Documentation, Google Cloud Private Service Connect & Snowflake | Snowflake Documentation, Overview of Federated Authentication and SSO | Snowflake Documentation, Replicating Databases Across Multiple Accounts | Snowflake Documentation, Tri-Secret Secure | Snowflake Documentation, Redirecting Client Connections | Snowflake Documentation

An Architect is troubleshooting a query with poor performance using the QUERY_HISTORY function. The Architect observes that the COMPILATION_TIME is greater than the EXECUTION_TIME.

What is the reason for this?

The query is processing a very large dataset.

The query has overly complex logic.

The query is queued for execution.

The query is reading from remote storage.

The correct answer is B because the compilation time is the time it takes for the optimizer to create an optimal query plan for the efficient execution of the query. The compilation time depends on the complexity of the query, such as the number of tables, columns, joins, filters, aggregations, subqueries, etc. The more complex the query, the longer it takes to compile.

Option A is incorrect because the size of the dataset mainly drives execution time, which also depends on the size of the virtual warehouse that runs the query. Compilation happens before the data itself is scanned, so a large dataset by itself is unlikely to explain a compilation time that exceeds the execution time.

Option C is incorrect because the query queue time is not part of the compilation time or the execution time. It is a separate metric that indicates how long the query waits for a warehouse slot before it starts running. The query queue time depends on the warehouse load, concurrency, and priority settings.

Option D is incorrect because the query remote IO time is not part of the compilation time or the execution time. It is a separate metric that indicates how long the query spends reading data from remote storage, such as S3 or Azure Blob Storage. The query remote IO time depends on the network latency, bandwidth, and caching efficiency. References:

Understanding Why Compilation Time in Snowflake Can Be Higher than Execution Time: This article explains why the total duration (compilation + execution) time is an essential metric to measure query performance in Snowflake. It discusses the reasons for the long compilation time, including query complexity and the number of tables and columns.

Exploring Execution Times: This document explains how to examine the past performance of queries and tasks using Snowsight or by writing queries against views in the ACCOUNT_USAGE schema. It also describes the different metrics and dimensions that affect query performance, such as duration, compilation, execution, queue, and remote IO time.

What is the “compilation time” and how to optimize it?: This community post provides some tips and best practices on how to reduce the compilation time, such as simplifying the query logic, using views or common table expressions, and avoiding unnecessary columns or joins.
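The comparison described in this question can be sketched as a small filter over query-history rows. The example below is illustrative only: the rows are made-up sample data, the dictionary keys mirror the COMPILATION_TIME and EXECUTION_TIME columns mentioned in the question (assumed here to be in milliseconds), and compile_heavy is a hypothetical helper name.

```python
def compile_heavy(rows):
    """Return the IDs of queries whose compilation time exceeds execution time."""
    return [
        row["query_id"]
        for row in rows
        if row["compilation_time"] > row["execution_time"]
    ]


if __name__ == "__main__":
    # Made-up sample rows; times are assumed to be in milliseconds.
    sample = [
        {"query_id": "q1", "compilation_time": 4200, "execution_time": 900},
        {"query_id": "q2", "compilation_time": 150, "execution_time": 12000},
    ]
    print(compile_heavy(sample))
```

Queries flagged this way are candidates for simplification, for example by reducing the number of joined objects or nested views.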

A large manufacturing company runs a dozen individual Snowflake accounts across its business divisions. The company wants to increase the level of data sharing to support supply chain optimizations and increase its purchasing leverage with multiple vendors.

The company’s Snowflake Architects need to design a solution that would allow the business divisions to decide what to share, while minimizing the level of effort spent on configuration and management. Most of the company divisions use Snowflake accounts in the same cloud deployments with a few exceptions for European-based divisions.

According to Snowflake recommended best practice, how should these requirements be met?

Migrate the European accounts in the global region and manage shares in a connected graph architecture. Deploy a Data Exchange.

Deploy a Private Data Exchange in combination with data shares for the European accounts.

Deploy to the Snowflake Marketplace making sure that invoker_share() is used in all secure views.

Deploy a Private Data Exchange and use replication to allow European data shares in the Exchange.

According to Snowflake recommended best practice, the requirements of the large manufacturing company should be met by deploying a Private Data Exchange in combination with data shares for the European accounts. A Private Data Exchange is a feature of the Snowflake Data Cloud platform that enables secure and governed sharing of data between organizations. It allows Snowflake customers to create their own data hub and invite other parts of their organization or external partners to access and contribute data sets. A Private Data Exchange provides centralized management, granular access control, and data usage metrics for the data shared in the exchange1. A data share is a secure and direct way of sharing data between Snowflake accounts without having to copy or move the data. A data share allows the data provider to grant privileges on selected objects in their account to one or more data consumers in other accounts2. By using a Private Data Exchange in combination with data shares, the company can achieve the following benefits:

The business divisions can decide what data to share and publish it to the Private Data Exchange, where it can be discovered and accessed by other members of the exchange. This reduces the effort and complexity of managing multiple data sharing relationships and configurations.

The company can leverage the existing Snowflake accounts in the same cloud deployments to create the Private Data Exchange and invite the members to join. This minimizes the migration and setup costs and leverages the existing Snowflake features and security.

The company can use data shares to share data with the European accounts that are in different regions or cloud platforms. This allows the company to comply with the regional and regulatory requirements for data sovereignty and privacy, while still enabling data collaboration across the organization.

The company can use the Snowflake Data Cloud platform to perform data analysis and transformation on the shared data, as well as integrate with other data sources and applications. This enables the company to optimize its supply chain and increase its purchasing leverage with multiple vendors.

At which object type level can the APPLY MASKING POLICY, APPLY ROW ACCESS POLICY and APPLY SESSION POLICY privileges be granted?

Global

Database

Schema

Table

The object type level at which the APPLY MASKING POLICY, APPLY ROW ACCESS POLICY and APPLY SESSION POLICY privileges can be granted is global. These are account-level privileges that control who can apply or unset these policies on objects such as columns, tables, views, accounts, or users. These privileges are granted to the ACCOUNTADMIN role by default, and can be granted to other roles as needed. The other options are incorrect because they are not the object type level at which these privileges can be granted. Database, schema, and table are lower-level object types that do not support these privileges. References: Access Control Privileges | Snowflake Documentation, Using Dynamic Data Masking | Snowflake Documentation, Using Row Access Policies | Snowflake Documentation, Using Session Policies | Snowflake Documentation
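Because these three privileges are granted at the account (global) level, the corresponding GRANT statements all use ON ACCOUNT. The sketch below only generates the statement text; the role name policy_admin and the helper account_level_grants are hypothetical and chosen for illustration.

```python
# The three privileges named in the question, all granted on the account.
POLICY_PRIVILEGES = [
    "APPLY MASKING POLICY",
    "APPLY ROW ACCESS POLICY",
    "APPLY SESSION POLICY",
]


def account_level_grants(role):
    """Build one account-level GRANT statement per policy privilege."""
    return [
        f"GRANT {privilege} ON ACCOUNT TO ROLE {role};"
        for privilege in POLICY_PRIVILEGES
    ]


if __name__ == "__main__":
    # policy_admin is a hypothetical custom role.
    for statement in account_level_grants("policy_admin"):
        print(statement)
```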

A company's Architect needs to find an efficient way to get data from an external partner, who is also a Snowflake user. The current solution is based on daily JSON extracts that are placed on an FTP server and uploaded to Snowflake manually. The files are changed several times each month, and the ingestion process needs to be adapted to accommodate these changes.

What would be the MOST efficient solution?

Ask the partner to create a share and add the company's account.

Ask the partner to use the data lake export feature and place the data into cloud storage where Snowflake can natively ingest it (schema-on-read).

Keep the current structure but request that the partner stop changing files, instead only appending new files.

Ask the partner to set up a Snowflake reader account and use that account to get the data for ingestion.

The most efficient solution is to ask the partner to create a share and add the company’s account (Option A). This way, the company can access the live data from the partner without any data movement or manual intervention. Snowflake’s secure data sharing feature allows data providers to share selected objects in a database with other Snowflake accounts. The shared data is read-only and does not incur any storage or compute costs for the data consumers. The data consumers can query the shared data directly or create local copies of the shared objects in their own databases. Option B is not efficient because it involves using the data lake export feature, which is intended for exporting data from Snowflake to an external data lake, not for importing data from another Snowflake account. The data lake export feature also requires the data provider to create an external stage on cloud storage and use the COPY INTO

Introduction to Secure Data Sharing | Snowflake Documentation

Data Lake Export Public Preview Is Now Available on Snowflake | Snowflake Blog

Managing Reader Accounts | Snowflake Documentation

Which feature provides the capability to define an alternate cluster key for a table with an existing cluster key?

External table

Materialized view

Search optimization

Result cache

A materialized view is a feature that provides the capability to define an alternate cluster key for a table with an existing cluster key. A materialized view is a pre-computed result set that is stored in Snowflake and can be queried like a regular table. A materialized view can have a different cluster key than the base table, which can improve the performance and efficiency of queries on the materialized view. A materialized view can also apply filters and a limited set of aggregations to the base table data, although it can query only a single table (joins are not supported). Snowflake maintains a materialized view automatically in the background when the underlying data in the base table changes1.

Materialized Views | Snowflake Documentation

A Data Engineer is designing a near real-time ingestion pipeline for a retail company to ingest event logs into Snowflake to derive insights. A Snowflake Architect is asked to define security best practices to configure access control privileges for the data load for auto-ingest to Snowpipe.

What are the MINIMUM object privileges required for the Snowpipe user to execute Snowpipe?

OWNERSHIP on the named pipe, USAGE on the named stage, target database, and schema, and INSERT and SELECT on the target table

OWNERSHIP on the named pipe, USAGE and READ on the named stage, USAGE on the target database and schema, and INSERT and SELECT on the target table

CREATE on the named pipe, USAGE and READ on the named stage, USAGE on the target database and schema, and INSERT and SELECT on the target table

USAGE on the named pipe, named stage, target database, and schema, and INSERT and SELECT on the target table

According to the SnowPro Advanced: Architect documents and learning resources, the minimum object privileges required for the Snowpipe user to execute Snowpipe are:

OWNERSHIP on the named pipe. This privilege allows the Snowpipe user to create, modify, and drop the pipe object that defines the COPY statement for loading data from the stage to the table1.

USAGE and READ on the named stage. These privileges allow the Snowpipe user to access and read the data files from the stage that are loaded by Snowpipe2.

USAGE on the target database and schema. These privileges allow the Snowpipe user to access the database and schema that contain the target table3.

INSERT and SELECT on the target table. These privileges allow the Snowpipe user to insert data into the table and select data from the table4.

The other options are incorrect because they do not specify the minimum object privileges required for the Snowpipe user to execute Snowpipe. Option A is incorrect because it does not include the READ privilege on the named stage, which is required for the Snowpipe user to read the data files from the stage. Option C is incorrect because it does not include the OWNERSHIP privilege on the named pipe, which is required for the Snowpipe user to create, modify, and drop the pipe object. Option D is incorrect because it does not include the OWNERSHIP privilege on the named pipe or the READ privilege on the named stage, which are both required for the Snowpipe user to execute Snowpipe.

References: CREATE PIPE | Snowflake Documentation, CREATE STAGE | Snowflake Documentation, CREATE DATABASE | Snowflake Documentation, CREATE TABLE | Snowflake Documentation
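The privilege list above can be sketched as a set of GRANT statements. The following is a minimal illustration, not a definitive setup script: the helper `snowpipe_grants` and every object name (`snowpipe_role`, `retail_db`, `events`, and so on) are hypothetical, and the code only builds SQL strings without connecting to Snowflake. It assumes that USAGE is the stage privilege that applies to external stages and READ is the one that applies to internal stages, so it emits whichever matches the stage type.

```python
def snowpipe_grants(role, database, schema, stage, table, pipe, stage_is_external=True):
    """Build GRANT statements for the minimum Snowpipe privileges.

    All names are supplied by the caller (hypothetical in this sketch).
    """
    fq = f"{database}.{schema}"
    # Assumption: USAGE applies to external stages, READ to internal stages.
    stage_privilege = "USAGE" if stage_is_external else "READ"
    return [
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role};",
        f"GRANT USAGE ON SCHEMA {fq} TO ROLE {role};",
        f"GRANT {stage_privilege} ON STAGE {fq}.{stage} TO ROLE {role};",
        f"GRANT INSERT, SELECT ON TABLE {fq}.{table} TO ROLE {role};",
        f"GRANT OWNERSHIP ON PIPE {fq}.{pipe} TO ROLE {role};",
    ]


# Hypothetical object names for an auto-ingest pipeline reading from an external stage.
statements = snowpipe_grants(
    role="snowpipe_role",
    database="retail_db",
    schema="events",
    stage="event_stage",
    table="event_logs",
    pipe="event_pipe",
)
for statement in statements:
    print(statement)
```

Granting only these privileges to a dedicated role, and granting that role to the Snowpipe user, keeps the loader from holding anything beyond what the pipe needs.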

A user has activated primary and secondary roles for a session.

What operation is the user prohibited from using as part of SQL actions in Snowflake using the secondary role?

Insert

Create

Delete

Truncate

In Snowflake, when a user activates secondary roles in a session, authorization to execute CREATE statements comes only from the primary role. For other SQL actions, such as INSERT, DELETE, or TRUNCATE, any privilege granted to an active primary or secondary role can be used. Because new objects are owned by the role that creates them, this restriction keeps object ownership tied to the primary role, so a user cannot rely on a secondary role to create objects.
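The rule can be illustrated with a toy authorization check. This is a simplified model written for this explanation, not Snowflake's implementation: the function `is_authorized`, the role names, and the flat privilege sets are all hypothetical.

```python
def is_authorized(action, privileges_by_role, primary_role, secondary_roles):
    """Toy model: CREATE is checked against the primary role only;
    every other action may use any active (primary or secondary) role."""
    if action == "CREATE":
        return "CREATE" in privileges_by_role.get(primary_role, set())
    active_roles = [primary_role, *secondary_roles]
    return any(action in privileges_by_role.get(role, set()) for role in active_roles)


# Hypothetical roles: the analyst role can only insert; the engineer role can do more.
privileges = {
    "analyst": {"INSERT"},
    "engineer": {"CREATE", "INSERT", "DELETE", "TRUNCATE"},
}

# Primary role is analyst, engineer is active as a secondary role.
can_delete = is_authorized("DELETE", privileges, "analyst", ["engineer"])
can_create = is_authorized("CREATE", privileges, "analyst", ["engineer"])
print(can_delete, can_create)
```

In this model the DELETE succeeds through the secondary role, while the CREATE is refused because the primary role lacks the privilege.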

A company has a source system that provides JSON records for various IoT operations. The JSON is loaded directly into a persistent table with a variant field. The data is quickly growing to hundreds of millions of records and performance is becoming an issue. There is a generic access pattern that is used to filter on the create_date key within the variant field.

What can be done to improve performance?

Alter the target table to include additional fields pulled from the JSON records. This would include a create_date field with a datatype of timestamp. When this field is used in the filter, partition pruning will occur.

Alter the target table to include additional fields pulled from the JSON records. This would include a create_date field with a datatype of varchar. When this field is used in the filter, partition pruning will occur.

Validate the size of the warehouse being used. If the record count is approaching 100s of millions, size XL will be the minimum size required to process this amount of data.

Incorporate the use of multiple tables partitioned by date ranges. When a user or process needs to query a particular date range, ensure the appropriate base table is used.

The correct answer is A because it reduces the amount of data scanned and processed. When create_date is stored as its own column with a timestamp data type, Snowflake records the column's minimum and maximum values for every micro-partition and can prune the micro-partitions whose range does not match the filter condition. Queries also no longer need to extract and cast the create_date key from the variant field for every record.
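The pruning idea can be sketched with a small simulation. This is a simplified model for illustration only, not how Snowflake stores data internally: the helpers `build_partitions` and `query_range`, the partition size, and the sample records are all hypothetical. Each simulated partition keeps the minimum and maximum of the extracted create_date column, and a range query skips any partition whose range cannot match.

```python
import json
from datetime import datetime, timedelta

# Hypothetical JSON records, one per day, as they might arrive from the source system.
raw_records = [
    json.dumps({
        "device": f"sensor-{i}",
        "create_date": (datetime(2024, 1, 1) + timedelta(days=i)).isoformat(),
    })
    for i in range(12)
]

# Pull create_date out of the JSON into a typed column next to the raw payload.
rows = []
for raw in raw_records:
    payload = json.loads(raw)
    rows.append({"create_date": datetime.fromisoformat(payload["create_date"]), "payload": payload})


def build_partitions(rows, size):
    """Group rows into fixed-size partitions and record min/max create_date for each."""
    partitions = []
    for start in range(0, len(rows), size):
        chunk = rows[start:start + size]
        dates = [row["create_date"] for row in chunk]
        partitions.append({"min_date": min(dates), "max_date": max(dates), "rows": chunk})
    return partitions


def query_range(partitions, start, end):
    """Return rows with start <= create_date < end and the number of partitions scanned."""
    scanned = 0
    hits = []
    for partition in partitions:
        # Metadata-only check: skip partitions whose range cannot overlap the filter.
        if partition["max_date"] < start or partition["min_date"] >= end:
            continue
        scanned += 1
        hits.extend(row for row in partition["rows"] if start <= row["create_date"] < end)
    return hits, scanned


partitions = build_partitions(rows, size=4)
hits, scanned = query_range(partitions, datetime(2024, 1, 5), datetime(2024, 1, 7))
print(f"{len(hits)} rows returned, {scanned} of {len(partitions)} partitions scanned")
```

Because the rows arrive in date order, each partition covers a narrow date range and the filter touches only one of the three partitions.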

Option B is incorrect because a varchar column is a poor fit for date filtering. Date values stored as strings must be cast or compared as text before a date range can be evaluated, so filters on create_date cannot prune micro-partitions as reliably as they can on a native timestamp column.

Option C is incorrect because it does not address the root cause of the performance issue. By validating the size of the warehouse being used, Snowflake can adjust the compute resources to match the data volume and parallelize the query execution. However, this does not reduce the amount of data scanned and processed, which is the main bottleneck for queries on JSON data.

Option D is incorrect because it adds unnecessary complexity and overhead to the data loading and querying process. By incorporating the use of multiple tables partitioned by date ranges, Snowflake can reduce the amount of data scanned and processed for queries that specify a date range. However, this requires creating and maintaining multiple tables, loading data into the appropriate table based on the date, and combining the tables (for example, with UNION ALL) for queries that span multiple date ranges.

References:

Snowflake Documentation: Loading Data Using Snowpipe: This document explains how to use Snowpipe to continuously load data from external sources into Snowflake tables. It also describes the syntax and usage of the COPY INTO command, which supports various options and parameters to control the loading behavior, such as ON_ERROR, PURGE, and SKIP_FILE.

Snowflake Documentation: Date and Time Data Types and Functions: This document explains the different data types and functions for working with date and time values in Snowflake. It also describes how to set and change the session timezone and the system timezone.

Snowflake Documentation: Querying Metadata: This document explains how to query the metadata of the objects and operations in Snowflake using various functions, views, and tables. It also describes how to access the copy history information using the COPY_HISTORY function or the COPY_HISTORY view.

Snowflake Documentation: Loading JSON Data: This document explains how to load JSON data into Snowflake tables using various methods, such as the COPY INTO command, the INSERT command, or the PUT command. It also describes how to access and query JSON data using the dot notation, the FLATTEN function, or the LATERAL join.

Snowflake Documentation: Optimizing Storage for Performance: This document explains how to optimize the storage of data in Snowflake tables to improve the performance of queries. It also describes the concepts and benefits of automatic clustering, search optimization service, and materialized views.

A company needs to have the following features available in its Snowflake account:

1. Support for Multi-Factor Authentication (MFA)

2. A minimum of 2 months of Time Travel availability

3. Database replication in between different regions

4. Native support for JDBC and ODBC

5. Customer-managed encryption keys using Tri-Secret Secure

6. Support for Payment Card Industry Data Security Standards (PCI DSS)

In order to provide all the listed services, what is the MINIMUM Snowflake edition that should be selected during account creation?

Standard

Enterprise

Business Critical

Virtual Private Snowflake (VPS)

According to the Snowflake documentation1, the Business Critical edition offers the following features that are relevant to the question:

Support for Multi-Factor Authentication (MFA): This is a standard feature available in all Snowflake editions1.

A minimum of 2 months of Time Travel availability: This is an enterprise feature that allows users to access historical data for up to 90 days1.

Database replication in between different regions: Database replication across regions or cloud platforms is available in all Snowflake editions; only failover and failback require Business Critical or higher1.

Native support for JDBC and ODBC: This is a standard feature available in all Snowflake editions1.

Customer-managed encryption keys using Tri-Secret Secure: This is a business critical feature that provides enhanced security and data protection by allowing customers to manage their own encryption keys1.

Support for Payment Card Industry Data Security Standards (PCI DSS): This is a business critical feature that ensures compliance with PCI DSS regulations for handling sensitive cardholder data1.

Therefore, the minimum Snowflake edition that should be selected during account creation to provide all the listed services is the Business Critical edition.
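The reasoning above amounts to taking the highest edition required by any single feature. A minimal sketch follows; the function `minimum_edition`, the feature keys, and the mapping itself are assumptions written for this explanation and should be verified against Snowflake's current edition matrix.

```python
# Editions ordered from lowest to highest.
EDITIONS = ["Standard", "Enterprise", "Business Critical", "Virtual Private Snowflake (VPS)"]

# Assumed lowest edition that offers each requirement (verify against current documentation).
FEATURE_MIN_EDITION = {
    "mfa": "Standard",
    "time_travel_over_1_day": "Enterprise",
    # Assumed to be available in all editions; treating it as Enterprise
    # would not change the result for this question.
    "database_replication": "Standard",
    "jdbc_odbc": "Standard",
    "tri_secret_secure": "Business Critical",
    "pci_dss": "Business Critical",
}


def minimum_edition(required_features):
    """Return the lowest edition that covers every required feature."""
    ranks = [EDITIONS.index(FEATURE_MIN_EDITION[feature]) for feature in required_features]
    return EDITIONS[max(ranks)]


required = list(FEATURE_MIN_EDITION)
result = minimum_edition(required)
print(result)
```

Tri-Secret Secure and PCI DSS support are the two requirements that push the answer past Enterprise.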

An Architect needs to meet a company requirement to ingest files from the company's AWS storage accounts into the company's Snowflake Google Cloud Platform (GCP) account. How can the ingestion of these files into the company's Snowflake account be initiated? (Select TWO).

Configure the client application to call the Snowpipe REST endpoint when new files have arrived in Amazon S3 storage.

Configure the client application to call the Snowpipe REST endpoint when new files have arrived in Amazon S3 Glacier storage.

Create an AWS Lambda function to call the Snowpipe REST endpoint when new files have arrived in Amazon S3 storage.

Configure AWS Simple Notification Service (SNS) to notify Snowpipe when new files have arrived in Amazon S3 storage.

Configure the client application to issue a COPY INTO