HOTSPOT

You need to troubleshoot the ad-hoc query issue.

How should you complete the statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You need to schedule the population of the medallion layers to meet the technical requirements.

What should you do?

You need to ensure that processes for the bronze and silver layers run in isolation. How should you configure the Apache Spark settings?

You need to resolve the sales data issue. The solution must minimize the amount of data transferred.

What should you do?

You need to implement the solution for the book reviews.

What should you do?

What should you do to optimize the query experience for the business users?

You have a Fabric workspace that contains a lakehouse named Lakehouse1.

In an external data source, you have data files that are 500 GB each. A new file is added every day.

You need to ingest the data into Lakehouse1 without applying any transformations. The solution must meet the following requirements:

Trigger the process when a new file is added.

Provide the highest throughput.

Which type of item should you use to ingest the data?

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

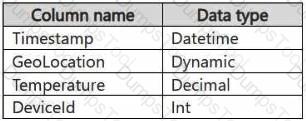

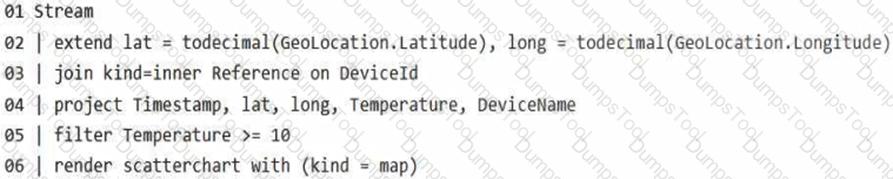

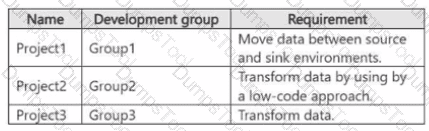

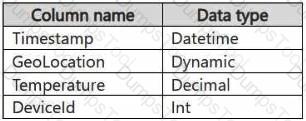

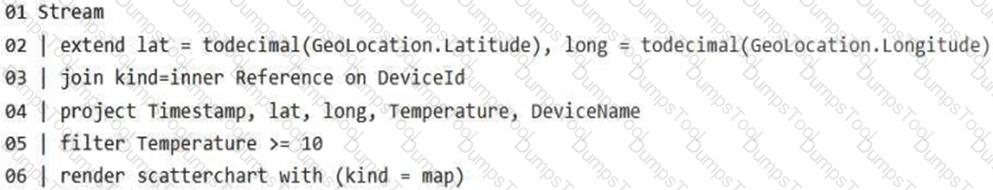

You have a KQL database that contains two tables named Stream and Reference. Stream contains streaming data in the following format.

Reference contains reference data in the following format.

Both tables contain millions of rows.

You have the following KQL queryset.

You need to reduce how long it takes to run the KQL queryset.

Solution: You change project to extend.

Does this meet the goal?

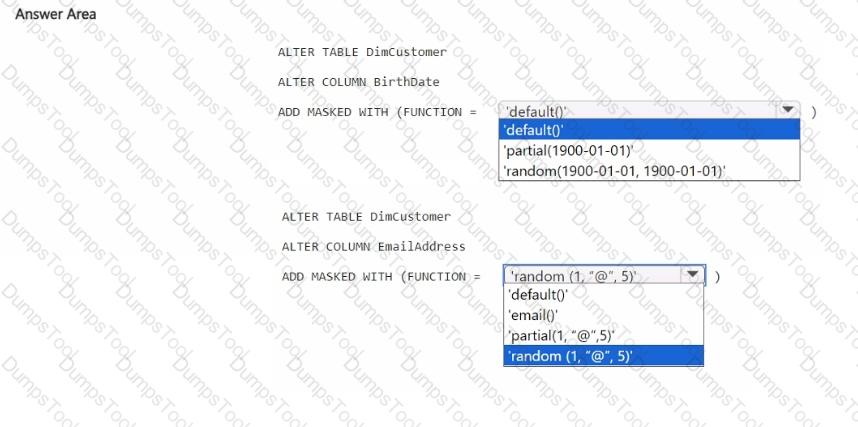

You have a Fabric workspace that contains a warehouse named Warehouse1. Warehouse1 contains a table named DimCustomers. DimCustomers contains the following columns:

• CustomerName

• CustomerID

• BirthDate

You need to configure security to meet the following requirements:

• BirthDate in DimCustomers must be masked and display 1900-01-01.

• Email in DimCustomers must be masked and display only the first leading character and the last five characters.

How should you complete the statement? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.
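To make the two masking requirements concrete, here is a minimal Python sketch that only imitates the visible effect of the masks on sample values. It is not warehouse code: the function names, the `XXX` padding string, and the sample email address are assumptions made for illustration, and the handling of very short strings is this sketch's own choice rather than documented engine behavior.

```python
from datetime import date


def mask_default_date(_value: date) -> date:
    """Imitate a default-style mask on a date column: every value reads as 1900-01-01."""
    return date(1900, 1, 1)


def mask_partial(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Imitate a partial-style mask: keep `prefix` leading and `suffix` trailing characters."""
    if len(value) <= prefix + suffix:
        # Assumption of this sketch: values too short to hide anything show only the padding.
        return padding
    # Slice from an explicit index so that suffix=0 yields an empty tail.
    tail = value[len(value) - suffix:]
    return value[:prefix] + padding + tail


# Hypothetical sample row, not taken from the question.
masked_birth_date = mask_default_date(date(1985, 7, 14))
masked_email = mask_partial("alice@contoso.com", prefix=1, padding="XXX", suffix=5)

print(masked_birth_date)  # 1900-01-01
print(masked_email)       # aXXXo.com
```

The sketch shows why the two requirements call for different mask shapes: the date requirement replaces the whole value with a fixed date, while the email requirement needs three parameters (leading count, padding, trailing count).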

You have a Fabric workspace that contains a warehouse named Warehouse1.

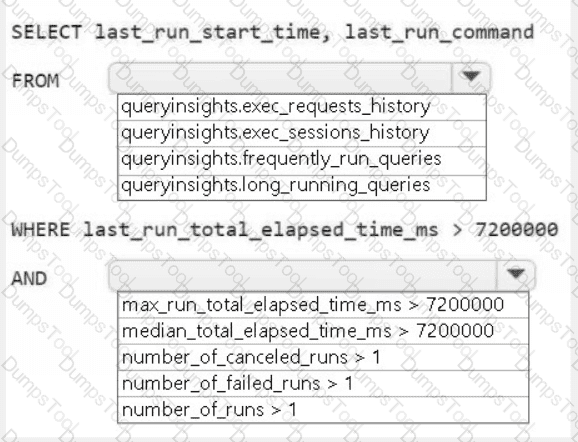

While monitoring Warehouse1, you discover that query performance has degraded during the last 60 minutes.

You need to isolate all the queries that were run during the last 60 minutes. The results must include the username of the users that submitted the queries and the query statements. What should you use?

You have a Fabric workspace that contains a warehouse named Warehouse1.

You have an on-premises Microsoft SQL Server database named Database1 that is accessed by using an on-premises data gateway.

You need to copy data from Database1 to Warehouse1.

Which item should you use?

You have a Fabric workspace that contains a warehouse named DW1. DW1 is loaded by using a notebook named Notebook1.

You need to identify which version of Delta was used when Notebook1 was executed.

What should you use?

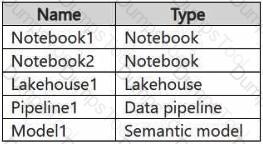

You have a Fabric workspace named Workspace1 that contains the items shown in the following table.

For Model1, the Keep your Direct Lake data up to date option is disabled.

You need to configure the execution of the items to meet the following requirements:

Notebook1 must execute every weekday at 8:00 AM.

Notebook2 must execute when a file is saved to an Azure Blob Storage container.

Model1 must refresh when Notebook1 has executed successfully.

How should you orchestrate each item? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

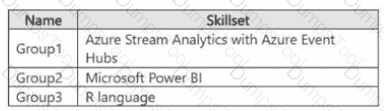

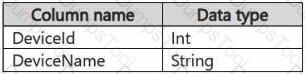

You have the development groups shown in the following table.

You have the projects shown in the following table.

You need to recommend which Fabric item to use based on each development group's skillset. The solution must meet the project requirements and minimize development effort.

What should you recommend for each group? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

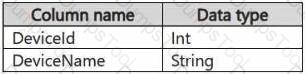

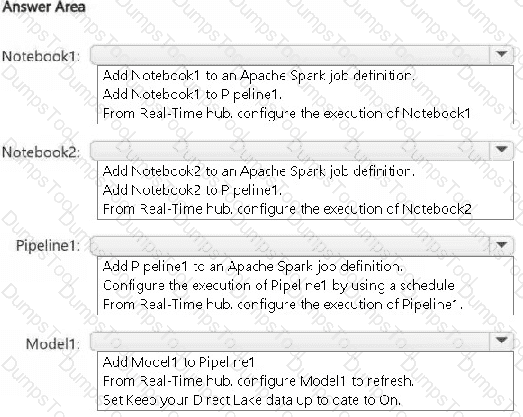

You have a KQL database that contains two tables named Stream and Reference. Stream contains streaming data in the following format.

Reference contains reference data in the following format.

Both tables contain millions of rows.

You have the following KQL queryset.

You need to reduce how long it takes to run the KQL queryset.

Solution: You change the join type to kind=outer.

Does this meet the goal?

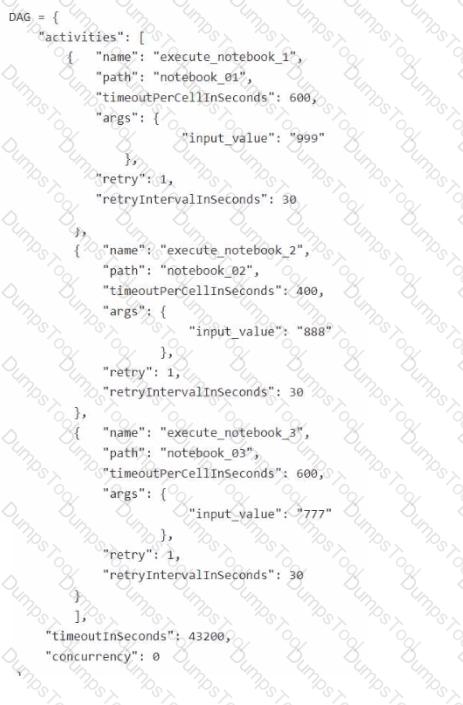

You are building a Fabric notebook named MasterNotebook1 in a workspace. MasterNotebook1 contains the following code.

You need to ensure that the notebooks are executed in the following sequence:

1. Notebook_03

2. Notebook_01

3. Notebook_02

Which two actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.
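The notebook code referenced by this question is not reproduced here, so the following is only a sketch of the general idea: a required run order can be expressed as a dependency graph in which each notebook lists the notebooks that must finish first. The `dag` dictionary is hypothetical; its shape (an `activities` list whose entries carry `name`, `path`, and `dependencies`) is assumed to resemble the DAG that Fabric notebook utilities accept for running multiple notebooks. The sketch does not call any Fabric API and uses only the Python standard library to derive the order.

```python
from graphlib import TopologicalSorter

# Hypothetical DAG, not the code from the question.
# Each activity names the activities that must complete before it starts.
dag = {
    "activities": [
        {"name": "Notebook_01", "path": "Notebook_01", "dependencies": ["Notebook_03"]},
        {"name": "Notebook_02", "path": "Notebook_02", "dependencies": ["Notebook_01"]},
        {"name": "Notebook_03", "path": "Notebook_03", "dependencies": []},
    ]
}


def execution_order(dag: dict) -> list[str]:
    """Return activity names in an order that respects every declared dependency."""
    # TopologicalSorter expects a mapping of node -> set of predecessor nodes.
    graph = {a["name"]: set(a.get("dependencies", [])) for a in dag["activities"]}
    return list(TopologicalSorter(graph).static_order())


order = execution_order(dag)
print(order)  # ['Notebook_03', 'Notebook_01', 'Notebook_02']
```

Because the dependencies form a single chain, only one valid order exists. Removing a dependency (for example, leaving Notebook_02 with an empty list) would allow more than one order, which is why each step in a strict sequence needs its predecessor declared.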

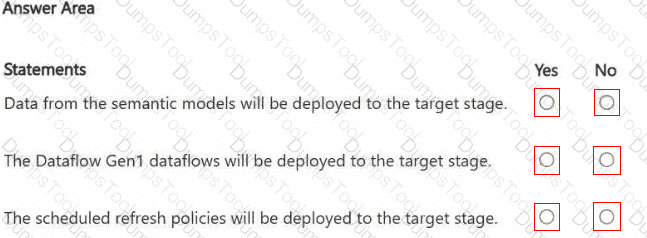

HOTSPOT

You have a Fabric workspace named Workspace1_DEV that contains the following items:

10 reports

Four notebooks

Three lakehouses

Two data pipelines

Two Dataflow Gen1 dataflows

Three Dataflow Gen2 dataflows

Five semantic models that each have a scheduled refresh policy

You create a deployment pipeline named Pipeline1 to move items from Workspace1_DEV to a new workspace named Workspace1_TEST.

You deploy all the items from Workspace1_DEV to Workspace1_TEST.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.