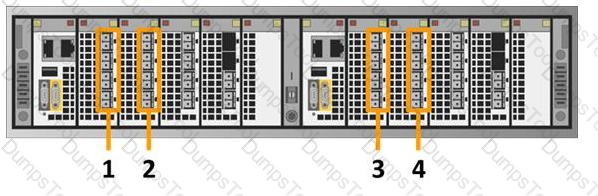

Refer to the exhibit.

A storage administrator is experiencing an unplanned site outage. After recovery of the network, they are still unable to access the management server.

Which port on the back of the management server can the administrator connect to with a hardcoded IP address to access the VPLEX cluster and troubleshoot the issue?

C

A

D

B

In the event of an unplanned site outage and subsequent recovery, if a storage administrator is unable to access the management server, they can connect to a specific port on the back of the management server with a hardcoded IP address to regain access to the VPLEX cluster and begin troubleshooting.

Identify the Management Port: The management server typically has a dedicated port that can be used for direct access in case of network issues. This port is designed to accept a hardcoded IP address for such scenarios.

Connect to the Management Port: The administrator should use an Ethernet cable to connect a laptop or a computer directly to the management port, which is usually labeled and can be identified based on the server’s hardware manual or documentation.

Configure the Hardcoded IP Address: On the connected device, the administrator needs to configure a static IP address that is in the same subnet as the management server’s default IP range.

Access the VPLEX CLI: Once the connection is established, the administrator can use SSH or a similar protocol to access the VPLEX Command Line Interface (CLI) through the hardcoded IP address.

Troubleshoot the Issue: With access to the VPLEX CLI, the administrator can now run diagnostic commands, check the system status, and troubleshoot the connectivity issue.

In this scenario, the correct port to connect to is labeled ‘A’ on the back of the management server. This port is reserved for such direct access and allows the administrator to bypass the network to troubleshoot and resolve the issue.
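The static-address step can be sanity-checked before connecting the cable. The following is a minimal sketch: the addresses come from the 192.0.2.0/24 documentation range and the /27 mask is a hypothetical placeholder, so the real port address and mask must be taken from the VPLEX hardware documentation.

```python
import ipaddress


def same_subnet(laptop_ip: str, server_ip: str, netmask: str) -> bool:
    """Return True if laptop_ip lies in the subnet defined by server_ip/netmask."""
    # strict=False allows a host address (not only a network address) as input.
    network = ipaddress.ip_network(f"{server_ip}/{netmask}", strict=False)
    return ipaddress.ip_address(laptop_ip) in network


# Placeholder values (assumptions, not the real VPLEX defaults).
SERVER_IP = "192.0.2.2"
NETMASK = "255.255.255.224"

if __name__ == "__main__":
    for candidate in ("192.0.2.3", "192.0.2.40"):
        print(candidate, same_subnet(candidate, SERVER_IP, NETMASK))
```

A laptop address that fails this check would not reach the port over a direct cable, whatever the physical connection.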

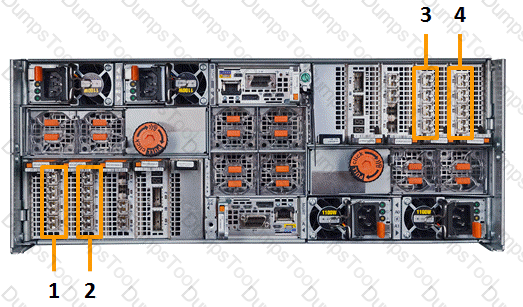

Which number in the exhibit highlights the Director-B front-end ports?

4

2

1

3

In a VPLEX system, each director module has front-end (FE) and back-end (BE) ports for connectivity. The FE ports connect to hosts, while the BE ports connect to storage arrays. Based on standard configurations and assuming that Director-A and Director-B are mirrored in layout, the number that highlights the Director-B front-end ports is typically 2.

Director Modules: VPLEX systems consist of director modules, each containing ports designated for specific functions. Director-B is one of these modules.

Front-End Ports: The front-end ports on Director-B are used for host connectivity and are essential for the operation of the VPLEX system.

Port Identification: During the installation and setup of a VPLEX system, correctly identifying and utilizing the FE ports is crucial. This includes connecting the VPLEX to the host environment and ensuring proper communication between the storage system and the hosts.

Documentation Reference: For precise identification and configuration of the FE ports on Director-B, the Dell VPLEX Deploy Achievement documents provide detailed instructions and diagrams.

Best Practices: It is recommended to follow the guidelines provided in the Dell VPLEX documentation for port identification and installation utilities to ensure correct setup and configuration of the VPLEX system.

In summary, the number 2 in the exhibit corresponds to the Director-B front-end ports in a Dell VPLEX system, which are critical for host connectivity and system operation.

When are the front-end ports enabled during a VPLEX installation?

Before launching the VPLEX EZ-Setup wizard

Before creating the metadata volumes and backup

After exposing the storage to the hosts

After creating the metadata volumes and backup

During a VPLEX installation, the front-end ports are enabled after the metadata volumes and backup have been created. This sequence ensures that the system’s metadata, which is crucial for the operation of VPLEX, is secured before the storage is exposed to the hosts.

Metadata Volumes Creation: The first step in the VPLEX installation process involves creating metadata volumes. These volumes store configuration and operational data necessary for VPLEX to manage the virtualized storage environment.

Metadata Backup: After the metadata volumes are created, it is essential to back up this data. The backup serves as a safeguard against data loss and is a critical step before enabling the front-end ports.

Enabling Front-End Ports: Once the metadata is secured, the front-end ports can be enabled. These ports are used for host connectivity, allowing hosts to access the virtual volumes presented by VPLEX.

Exposing Storage to Hosts: With the front-end ports enabled, the storage can then be exposed to the hosts. This step involves presenting the virtual volumes to the hosts through the front-end ports.

Final Configuration: The final configuration steps may include zoning, LUN masking, and setting up host access to the VPLEX virtual volumes. These steps are completed after the front-end ports are enabled and the storage is exposed.

In summary, the front-end ports are enabled during a VPLEX installation after the metadata volumes and backup have been created, which is reflected in option D. This ensures that the system metadata is protected and available before the storage is made accessible to the hosts.
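The ordering described above can be expressed as a small check. This is only an illustrative sketch: the step names are hypothetical labels for the stages in the explanation, not VPLEX commands.

```python
from typing import List

# Hypothetical step labels, in the order given in the explanation.
INSTALL_ORDER = [
    "create_metadata_volumes",
    "back_up_metadata",
    "enable_front_end_ports",
    "expose_storage_to_hosts",
]


def is_valid_sequence(steps: List[str]) -> bool:
    """Return True if steps keep the relative order of INSTALL_ORDER.

    Raises ValueError if a step is not one of the known labels.
    """
    positions = [INSTALL_ORDER.index(step) for step in steps]
    no_duplicates = len(set(steps)) == len(steps)
    return no_duplicates and positions == sorted(positions)


if __name__ == "__main__":
    print(is_valid_sequence(INSTALL_ORDER))
    print(is_valid_sequence(["enable_front_end_ports", "back_up_metadata"]))
```

The second call models enabling the front-end ports before the metadata backup exists, which is the ordering the correct answer rules out.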

What must be done after deploying Cluster Witness?

Complete the VPLEX Metro setup

Configure Cluster Witness CLI context

Configure 2-way VPN

Configure consistency groups

After deploying the VPLEX Cluster Witness, the next step is to configure a 2-way VPN between the Witness and both VPLEX sites. This is essential for the Cluster Witness to communicate with each cluster and provide the health check heartbeats required for monitoring.

VPN Configuration: Configure the VPN to ensure secure communication between the VPLEX Cluster Witness and the management servers of both VPLEX clusters.

Enable Cluster Witness: Once the VPN is configured, enable the VPLEX Cluster Witness on the VPLEX sites. This involves setting up the Cluster Witness to monitor the health and status of the VPLEX clusters.

Test Connectivity: After enabling the Cluster Witness, test the connectivity between the Cluster Witness and the VPLEX clusters to ensure that the health check heartbeats are being transmitted and received correctly.

Finalize Setup: Complete any additional setup tasks as required by the VPLEX Metro configuration, such as configuring consistency groups or completing the VPLEX Metro setup.

Documentation Reference: For detailed instructions and best practices, refer to the Dell VPLEX Deploy Achievement documents, which provide comprehensive guidance on deploying and configuring the VPLEX Cluster Witness.

In summary, configuring a 2-way VPN is a critical step after deploying the VPLEX Cluster Witness to ensure it can perform its monitoring role effectively within the VPLEX Metro environment.

Which number in the exhibit highlights the Director-B back-end ports?

3

4

2

1

To identify the Director-B back-end ports in a VPLEX system, one must understand the standard port numbering and layout for VPLEX directors. Based on the information provided in the Dell community forum, the back-end ports for Director-B can be identified by the following method:

Director Identification: Determine which director is Director-B. In a VPLEX system, directors are typically labeled as A or B, and each has a set of front-end and back-end ports.

Port Numbering: The port numbering for a VPLEX director follows a specific pattern. For example, in a VS2 system, the back-end ports are typically numbered starting from 10 onwards, following the front-end ports which are numbered from 00.

Back-End Ports: Based on the standard VPLEX port numbering, the back-end ports for Director-B would be the second set of ports after the front-end ports. This is because the front-end ports are used for host connectivity, while the back-end ports connect to the storage arrays.

Exhibit Analysis: In the exhibit provided, if the numbering follows the standard VPLEX layout, number 4 would highlight the Director-B back-end ports, assuming that number 3 highlights the front-end ports and the numbering continues sequentially.

Verification: To verify the correct identification of the back-end ports, one can refer to the official Dell VPLEX documentation or use the VPLEX CLI to list the ports and their roles within the system.

In summary, based on the standard layout and numbering of VPLEX systems, number 4 in the exhibit likely highlights the Director-B back-end ports. This identification is crucial for proper configuration and management of the VPLEX system.

What condition would prevent volume expansion?

Logging volume in re-synchronization state

Metadata volume being backed up

Rebuild currently occurring on the volume

Volume not belonging to a consistency group

In the context of Dell VPLEX, a rebuild occurring on a volume is a condition that would prevent the expansion of that volume. This is because the system needs to ensure data integrity and consistency during the rebuild process before any changes to the volume size can be made.

Rebuild Process: A rebuild is a process where VPLEX re-synchronizes the data across the storage volumes, typically after a disk replacement or a failure.

Volume Expansion: Expanding a volume involves increasing its size to accommodate more data. This process requires that the volume is in a stable state without any ongoing rebuild operations.

Data Integrity: During a rebuild, the system is focused on restoring the correct data across the storage volumes. Attempting to expand a volume during this process could lead to data corruption or loss.

System Restrictions: VPLEX systems have built-in mechanisms to prevent administrators from performing actions that could jeopardize the system’s stability or data integrity, such as expanding a volume during a rebuild.

Post-Rebuild Expansion: Once the rebuild process is complete and the volume is fully synchronized, the administrator can then proceed with the volume expansion.

In summary, a rebuild currently occurring on a volume is a condition that would prevent the expansion of that volume in a Dell VPLEX system. The system must first ensure that the rebuild process is completed successfully before allowing any changes to the volume’s size.
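The rule can be pictured as a simple pre-check that runs before any expansion request. The sketch below is a toy model: the `Volume` class and its `rebuild_in_progress` field are hypothetical and do not correspond to VPLEX CLI attributes.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Volume:
    name: str
    rebuild_in_progress: bool  # hypothetical flag for this illustration


def can_expand(volume: Volume) -> bool:
    """A volume is eligible for expansion only when no rebuild is running on it."""
    return not volume.rebuild_in_progress


def expandable(volumes: List[Volume]) -> List[str]:
    """Return the names of the volumes that pass the pre-check."""
    return [v.name for v in volumes if can_expand(v)]


if __name__ == "__main__":
    volumes = [Volume("vol_a", False), Volume("vol_b", True)]
    print(expandable(volumes))
```

Once the rebuild on a blocked volume finishes, the same check would let the expansion proceed.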

LUNs are being provisioned from active/passive arrays to VPLEX. What is the path requirement for each VPLEX director when connecting to this type of array?

At least two paths to both the active and non-preferred controllers of each array

At least four paths to every array and storage volume

At least two paths to every array and storage volume

At least two paths to both the active and passive controllers of each array

When provisioning LUNs from active/passive arrays to VPLEX, it is essential that each VPLEX director has at least two paths to both the active and passive controllers of each array. This requirement ensures high availability and redundancy for the storage volumes being managed by VPLEX.

Active/Passive Arrays: Active/passive arrays have one controller actively serving I/O (active) and another on standby (passive). The VPLEX system must have paths to both controllers to maintain access to the LUNs in case the active controller fails.

Path Redundancy: Having at least two paths to both controllers from each VPLEX director provides redundancy. If one path fails, the other can continue to serve I/O, preventing disruption to the host applications.

VPLEX Configuration: In the VPLEX configuration, paths are zoned and masked to ensure that the VPLEX directors can access the LUNs on the storage arrays. Proper zoning and masking are critical for the paths to function correctly.

Failover Capability: The dual-path configuration allows VPLEX to perform an automatic failover to the passive controller if the active controller becomes unavailable, ensuring continuous data availability.

Best Practices: Following the path requirement as per Dell EMC’s best practices ensures that the VPLEX system can provide the expected level of service and data protection for the provisioned LUNs.

In summary, the path requirement for each VPLEX director when connecting to active/passive arrays is to have at least two paths to both the active and passive controllers of each array, providing the necessary redundancy and failover capabilities.
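The requirement lends itself to a quick audit of a planned path layout. The following sketch assumes a hand-built dictionary of path counts per director; the director names and the data layout are hypothetical and not produced by any VPLEX tool.

```python
from typing import Dict, List, Tuple

MIN_PATHS = 2  # at least two paths per controller role, per the explanation


def directors_missing_paths(
    paths: Dict[str, Dict[str, int]]
) -> List[Tuple[str, str]]:
    """Return (director, controller_role) pairs with fewer than MIN_PATHS paths.

    paths maps a director name to {"active": count, "passive": count}.
    """
    problems = []
    for director, counts in sorted(paths.items()):
        for role in ("active", "passive"):
            if counts.get(role, 0) < MIN_PATHS:
                problems.append((director, role))
    return problems


if __name__ == "__main__":
    planned = {
        "director-A": {"active": 2, "passive": 2},
        "director-B": {"active": 2, "passive": 1},
    }
    print(directors_missing_paths(planned))
```

An empty result means every director meets the two-path minimum to both controllers of the array being checked.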

Which type of volume is subjected to high levels of I/O only during a WAN COM failure?

Distributed volume

Logging volume

Metadata volume

Virtual volume

The correct answer is the logging volume (option B).

During a WAN COM failure in a VPLEX Metro environment, logging volumes are subjected to high levels of I/O. This is because logging volumes track the writes made to distributed volumes while the clusters cannot communicate, so that the affected data can be resynchronized afterwards. This tracking plays a critical role during recovery, especially when there is a communication failure between clusters.

Role of Logging Volumes: Logging volumes in VPLEX are designed to capture write operations that cannot be immediately mirrored across the clusters due to network issues or WAN COM failures.

WAN COM Failure: When a WAN COM failure occurs, the VPLEX system continues to write to the local logging volumes to ensure no data loss. Once the WAN COM link is restored, the logs are used to synchronize the data across the clusters.

High I/O Levels: The high levels of I/O on the logging volumes during a WAN COM failure are due to the accumulation of write operations that need to be logged until the link is restored and the data can be synchronized.

Recovery Process: After the WAN COM link is restored, the VPLEX system uses the data in the logging volumes to rebuild and synchronize the distributed volumes, ensuring data consistency and integrity.

Best Practices: EMC best practices recommend monitoring the health and performance of logging volumes, especially during WAN COM failures, to ensure they can handle the increased I/O load and maintain system performance.

In summary, logging volumes experience high levels of I/O only during a WAN COM failure as they are responsible for capturing and storing write operations until the communication between clusters can be re-established and data synchronization can occur.
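The behaviour described above can be illustrated with a toy model. This is a simplified sketch under stated assumptions: the `DirtyRegionLog` class is hypothetical, the "log" is just a set of block numbers written while the link is down, and none of this reflects the actual on-disk format of a VPLEX logging volume.

```python
class DirtyRegionLog:
    """Toy model: remember which blocks changed while the inter-cluster link is down."""

    def __init__(self):
        self.link_up = True
        self.local = {}     # block number -> data at the local cluster
        self.remote = {}    # block number -> data at the remote cluster
        self.dirty = set()  # blocks written during the outage

    def write(self, block: int, data: str) -> None:
        self.local[block] = data
        if self.link_up:
            self.remote[block] = data  # mirrored immediately
        else:
            self.dirty.add(block)      # logged for later resynchronization

    def restore_link(self) -> list:
        """Bring the link back and copy only the logged blocks to the remote side."""
        self.link_up = True
        resynced = sorted(self.dirty)
        for block in resynced:
            self.remote[block] = self.local[block]
        self.dirty.clear()
        return resynced


if __name__ == "__main__":
    log = DirtyRegionLog()
    log.write(0, "a")
    log.link_up = False  # simulate the WAN COM failure
    log.write(1, "b")
    log.write(2, "c")
    print(log.restore_link(), log.remote == log.local)
```

In the model, the log is touched only while the link is down, which mirrors why the logging volume sees heavy I/O only during the failure.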

What information is required to configure ESRS for VPLEX?

VPLEX Model Type, Top Level Assembly, Site ID, IP address of the Management Server public port

ESRS Gateway Account, Site ID, VPLEX configuration, Top Level Assembly

IP Address of the Management Server public port, Front-end and Back-end connectivity, IP subnets, Putty utility

Site ID, VPLEX Site Preparation Guide, VPLEX configuration, VPLEX model type

To configure EMC Secure Remote Services (ESRS) for VPLEX, certain key pieces of information are required:

ESRS Gateway Account: An account on the ESRS Gateway is necessary to enable secure communication between the VPLEX system and EMC’s support infrastructure.

Site ID: The Site ID uniquely identifies the location of the VPLEX system and is used by EMC support to track and manage service requests.

VPLEX Configuration: Details of the VPLEX configuration, including the number of engines, clusters, and connectivity options, are required to properly set up ESRS monitoring.

Top Level Assembly: The Top Level Assembly number is a unique identifier for the VPLEX system that helps EMC support to quickly access system details and configuration.

These details are essential for setting up ESRS, which allows for proactive monitoring and remote support capabilities for the VPLEX system. The ESRS configuration ensures that EMC can provide timely and effective support services.

What is a best practice when connecting a VPLEX Cluster-1 to VPLEX Cluster-2?

Create 32 zones between Cluster-1 and the Cluster-2 VPLEX cluster

Create 16 zones between Cluster-1 and the Cluster-2 VPLEX cluster

Zone every Cluster-1 director port to every Cluster-2 director port in each fabric

Zone every Cluster-1 WAN port to every Cluster-2 WAN port in each fabric

When connecting a VPLEX Cluster-1 to VPLEX Cluster-2, the best practice is to ensure that every director port in Cluster-1 is zoned to every director port in Cluster-2 within each fabric. This approach is recommended to maintain a robust and resilient storage network that can handle failover scenarios and provide continuous availability.

Zoning Directors: Zoning is a SAN best practice that isolates traffic within the fabric to specific devices. By zoning every director port from one cluster to every director port in the other cluster, you ensure that there are multiple paths for communication, which enhances redundancy and fault tolerance.

Fabric Configuration: Each fabric should be configured separately to maintain isolation between the paths. This prevents a single point of failure from affecting all paths and allows for continued operation even if one fabric experiences issues.

Path Redundancy: With every director port zoned to its counterpart in the other cluster, there are multiple paths for data to travel. This redundancy is crucial for VPLEX Metro’s high availability, as it allows for seamless failover between clusters.

Continuous Availability: VPLEX is designed for continuous availability, and proper zoning is key to achieving this. The zoning configuration should support VPLEX’s ability to provide uninterrupted access to data, even in the event of hardware failures or maintenance activities.

Best Practices Documentation: Dell EMC provides detailed documentation on VPLEX implementation and best practices, including zoning recommendations. It is important to consult these documents when planning and implementing zoning for VPLEX clusters.

In summary, the best practice for connecting VPLEX Cluster-1 to Cluster-2 is to zone every director port to every director port in each fabric, ensuring multiple paths for communication and enhancing the overall resilience of the storage network.
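The "every port to every port, per fabric" rule amounts to a Cartesian product of the two port lists. The sketch below only enumerates the pairs; the port and fabric names are hypothetical, and the zone-name format is an arbitrary choice for illustration, not a switch vendor convention.

```python
from itertools import product
from typing import List


def full_mesh_zones(
    fabric: str, cluster1_ports: List[str], cluster2_ports: List[str]
) -> List[str]:
    """Return one zone name per (Cluster-1 port, Cluster-2 port) pair in a fabric."""
    return [
        f"{fabric}:{c1}__{c2}" for c1, c2 in product(cluster1_ports, cluster2_ports)
    ]


if __name__ == "__main__":
    # Hypothetical port names for one fabric.
    c1 = ["c1-dirA-p0", "c1-dirB-p0"]
    c2 = ["c2-dirA-p0", "c2-dirB-p0"]
    for zone in full_mesh_zones("fabric-A", c1, c2):
        print(zone)
```

The number of zones per fabric is the product of the two port counts, so the total grows quickly as directors are added; running the function once per fabric keeps the fabrics isolated, as the explanation recommends.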

Using the Storage Volume expansion method for virtual volumes built on RAID-1 or distributed RAID-1 devices, what is the maximum number of initialization processes that can run concurrently, per cluster?

100

500

250

1000

Context: The Dell VPLEX system allows for the expansion of virtual volumes to accommodate growing data storage needs without disrupting ongoing operations.

Initialization Process: When expanding storage volumes, the system undergoes initialization processes to integrate the new storage capacity effectively.

Concurrent Processes Limit: For virtual volumes built on RAID-1 or distributed RAID-1 devices, the maximum number of initialization processes that can run concurrently per cluster is 1000. This limit ensures optimal performance and resource management within the cluster.

Implications: If the limit of 1000 concurrent processes is reached, no new storage volume expansions can be initiated until some of the ongoing initialization processes are completed.
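The effect of the limit can be modelled as a simple admission counter. This is a sketch only: the `InitializationTracker` class is hypothetical, and the value 1000 is taken from the explanation above rather than read from a live system.

```python
MAX_CONCURRENT_INITIALIZATIONS = 1000  # per cluster, as stated in the explanation


class InitializationTracker:
    """Toy admission control for concurrent initialization processes in one cluster."""

    def __init__(self, limit: int = MAX_CONCURRENT_INITIALIZATIONS):
        self.limit = limit
        self.running = 0

    def try_start(self) -> bool:
        """Start a new initialization if the cluster is below its limit."""
        if self.running >= self.limit:
            return False
        self.running += 1
        return True

    def finish(self) -> None:
        """Mark one initialization as complete, freeing a slot."""
        if self.running > 0:
            self.running -= 1


if __name__ == "__main__":
    tracker = InitializationTracker()
    started = sum(tracker.try_start() for _ in range(1001))
    print(started, tracker.try_start())
```

A request that arrives at the limit is refused until `finish()` frees a slot, which matches the implication described above.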

References:

Dell EMC Metro Node Administrator Guide

Once installed, how does VPLEX Cluster Witness communicate with each cluster to provide the health check heartbeats required for monitoring?

VPN Tunnel over IP to each management server

UDP over IP to each management server

Native iSCSI connections to each management server

In-band Fibre Channel to each management server

The VPLEX Cluster Witness communicates with each cluster using a VPN Tunnel over IP to the management servers. This method is used to provide the health check heartbeats required for monitoring the status of the clusters.

VPN Tunnel Creation: Initially, a VPN tunnel is established between the VPLEX Cluster Witness and each VPLEX cluster’s management server. This secure tunnel ensures that the communication for health checks is protected.

Health Check Heartbeats: Through the VPN tunnel, the VPLEX Cluster Witness sends periodic health check heartbeats to each management server. These heartbeats are used to monitor the operational status of the clusters.

Monitoring Cluster Health: The health check heartbeats are essential for the VPLEX Cluster Witness to determine the health of each cluster. If a heartbeat is missed, it may indicate a potential issue with the cluster.

Failover Decisions: Based on the health check heartbeats, the VPLEX Cluster Witness can make informed decisions about failover actions if one of the clusters becomes unresponsive or encounters a failure.

Configuration and Management: The configuration and management of the VPN tunnels and the health check mechanism are typically done through the VPLEX management console or CLI, following the best practices outlined in the Dell VPLEX Deploy Achievement documents.

In summary, the VPLEX Cluster Witness uses a VPN Tunnel over IP to communicate with each cluster’s management server, providing the necessary health check heartbeats for continuous monitoring and ensuring high availability of the VPLEX system.
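The heartbeat idea can be sketched generically. The model below is an assumption-laden illustration: the `HeartbeatMonitor` class, the cluster names, and the 5-second timeout are all hypothetical, and the VPN transport itself is not modelled.

```python
from typing import Dict, List, Optional


class HeartbeatMonitor:
    """Toy model: flag clusters whose last heartbeat is older than a timeout."""

    def __init__(self, clusters: List[str], timeout_seconds: float):
        self.timeout = timeout_seconds
        self.last_seen: Dict[str, Optional[float]] = {c: None for c in clusters}

    def record(self, cluster: str, now: float) -> None:
        """Record that a heartbeat from the given cluster arrived at time now."""
        self.last_seen[cluster] = now

    def unresponsive(self, now: float) -> List[str]:
        """Return clusters with no heartbeat yet or one older than the timeout."""
        return sorted(
            cluster
            for cluster, seen in self.last_seen.items()
            if seen is None or now - seen > self.timeout
        )


if __name__ == "__main__":
    monitor = HeartbeatMonitor(["cluster-1", "cluster-2"], timeout_seconds=5.0)
    monitor.record("cluster-1", 100.0)
    monitor.record("cluster-2", 100.0)
    monitor.record("cluster-1", 104.0)
    print(monitor.unresponsive(108.0))
```

Time is passed in explicitly rather than read from a clock, which keeps the sketch deterministic and easy to reason about.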

What is a consideration when performing batched data mobility jobs using the VPlexcli?

Allows for more than 25 concurrent migrations

Allows only one type of data mobility job per plan

Allows for the user to overwrite a device target with a configured virtual volume

Allows for the user to migrate an extent to a smaller target if thin provisioned

When performing batched data mobility jobs using the VPlexcli, a key consideration is that each batched mobility job plan can only contain one type of data mobility job. This means that all the migrations within a single plan must be of the same type: a plan contains either extent migrations or device migrations, but not both.

Creating a Mobility Job Plan: When creating a batched data mobility job plan using the VPlexcli, you initiate a plan that will contain a series of individual migration jobs.

Job Type Consistency: Within this plan, all the jobs must be of the same type to ensure consistency and predictability in the execution of the jobs. This helps in managing resources and dependencies effectively.

Execution of the Plan: Once the plan is created and initiated, the VPlexcli will execute each job in the order they were added to the plan. The system ensures that the resources required for each job are available and that the jobs do not conflict with each other.

Monitoring and Completion: As the jobs are executed, their progress can be monitored through the VPlexcli. Upon completion of all jobs in the plan, the system will report the status and any issues encountered during the migrations.

Best Practices: It is recommended to follow best practices for data mobility using VPlexcli as outlined in the Dell VPLEX Deploy Achievement documents. This includes planning migrations carefully, understanding the types of jobs that can be batched together, and ensuring that the system is properly configured for the migrations.

In summary, when performing batched data mobility jobs using the VPlexcli, it is important to remember that only one type of data mobility job is allowed per plan. This consideration is crucial for the successful execution and management of batched data mobility jobs in a VPLEX environment.
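The one-type-per-plan rule can be modelled as a validation step when jobs are added. The sketch assumes the two job types are "device" and "extent" migrations; the `MobilityPlan` class and the object names are hypothetical and do not reproduce VPlexcli syntax.

```python
from typing import List, Optional, Tuple


class MixedJobTypeError(ValueError):
    """Raised when a job of a different type is added to an existing plan."""


class MobilityPlan:
    """Toy model of a batched mobility plan that accepts a single job type."""

    def __init__(self, name: str):
        self.name = name
        self.job_type: Optional[str] = None  # fixed by the first job added
        self.jobs: List[Tuple[str, str]] = []

    def add(self, job_type: str, source: str, target: str) -> None:
        if self.job_type is None:
            self.job_type = job_type
        elif job_type != self.job_type:
            raise MixedJobTypeError(
                f"plan {self.name!r} holds {self.job_type} jobs, got {job_type}"
            )
        self.jobs.append((source, target))


if __name__ == "__main__":
    plan = MobilityPlan("plan-1")
    plan.add("device", "dev_a", "dev_b")
    try:
        plan.add("extent", "ext_a", "ext_b")
    except MixedJobTypeError as err:
        print("rejected:", err)
```

Mixed workloads would therefore need two plans, one per job type.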
